Hello,

I'm using Intel FPGA AI Suite 2023.2 on an Ubuntu 20.04 host computer and trying to run inference with a custom CNN on an Intel Arria 10 SoC FPGA.

I have followed the Intel FPGA AI Suite SoC Design Example Guide and I'm able to compile the Intel FPGA AI Suite IP and run the M2M and S2M examples.

I have also compiled the graph for my custom NN and I'm trying to run it with the Intel FPGA AI Suite IP, but it is not clear to me how to do it. I'm trying to use the provided dla_benchmark app but, for example, the input data of my NN must be float (the NN was trained and the graph was compiled this way), whereas the input data of the IP must be int8, if I'm not wrong.

Another problem I have concerns the ground truth file. I have a ground truth file for each input file because each ground truth is a 225-element array.
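For the ground truth side, a minimal Python sketch of loading one 225-element ground-truth file per input file. It assumes each ground-truth file is plain text with values separated by whitespace or commas, and that it shares its input file's stem (e.g. `sample_001.txt` for `sample_001.bin`). The file layout, the naming scheme and the helper names `load_ground_truth` / `pair_inputs_with_ground_truth` are hypothetical, not something the Intel FPGA AI Suite tools prescribe.

```python
import re
from pathlib import Path

EXPECTED_LEN = 225  # each ground truth is a 225-element array


def load_ground_truth(path):
    """Parse a text file of numbers separated by whitespace and/or commas."""
    text = Path(path).read_text()
    values = [float(tok) for tok in re.split(r"[\s,]+", text.strip()) if tok]
    if len(values) != EXPECTED_LEN:
        raise ValueError(f"{path}: expected {EXPECTED_LEN} values, got {len(values)}")
    return values


def pair_inputs_with_ground_truth(input_dir, gt_dir, gt_suffix=".txt"):
    """Hypothetical naming scheme: the ground truth file shares the input file's stem."""
    pairs = []
    for inp in sorted(Path(input_dir).iterdir()):
        if not inp.is_file():
            continue
        gt = Path(gt_dir) / (inp.stem + gt_suffix)
        if not gt.exists():
            raise FileNotFoundError(f"no ground truth for {inp.name}")
        pairs.append((inp, load_ground_truth(gt)))
    return pairs
```

Validating the length up front catches a mismatched or truncated ground-truth file before any comparison is made.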

Is there any additional information or guide on running a custom model with Intel FPGA AI Suite?

Thank you in advance

Hi @JohnT_Intel,

That application note only describes the process of compiling a graph from a custom NN and addresses potential issues during compilation. I have already compiled the graph successfully; the question now is how to perform inference with the custom graph using the Intel FPGA AI Suite IP. Is this covered in another AN or user guide?

I read in "Intel® FPGA AI Suite: PCIe-based Design Example User Guide", which is not aimed at the device I am using, that there are two different tools: the OpenVINO™ FPGA Runtime Plugin and the Intel FPGA AI Suite Runtime. The document explains the classes both tools provide, but I cannot find any information about how to modify them for the needs of a custom NN, or how to compile or deploy the NN with them. Is there any guideline?

Hi,

Once you have completed the flow in https://www.intel.com/content/www/us/en/docs/programmable/777190/current/example-1-customized-resnet-18-model.html, you can use the generated IR file with OpenVINO. It will run the same way as the example design.

Hello,

The IR and the compiled graph were successfully obtained. The question is how to run the model. In the S2M example, image_streaming_app and streaming_inference_app are executed. The problems are:

- The input data must be a 224x224x3 BGR .bmp image, while my NN input data is a 3-channel float array. The NN was trained this way and the IR and graph were generated accordingly. I can convert the data to .bmp, but my input size is 360x360x3. I can change the width and height with the image_streaming_app flags, but I don't know whether that will work because of the input buffer.

- The output is compared directly with the "/app/categories.txt" file and the top-5 scores are written to result.txt. My output is completely different: I have 225 outputs. How can I get the raw output?
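Converting the float array to a .bmp, as mentioned in the first point, could look like the Python sketch below. It assumes the array is held as nested lists in height x width x channel order with RGB channels, and that values were scaled as `x / 255` during training, so multiplying by 255 recovers 8-bit pixels (if the model was trained with mean/std normalization, that has to be undone first). The function name `float_hwc_to_bmp` is hypothetical. It writes an uncompressed 24-bit BMP: bottom-up rows, BGR byte order, rows padded to a multiple of 4 bytes. Whether image_streaming_app then accepts a 360x360 image depends on the input buffer question raised above.

```python
import struct


def float_hwc_to_bmp(pixels, width, height, path, scale=255.0):
    """Write an H x W x 3 float image (nested lists, RGB order assumed) as a 24-bit BMP.

    Assumes values were normalized as x / 255, so multiplying by `scale`
    recovers 0..255; values outside that range are clipped.
    """
    row_bytes = width * 3
    padding = (4 - row_bytes % 4) % 4  # BMP rows are padded to 4-byte multiples
    image_size = (row_bytes + padding) * height
    file_size = 14 + 40 + image_size

    with open(path, "wb") as f:
        # BITMAPFILEHEADER: signature, file size, two reserved fields, pixel data offset
        f.write(struct.pack("<2sIHHI", b"BM", file_size, 0, 0, 54))
        # BITMAPINFOHEADER: header size, width, height (positive = bottom-up rows),
        # planes, bits per pixel, compression (0 = BI_RGB), image size,
        # x/y pixels per metre, colours used, important colours
        f.write(struct.pack("<IiiHHIIiiII", 40, width, height, 1, 24, 0,
                            image_size, 2835, 2835, 0, 0))
        for y in range(height - 1, -1, -1):  # last image row is stored first
            row = bytearray()
            for x in range(width):
                r, g, b = pixels[y][x]
                for v in (b, g, r):  # BMP stores channels as B, G, R
                    row.append(max(0, min(255, round(v * scale))))
            row.extend(b"\x00" * padding)
            f.write(row)
```

For a 360x360 input each row is 1080 bytes, already a multiple of 4, so no padding is added.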

The other example, the M2M example, uses the dla_benchmark app, and the problem is the same: how to change the input or use a different input size, and how to read the output.
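The difference between what the example applications report and what a custom model needs can be illustrated in a few lines of Python (this is not the C++ code used by dla_benchmark, and the helper names are hypothetical): a classification post-processing step keeps only the top-k indices, whereas a model with 225 outputs needs the whole vector written out in order.

```python
def top_k(scores, k=5):
    """Classification-style post-processing: keep only the k highest-scoring indices."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [(i, scores[i]) for i in order[:k]]


def write_raw_output(scores, path):
    """What a custom model needs instead: every output value, in order, one per line."""
    with open(path, "w") as f:
        for value in scores:
            f.write(f"{value!r}\n")  # repr() round-trips a float exactly


def read_raw_output(path):
    """Read back a file written by write_raw_output."""
    with open(path) as f:
        return [float(line) for line in f if line.strip()]
```

Writing every value keeps the output index aligned with the ground-truth index, which top-k reduction discards.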

Hi @JohnT_Intel,

Yes, that application, as well as dla_benchmark, is built for the given ResNet-50 example and is not useful for a custom NN. The application reads the output from the output buffer and processes it to return the top-5 scores according to the "categories.txt" file, so the raw output data cannot be read. Is there any guideline or AN that explains how to build a similar application for custom purposes?

Hi,

ResNet and a custom NN each have their own architecture, which determines how data is passed to the AI Suite IP. You will need to implement the interface so that it runs your custom NN architecture correctly on the AI Suite IP.

Hi,

Can you walk me through how you created the design? Are you running the application through the ARM processor or over PCIe?

Hello @JohnT_Intel

I'm using an Intel Arria 10 SoC FPGA, so I'm running the application on the ARM processor.

Hi,

Can you describe how you generated the FPGA bitstream? Can you also share the input files so that I can reproduce the issue on my side?

Hi @JohnT_Intel

There is no custom bitstream. The bitstream was compiled following the instructions in the Intel FPGA AI Suite SoC Design Example Guide, specifically in section "3.3.2 Building the FPGA Bitstreams." The compilation was performed using the dla_build_example_design.py script with the following parameters:

```shell
dla_build_example_design.py \
  -ed 4_A10_S2M \
  -n 1 \
  -a $COREDLA_ROOT/example_architectures/A10_Performance.arch \
  --build \
  --build-dir $COREDLA_WORK/a10_perf_bitstream \
  --output-dir $COREDLA_WORK/a10_perf_bitstream
```

Which input do you need? The TF model, the IR model, the compiled graph, input data...?

Hi,

Can you confirm whether the custom NN is able to run on the current architecture? If not, you will need to generate a bitstream that is suitable for your NN.

Hello @JohnT_Intel,

I am able to run the model, but the input is expected to be U8 while my NN was trained with normalized input. In addition, I would like to read the raw results, because the provided applications (dla_benchmark and streaming_inference_app) give a processed output specific to the ResNet-50 classification problem.

What changes do you suggest?
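On the U8 vs. normalized input point: if the network was trained on inputs normalized per channel as `x_norm = (x / 255 - mean) / std`, the same normalization can be written entirely in the 0..255 domain as `x_norm = (x - 255*mean) / (255*std)`. The Python sketch below computes those per-channel constants and checks the identity; the helper names are hypothetical and the mean/std numbers in the test are placeholders, not the poster's values. OpenVINO's Model Optimizer applies per-channel `--mean_values` (subtracted) and `--scale_values` (divided) when generating an IR, which may let the IR accept U8 pixels directly; whether that fits this graph and the AI Suite flow is an assumption to verify.

```python
def fold_normalization(mean, std):
    """Convert per-channel mean/std defined on [0, 1] inputs into constants on 0..255.

    Assumes training used x_norm = (x / 255 - mean) / std for each channel.
    Returns (mean_values, scale_values) such that
    x_norm = (x - mean_values) / scale_values for raw U8 pixels x.
    """
    mean_values = [255.0 * m for m in mean]
    scale_values = [255.0 * s for s in std]
    return mean_values, scale_values


def normalize_u8(pixel, mean_values, scale_values):
    """Apply the folded normalization to one U8 pixel (one value per channel)."""
    return [(x - m) / s for x, m, s in zip(pixel, mean_values, scale_values)]


def fmt(values):
    """Format a per-channel list in bracketed form, e.g. [123.675,116.28,103.53]."""
    return "[" + ",".join(f"{v:g}" for v in values) + "]"
```

Keep the channel order of these constants consistent with the channel order the model actually receives (RGB vs. BGR).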

Hi,

Can you check whether the file you use to run OpenVINO, such as the prototxt, has been updated?

Hello @JohnT_Intel,

I execute the graph compiled for the FPGA (the .bin file).

Hi,

How do you run the OpenVINO application?

Hello @JohnT_Intel,

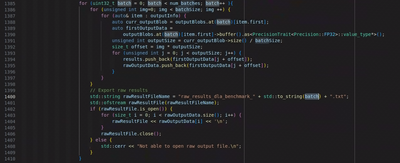

I modified the dla_benchmark application so that it loads the architecture file and the compiled graph. I read rawOutputData and write it to a txt file, then compute the accuracy from this raw output data.
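The thread does not say which accuracy metric is computed from the raw output, so the Python sketch below uses two illustrative choices for a 225-element output: mean absolute error, and the fraction of elements within a tolerance of the ground truth. The metrics, the default tolerance and the helper names are all assumptions. A tolerance is used instead of exact equality because outputs computed at a different numeric precision from the training framework generally will not match bit-for-bit.

```python
def compare_outputs(raw, truth, tol=1e-2):
    """Compare one raw output vector with its ground truth.

    Returns (mean absolute error, fraction of elements within `tol`).
    Both metrics and the default tolerance are illustrative choices.
    """
    if len(raw) != len(truth):
        raise ValueError(f"length mismatch: {len(raw)} vs {len(truth)}")
    if not raw:
        raise ValueError("empty output vector")
    errors = [abs(r - t) for r, t in zip(raw, truth)]
    mae = sum(errors) / len(errors)
    within = sum(e <= tol for e in errors) / len(errors)
    return mae, within


def evaluate(pairs, tol=1e-2):
    """Average the per-sample metrics over (raw_output, ground_truth) pairs."""
    results = [compare_outputs(raw, truth, tol) for raw, truth in pairs]
    if not results:
        raise ValueError("no samples to evaluate")
    n = len(results)
    return sum(m for m, _ in results) / n, sum(w for _, w in results) / n
```

Each `(raw_output, ground_truth)` pair would come from one line-per-value raw output file and the matching ground-truth file for the same input.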

Hi,

What about the model optimization? Did you generate it based on the new architecture?
