Hello,

I'm using Intel FPGA AI Suite 2023.2 on an Ubuntu 20.04 host computer and trying to run inference with a custom CNN on an Intel Arria 10 SoC FPGA.

I have followed the Intel FPGA AI Suite SoC Design Example Guide, and I'm able to compile the Intel FPGA AI Suite IP and run the M2M and S2M examples.

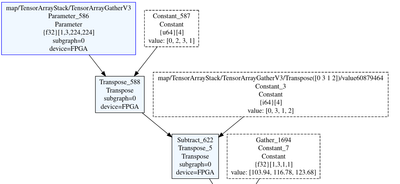

I have a question regarding the input layer data type. In the example network, resnet-50-tf, the input layer appears to be FP32 [1,3,224,224] in the .dot file obtained after compiling the graph (see screenshot below).

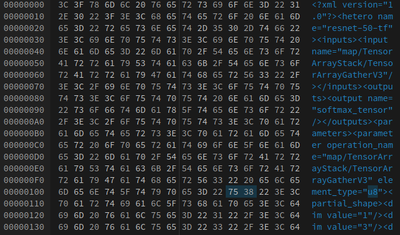
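The input precision can also be cross-checked directly in the OpenVINO IR .xml that is passed to dla_compiler, independently of the .dot file. The following is a minimal sketch, assuming the IR describes each network input as a `Parameter` layer whose `<data>` element carries `element_type` and `shape` attributes. The embedded XML is a made-up, heavily trimmed fragment for illustration, not the actual resnet-50-tf IR, and `input_precisions` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed IR fragment used only to make the sketch runnable.
SAMPLE_IR = """<net name="example" version="11">
  <layers>
    <layer id="0" name="input" type="Parameter" version="opset1">
      <data shape="1,3,224,224" element_type="f32"/>
      <output>
        <port id="0" precision="FP32"/>
      </output>
    </layer>
    <layer id="1" name="conv1" type="Convolution" version="opset1"/>
  </layers>
</net>
"""


def input_precisions(ir_xml_text):
    """Return {input_name: (element_type, shape)} for every Parameter layer."""
    root = ET.fromstring(ir_xml_text)
    result = {}
    for layer in root.iter("layer"):
        # Network inputs are assumed to be represented as Parameter layers.
        if layer.get("type") != "Parameter":
            continue
        data = layer.find("data")
        if data is None:
            continue
        shape = [int(d) for d in data.get("shape", "").split(",") if d]
        result[layer.get("name")] = (data.get("element_type"), shape)
    return result


print(input_precisions(SAMPLE_IR))
```

For a real model, the text of the .xml file would be read from disk and passed to the same function. If it reports `f32` while the compiled .bin reports U8, the change is happening during compilation rather than during model conversion.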

However, when running the dla_benchmark example, I noticed that a U8 input data type was detected. Reading the .bin file of the compiled graph, from which dla_benchmark extracts this information, shows that the input data type is U8 (see screenshot below).

The graph was compiled according to the Intel FPGA AI Suite SoC Design Example Guide using:

    omz_converter --name resnet-50-tf \
        --download_dir $COREDLA_WORK/demo/models/ \
        --output_dir $COREDLA_WORK/demo/models/

In addition, I compiled my custom NN with dla_compiler and got the same result: the input layer is FP32 in the .dot file and U8 in the .bin file.

    dla_compiler \
        --march "{path_archPath}" \
        --network-file "{path_xmlPath}" \
        --o "{path_binPath}" \
        --foutput-format=open_vino_hetero \
        --fplugin "HETERO:FPGA,CPU" \
        --fanalyze-performance \
        --fdump-performance-report \
        --fanalyze-area \
        --fdump-area-report
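Because the `{path_...}` placeholders suggest the command is generated from a script, the same invocation can be assembled as an argument list, which avoids quoting problems with values such as `HETERO:FPGA,CPU`. This is a minimal sketch that uses only the flags quoted above. The function name `build_dla_compiler_cmd` and the example paths are hypothetical, and the sketch only builds and prints the command without running it.

```python
import shlex


def build_dla_compiler_cmd(arch_path, xml_path, bin_path):
    """Build the dla_compiler argument list using the flags from the post."""
    return [
        "dla_compiler",
        "--march", arch_path,
        "--network-file", xml_path,
        "--o", bin_path,
        "--foutput-format=open_vino_hetero",
        "--fplugin", "HETERO:FPGA,CPU",
        "--fanalyze-performance",
        "--fdump-performance-report",
        "--fanalyze-area",
        "--fdump-area-report",
    ]


# Hypothetical paths, for illustration only.
cmd = build_dla_compiler_cmd(
    "/path/to/A10_Performance.arch",
    "/path/to/model.xml",
    "/path/to/model.bin",
)
print(shlex.join(cmd))
```

On a host where the tool is installed, the list could be passed to `subprocess.run(cmd, check=True)` so that a failed compilation raises an error instead of going unnoticed.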

Compilation was performed for the A10_Performance.arch example architecture in both cases.

In addition, the input precision should be reported in the input_transform_dump report, but it is not.

Two questions:

- Why is the input data type changed?

- Is it possible to compile the graph with an FP32 input data type?

Thank you in advance

Hello @JohnT_Intel,

I would prefer to handle these issues in separate threads, as they seem to have different root causes.

Hello @JohnT_Intel,

Is there any news? The same issue is present when importing from an ONNX model.

The ONNX model is converted to an IR model, and the input layer is then detected as a U8 layer in the compiled graph. It should be FP32 instead.

Hello,

It seems to be fixed in Intel FPGA AI Suite 2023.3.

In Intel FPGA AI Suite 2023.2, the input data type is not modified by using the "--bin-data" dla_compiler flag.
