
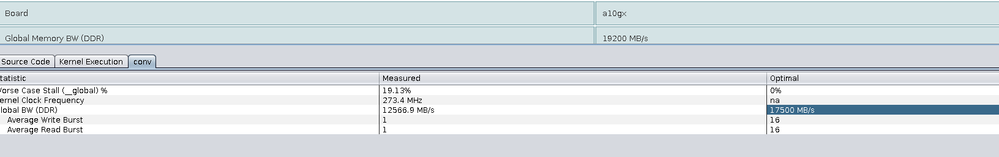

I am looking at the report generated by compiling my OpenCL kernel for an Intel FPGA. In that report, the average write burst and read burst sizes are both measured as 1, while the optimal possible value is 16. I believe larger read bursts would help improve the design, but I am not sure how to achieve them. Any suggestions would be really helpful.

---

Unless you post your kernel code, it is difficult to recommend ways to improve the memory throughput based on profiling results alone.

---

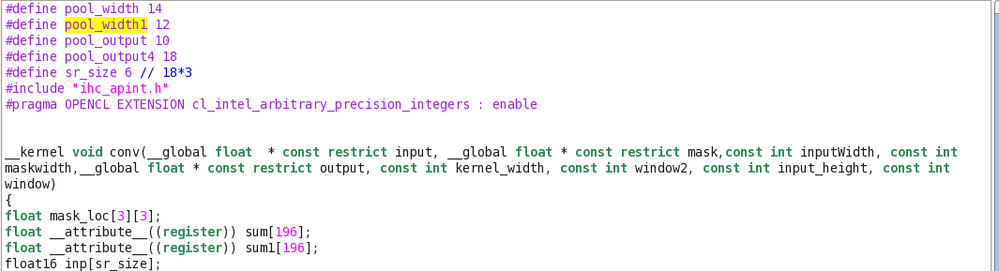

Okay, I am sharing it.

---

Can you also post your kernel header/variable declarations? I am not sure which buffers are local and which are global. Note that the system viewer tab in the HTML report provides invaluable details about the memory ports and their widths, which can help considerably in debugging memory performance.

---

Unless I am missing something here, you should get one 512-bit read port for input, one 512-bit write port for output and one 128-bit port for the mask. Assuming that your board has two banks of DDR3/4 memory, this should give you relatively good memory throughput. Can you check the system viewer tab in the HTML report to make sure the compiler is correctly coalescing the accesses? You can also archive the "reports" folder and post it here, and I will take a look at it.

P.S. Can you post your board model?

---

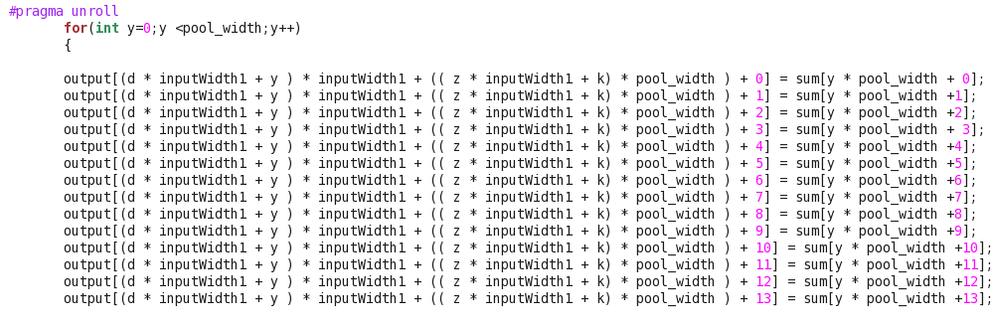

I am getting one 512-bit read port, and the mask port is also 512 bits because I am unrolling the inner and outer mask loops by a factor of 3x3, so I read 32*9 bits; the compiler generated one 512-bit port for that. For the output, because I unrolled by a factor of 14, I am getting 14 512-bit ports, each reported as "burst-coalesced write-ack non-aligned". I am assuming this means the accesses are coalesced. Is that a correct assumption?
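A quick way to see why 9 floats end up on a 512-bit port: 9 × 32 = 288 bits, and if the compiler sizes each coalesced access to a power-of-two width (an assumption here, though it is consistent with the 512-bit mask port in this report), 288 bits rounds up to 512. The hypothetical helper below only does this arithmetic; it does not query the compiler.

```python
def port_width_bits(num_elements: int, elem_bits: int = 32) -> tuple[int, int]:
    """Return (bits actually needed, assumed power-of-two port width).

    Assumption: a coalesced access is rounded up to the next power-of-two
    width. This models the arithmetic only, not the real compiler.
    """
    needed = num_elements * elem_bits
    width = 1
    while width < needed:
        width *= 2
    return needed, width


for n in (4, 8, 9, 16):
    needed, width = port_width_bits(n)
    print(f"{n:2d} floats: {needed:3d} bits needed -> {width}-bit port "
          f"({width - needed} bits unused)")
```

Under this assumption, a 3x3 window leaves 224 of the 512 bits unused on every access, while 4, 8 or 16 floats fill their ports exactly.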

The profiler reports low occupancy and utilization for the output. The burst size I get is 1, out of a possible 16, for both the read and write ports. Do you know how burst reads and writes are controlled, or do you have any suggestions on how I can improve them? I think that could also be a way to improve the results.

My board is an Arria 10 with one DDR4 bank.

I have also attached my reports; you can take a look at them.

---

The report makes it much easier to understand what is happening. Basically, your memory performance is poor because you are putting too much pressure on the memory bus. Specifically, unrolling the "y" loop over the output write is unnecessary: the addresses are not consecutive over "y", so you end up with too many wide memory ports competing with each other for the memory bus. Furthermore, since you are using the reference board, which has only one bank of DDR4 memory, your memory bandwidth is limited to ~17 GB/s. A single 512-bit access per iteration is enough to saturate the memory bandwidth in this case; your kernel, however, has 14 such accesses. I would recommend using a vector size of 8 instead of 16 and not unrolling the "y" loop over the output writes. This will result in two 256-bit accesses, one for input and one for output. I assume the mask is read only once, so it shouldn't cause much contention on the bus.
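The oversubscription argument can be checked with simple arithmetic: a port that issues one access per cycle requests (width / 8) bytes × f_max. The sketch below assumes a hypothetical 250 MHz kernel clock and the ~17 GB/s single-bank DDR4 figure quoted above, and it ignores the mask port; substitute your own f_max and memory speed.

```python
PEAK_GBPS = 17.0   # single DDR4 bank, figure quoted in the reply above
FMAX_MHZ = 250.0   # hypothetical kernel clock


def requested_gbps(num_ports: int, port_bits: int, fmax_mhz: float) -> float:
    """Bandwidth the kernel could request if every port issued one access per cycle."""
    bytes_per_cycle = num_ports * port_bits / 8
    return bytes_per_cycle * fmax_mhz * 1e6 / 1e9


configs = {
    "14 x 512-bit output ports (y loop unrolled)": (14, 512),
    "1 x 512-bit access": (1, 512),
    "2 x 256-bit accesses (float8 in + float8 out)": (2, 256),
}
for name, (ports, bits) in configs.items():
    demand = requested_gbps(ports, bits, FMAX_MHZ)
    print(f"{name}: {demand:6.1f} GB/s requested, "
          f"{demand / PEAK_GBPS:4.1f}x the available bandwidth")
```

At this assumed clock, the 14 output ports alone ask for roughly 13x what one DDR4 bank can deliver, whereas one 512-bit access or two 256-bit accesses request about the same amount as the bank can supply.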

---

To get a low stall rate, I am now using float8 for both reading and writing. The stall rate improved and is now under 20%, but I am still not able to reach the optimal write and read burst size of 16. Can you suggest something from that perspective?

I have attached my report and the profiler results I am getting.

---

I cannot follow the whole issue, as I am quite new here.

I have had more success (with regard to write bandwidth) by declaring dependencies using #pragma ivdep safelen(numberOfIterations), vectorizing, and letting the compiler organize the pipeline, than by manually parallelizing the kernel.

Hope it can help.

---

Hey, I have followed all the methods you suggested; thanks for that. What I need now is more information on how to increase the read and write burst sizes, which should help improve kernel performance. Any suggestions would be really helpful.

---

What the profiler reports is really not that important as long as you are saturating the memory bandwidth. Calculate how much data you are moving in and out of the FPGA (to/from external memory), divide it by the kernel run time, and compare the result against the theoretical peak throughput of the external memory. If you are getting at least 70-80% of the memory throughput, you are at near-optimal performance.
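The check described above, as a small sketch. The theoretical peak of one 64-bit DDR4 interface is its transfer rate times 8 bytes. The transfer rates used here (2133 and 2400 MT/s, which give roughly the 17 and 19 GB/s figures mentioned in this thread) are assumptions, and so are the byte counts and run time; use your board's actual DDR4 speed grade and your measured numbers.

```python
def ddr_peak_gbps(transfer_mts: float, bus_bits: int = 64) -> float:
    """Theoretical peak of one DDR interface: transfers/s x bytes per transfer."""
    return transfer_mts * 1e6 * (bus_bits / 8) / 1e9


def achieved_gbps(bytes_read: int, bytes_written: int, runtime_s: float) -> float:
    """Total external-memory traffic divided by kernel run time."""
    return (bytes_read + bytes_written) / runtime_s / 1e9


# Hypothetical run: 600 MB read + 600 MB written in 100 ms.
achieved = achieved_gbps(600_000_000, 600_000_000, 0.100)
for mts in (2133, 2400):
    peak = ddr_peak_gbps(mts)
    print(f"DDR4-{mts}: peak {peak:.1f} GB/s, achieved {achieved:.1f} GB/s "
          f"-> {100 * achieved / peak:.0f}% of peak")
```

The same achieved figure gives a noticeably different percentage depending on which peak it is compared against, so it is worth confirming the memory speed of the board before judging efficiency.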

---

I have calculated the memory throughput, and it turns out I am using about 50% of the measured bandwidth. For example, in my case I am achieving 12 GB/s, and measuring the throughput gives me ~50% efficiency, which matches the memory efficiency reported by the profiler. However, the theoretical external memory bandwidth is 19 GB/s, of which I am able to utilize only 30%. I am not sure how to improve on that. I have 8 read and 8 write ports, so shouldn't memory contention be minimal?

Do you have any suggestions?

Also, when I keep the indexing simpler (e.g. accessing smaller data), I get a low stall rate, but when the indexing is a bit more complex, the stall rate increases, even though I am accessing new data each time. For example, data[a*b + c] has a much lower stall rate than data[a*b + c*d + e*f + g]. Do you know any way to improve that? My kernel needs the more complex indexing.

---

The complexity of the indexing should have no effect, since the index calculation is pipelined. What can make a large difference is alignment: the starting address of the wide accesses must be at least 256-bit aligned, or else effective throughput will be reduced. The difference you are seeing could also be due to a difference in the operating frequency of your kernels. What is the operating frequency with and without profiling enabled?
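A minimal sketch of the alignment condition, assuming 32-bit floats, a 256-bit (32-byte) access such as a float8, and a buffer base address that is itself 32-byte aligned (an assumption; check how your buffers are allocated). With a row-major index such as row * width + col, every access start is aligned only if both the row width and any constant offset are multiples of 8 elements. The widths and offsets below are hypothetical.

```python
ACCESS_BITS = 256                              # one float8 access
ELEM_BYTES = 4                                 # sizeof(float)
ALIGN_ELEMS = ACCESS_BITS // 8 // ELEM_BYTES   # 8 floats per 256-bit access


def start_is_aligned(elem_index: int, base_addr: int = 0) -> bool:
    """True if the byte address of elem_index falls on a 256-bit boundary.

    base_addr is assumed to be 32-byte aligned (0 here for simplicity).
    """
    return (base_addr + elem_index * ELEM_BYTES) % (ACCESS_BITS // 8) == 0


def aligned_fraction(width: int, rows: int, col_offset: int = 0) -> float:
    """Fraction of float8 accesses, stepping along each row, whose start is aligned."""
    starts = [r * width + c + col_offset
              for r in range(rows)
              for c in range(0, width - ALIGN_ELEMS + 1, ALIGN_ELEMS)]
    return sum(start_is_aligned(s) for s in starts) / len(starts)


print(aligned_fraction(width=224, rows=4))                # width is a multiple of 8
print(aligned_fraction(width=222, rows=4))                # width is not a multiple of 8
print(aligned_fraction(width=224, rows=4, col_offset=1))  # extra "+ g" offset of 1
```

In this model, a longer index expression is harmless as long as every term keeps the start on an 8-element boundary; a single unaligned term (such as a "+ g" of 1) makes every access unaligned.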

I have measured the memory controller efficiency to be around 85-90% (i.e. the peak practical throughput for you will be 16-17 GB/s). In your case, since your board has only one memory bank, full performance can be achieved if your kernel has *only one 512-bit access* (either read or write), but such a kernel has little practical use. Two 256-bit accesses (plus another for the mask) will always be slower than that. You can try increasing the vector size to 16, which might increase memory throughput (but also the stall rate) by oversubscribing the memory interface, especially if your kernel is running below 266 MHz.
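The 266 MHz threshold appears to follow from dividing the practical bandwidth (~17 GB/s) by the 64 bytes a 512-bit-per-cycle kernel moves each cycle. A minimal sketch, using the 85-90% controller efficiency quoted above and assuming a 19.2 GB/s peak (DDR4-2400, one 64-bit bank; substitute your board's value). The mask port is ignored.

```python
def saturation_fmax_mhz(bandwidth_gbps: float, bits_per_cycle: int) -> float:
    """Clock at which moving bits_per_cycle every cycle consumes bandwidth_gbps."""
    bytes_per_cycle = bits_per_cycle / 8
    return bandwidth_gbps * 1e9 / bytes_per_cycle / 1e6


PEAK_GBPS = 19.2  # assumed DDR4-2400, one 64-bit bank
for eff in (0.85, 0.90):
    practical = PEAK_GBPS * eff
    for bits, label in ((512, "vector size 8: 256-bit read + 256-bit write"),
                        (1024, "vector size 16: 512-bit read + 512-bit write")):
        print(f"{eff:.0%} efficiency ({practical:.1f} GB/s), {label}: "
              f"saturates at {saturation_fmax_mhz(practical, bits):.0f} MHz")
```

Under these assumptions, a vector-size-8 kernel only saturates the interface at roughly 255-270 MHz, while vector size 16 reaches the same bandwidth at about half that clock, which is the 2x oversubscription case.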

---

Regarding index calculation: because I am doing it inside the array subscript, I am not sure whether it will be pipelined. Is it correct to say that inp[a*b + c*d + e] also results in a pipelined index calculation?

By achieving 70-80% utilization, you meant in your own test cases, right?

Currently I am getting my results with the profiler enabled only, at an operating frequency between 255 and 270 MHz; in previous cases, there was not much difference without profiling. Should I expect a major performance impact (e.g. 10-20 ms) from compiling the code without the profiler?

I got confused by the comment above, because earlier in this thread you suggested having 8 read and 8 write ports instead of 16 to reduce memory contention. Can you please clarify that?

Also, based on the previous suggestion, I changed to 8 read and 8 write ports, and I am able to achieve better performance for similar resource utilization.

---

If the compiler report says the loop is pipelined, then index calculation is also pipelined.

70-80% is optimal for realistic cases based on my own testing. 85-90% is for perfect cases (one 512-bit access per memory bank with interleaving disabled).

If you do not see much difference in operating frequency without profiling, then performance difference will also be minimal. The profiler does not change loop II as far as I have seen and hence, the only factor causing performance difference between enabling profiling or not should be operating frequency.

Previously you had 14x 512-bit accesses, which was excessive. One bank of DDR memory should saturate with a total of 512 bits read/written per iteration, but the memory controller/interface is far from optimal. I have found that oversubscribing the memory interface (by 2x, not 14x) can sometimes improve performance a little, but certainly not in proportion to the increase in resource usage.

---

Thanks for the reply. In my case, I am restricting the design to 8 read and 8 write ports, as I get better performance that way. However, I am getting very poor write performance, with a write bandwidth of only a few hundred MB/s, while for reading I get around 12 GB/s. Is there any optimization technique you can suggest to improve my write bandwidth?

Also, for reading, although I get 12 GB/s of bandwidth, the profiler reports 60% efficiency. Do you know what that could mean?

---

Check this part of the guide where it is explained how to interpret the information obtained from the profiler:

https://www.intel.com/content/www/us/en/programmable/documentation/mwh1391807516407.html#ewa1399325588941

---

My question is related to this topic, which is why I am asking here. In my OpenCL design I have a channel between two kernels. In the generated report, I see that the channel write starts at cycle 40 and the channel read at cycle 4, which leads to stalling on the read end. This affects performance, as the stall rate caused by the channel is 50%. But I am reading the same number of times as I am writing, so it should be balanced. Do you have any suggestions on how to improve this?

---

Channels are pretty much never the source of a bottleneck. Channel stalls are almost always stalls propagated from the top of the pipeline, very likely from global memory operations. Since the operations are pipelined, the start cycle of an operation doesn't matter: the start latency is amortized if the pipeline is kept busy for long enough. Stall rates for channel reads and writes do not need to be balanced. In fact, they definitely won't be balanced if the source of the stall is somewhere else. For example, if the source of the stall is global memory, channel writes will never stall since the channel never becomes full, but channel reads will stall since the channel is faster than global memory and frequently becomes empty while waiting for data to arrive from global memory. The behavior you are seeing is completely normal. The document I posted above also describes how to find the source of stalls in a kernel.
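The behavior described above can be reproduced with a toy cycle-by-cycle model: a producer kernel receives data from slow global memory and forwards it through a channel to a consumer kernel. This is a deliberately simplified sketch (at most one item per cycle, a hypothetical channel depth and memory rate), not a model of the real hardware. With memory delivering one item every 2 cycles, the channel write never stalls while the read stalls half the time, which resembles the ~50% channel stall rate in the question. Moving the bottleneck downstream flips which side stalls.

```python
from collections import deque


def simulate_channel(cycles: int, depth: int,
                     producer_period: int, consumer_period: int = 1) -> tuple[int, int]:
    """Return (channel write stalls, channel read stalls) over `cycles` cycles.

    producer_period: cycles between items arriving from (slow) global memory.
    consumer_period: cycles between read attempts by the consumer kernel.
    """
    channel = deque()
    pending = None  # item fetched from memory but not yet written to the channel
    write_stalls = read_stalls = 0
    for cycle in range(cycles):
        # Producer: fetch a new item only once the previous one has been written.
        if pending is None and cycle % producer_period == 0:
            pending = cycle
        if pending is not None:
            if len(channel) < depth:
                channel.append(pending)
                pending = None
            else:
                write_stalls += 1  # channel full
        # Consumer: read if data is available, otherwise stall.
        if cycle % consumer_period == 0:
            if channel:
                channel.popleft()
            else:
                read_stalls += 1  # channel empty
    return write_stalls, read_stalls


# Slow global memory upstream: writes never stall, reads stall half the time.
print(simulate_channel(1000, depth=8, producer_period=2))
# Slow consumer downstream: the channel fills up and the writes stall instead.
print(simulate_channel(1000, depth=8, producer_period=1, consumer_period=2))
```

In both runs the channel itself is never the limiting factor; the stalls only show where the slower stage sits relative to it.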
