
Hi guys

I've run into a problem I can't explain, as described in the title:

"My bit depth is reduced from 8 to 6 if I set the monitor resolution to 3440×1440 at 144 Hz."

Here is my hardware and software:

- Intel UHD 770 with the latest driver (30.0.101.1404) and the latest Intel GCC (1.100.3407.0)
- Mi 34 ultrawide monitor, supporting 3440×1440 at up to 144 Hz over DP 1.4
- Asus B660A Gaming Wifi D4 motherboard (DP 1.4 on the rear I/O)
- Windows 11 with the latest updates

1. If I set the monitor resolution to 3440×1440 at 144 Hz, the bit depth drops from 8 to 6.

But if I change the refresh rate to 120 Hz, it works fine and shows 8-bit depth in both Windows and the Intel GCC tray.

Can anyone explain this problem?

Is it a hardware limitation, or something else?

2. While looking for details, I found an article saying that bit depth can't be set in Intel GCC over DisplayPort; the display must be connected via HDMI if you want to set it.

Am I understanding this correctly?

Will Intel add this feature for DisplayPort in Intel GCC, the same as for HDMI?

Thank you

---

If, when you raise the refresh rate from 120 to 144 Hz, you see the bit depth drop to 6, that is an indication that the tested link bandwidth (for a pixel depth of 8) was not sustainable. Consequently, the pixel depth was reduced.

Essentially, you should suspect the cable as the culprit. Remember the golden rule: you get what you pay for (or don't, as the case may be). My other rule is: if the cable came with the monitor, it is junk and should be discarded.
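A rough bandwidth calculation is consistent with this explanation. The sketch below is only an approximation: it assumes reduced-blanking timing loosely modeled on CVT-RB2 (a fixed 80-pixel horizontal blank and at least 460 µs of vertical blank), a 4-lane DP 1.4 link with 8b/10b encoding, RGB output, and no DSC. The monitor's actual EDID timing may differ, and the helper names are hypothetical. Under these assumptions, 3440×1440 at 144 Hz with 8 bits per channel needs roughly 18.8 Gbit/s, more than the roughly 17.3 Gbit/s usable on HBR2 but well within HBR3. Meanwhile, 6 bpc at 144 Hz and 8 bpc at 120 Hz both fit in HBR2. If the cable cannot hold HBR3 during link training and the link falls back to HBR2, that would match the symptoms described.

```python
import math

# DisplayPort per-lane raw link rates in Gbit/s (RBR, HBR, HBR2, HBR3).
LINK_RATES_GBPS = {"RBR": 1.62, "HBR": 2.7, "HBR2": 5.4, "HBR3": 8.1}


def approx_pixel_clock_hz(h_active, v_active, refresh_hz,
                          h_blank=80, min_vblank_us=460.0):
    """Rough pixel clock under reduced-blanking-style assumptions.

    Assumption: fixed 80-pixel horizontal blank and at least 460 us of
    vertical blank (CVT-RB2-like). Real EDID timings may differ.
    """
    frame_us = 1e6 / refresh_hz
    line_us = (frame_us - min_vblank_us) / v_active  # time per active line
    v_blank_lines = math.ceil(min_vblank_us / line_us)
    h_total = h_active + h_blank
    v_total = v_active + v_blank_lines
    return h_total * v_total * refresh_hz


def required_gbps(h, v, hz, bits_per_channel):
    # RGB output: three color channels per pixel.
    return approx_pixel_clock_hz(h, v, hz) * 3 * bits_per_channel / 1e9


def usable_gbps(rate_name, lanes=4):
    # DP 1.x uses 8b/10b encoding, so only 80% of the raw rate carries data.
    return LINK_RATES_GBPS[rate_name] * lanes * 0.8


if __name__ == "__main__":
    for hz in (120, 144):
        for bpc in (8, 6):
            need = required_gbps(3440, 1440, hz, bpc)
            fits = [r for r in ("HBR2", "HBR3") if need <= usable_gbps(r)]
            print(f"{hz} Hz, {bpc} bpc: {need:.2f} Gbit/s, fits: {fits}")
```

The fixed blanking figures are the main source of error; plugging in the exact timing from the monitor's EDID would give a more precise answer, but the margin between HBR2 and HBR3 is wide enough that the conclusion is unlikely to change.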

Hope this helps,

...S

---

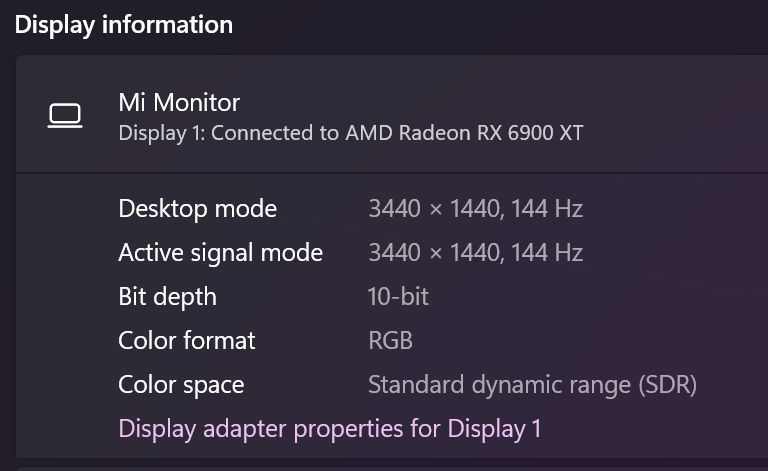

Dude, this cable works fine with my RX 6900 XT.

---

And?

No one ever said that iGFX wasn't pickier than Nvidia or AMD. Look, you want to ignore my input, you go right ahead. If this proves to be the issue, well, ...

...S

---

I'm still waiting for a good answer, or for someone who can explain this problem.

Regards 😋
