My platform details: Ubuntu 18.04.5 LTS (Bionic Beaver), OpenVINO v2021.4.689

I would like to deploy a Darknet model on an NCS2. I converted the .weights file to TensorFlow .pb format using this repo, then used Model Optimizer to convert the TensorFlow model to IR with the following command:

python3 mo.py --framework=tf --data_type=FP16 --saved_model_dir /root/yolov4-416 --output_dir /root/IR --model_name yolov4 --input_shape=[1,608,608,3]

As a result I got three files: .bin, .mapping, and .xml. But when I try to load the model, I get an error:

net = ie.read_network(model=model, weights=wights, init_from_buffer=False)

exec_net = ie.load_network(net, device_name=device, num_requests=2)

Traceback (most recent call last):

File "detector.py", line 219, in <module>

sys.exit(main() or 0)

File "detector.py", line 81, in main

exec_net = ie.load_network(net, device_name=device, num_requests=2)

File "ie_api.pyx", line 372, in openvino.inference_engine.ie_api.IECore.load_network

File "ie_api.pyx", line 390, in openvino.inference_engine.ie_api.IECore.load_network

RuntimeError: Check 'element::Type::merge(inputs_et, inputs_et, get_input_element_type(i))' failed at core/src/op/concat.cpp:50:

While validating node 'v0::Concat StatefulPartitionedCall/functional_1/tf_op_layer_Shape_10/Shape_10 (DynDim/Gather_5760[0]:i64{1}, ConstDim/Constant_5761[0]:i32{1}) -> (i32{2})' with friendly_name 'StatefulPartitionedCall/functional_1/tf_op_layer_Shape_10/Shape_10':

Argument element types are inconsistent.

I would really appreciate any help.

Thanks.
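The error above reports a Concat node whose two inputs have different element types (i64 and i32). One way to look for such nodes in the generated .xml before loading it is sketched below. It is a minimal sketch that assumes the IR v10 layout, where each output port carries a `precision` attribute and connections are listed under `<edges>`. The function name `find_mixed_concat_inputs` and the tiny `SAMPLE_IR` document are hypothetical and only illustrate the idea; they are not taken from the model in this thread.

```python
import xml.etree.ElementTree as ET

# Hypothetical, hand-written IR fragment used only to demonstrate the check.
SAMPLE_IR = """
<net name="sample" version="10">
  <layers>
    <layer id="0" name="dyn_dim" type="Gather" version="opset1">
      <output><port id="0" precision="I64"><dim>1</dim></port></output>
    </layer>
    <layer id="1" name="const_dim" type="Const" version="opset1">
      <output><port id="0" precision="I32"><dim>1</dim></port></output>
    </layer>
    <layer id="2" name="shape_concat" type="Concat" version="opset1">
      <input>
        <port id="0"><dim>1</dim></port>
        <port id="1"><dim>1</dim></port>
      </input>
      <output><port id="2" precision="I32"><dim>2</dim></port></output>
    </layer>
  </layers>
  <edges>
    <edge from-layer="0" from-port="0" to-layer="2" to-port="0"/>
    <edge from-layer="1" from-port="0" to-layer="2" to-port="1"/>
  </edges>
</net>
"""


def find_mixed_concat_inputs(ir_xml_text):
    """Return {concat_name: [input precisions]} for Concat layers whose inputs disagree."""
    root = ET.fromstring(ir_xml_text.strip())

    # (layer id, output port id) -> precision declared on that output port.
    out_precision = {}
    for layer in root.iter("layer"):
        for port in layer.findall("output/port"):
            out_precision[(layer.get("id"), port.get("id"))] = port.get("precision")

    # Destination layer id -> list of (input port index, producer precision).
    incoming = {}
    for edge in root.iter("edge"):
        src = (edge.get("from-layer"), edge.get("from-port"))
        incoming.setdefault(edge.get("to-layer"), []).append(
            (int(edge.get("to-port")), out_precision.get(src))
        )

    mixed = {}
    for layer in root.iter("layer"):
        if layer.get("type") != "Concat":
            continue
        ports = sorted(incoming.get(layer.get("id"), []), key=lambda item: item[0])
        precisions = [precision for _, precision in ports]
        if len(set(precisions)) > 1:
            mixed[layer.get("name")] = precisions
    return mixed


if __name__ == "__main__":
    print(find_mixed_concat_inputs(SAMPLE_IR))
```

To check a real model, the contents of the generated .xml file can be read into a string and passed to the function. This only locates mismatched inputs; it does not repair the IR.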

Hello Oltsym,

Thank you for reaching out to us and thank you for using Intel® Distribution of OpenVINO™ Toolkit!

I downloaded yolov4.weights and converted it into .pb format using the command from this repo. However, when converting the .pb file to IR, I encountered an error: [ FRAMEWORK ERROR ] Cannot load input model: SavedModel format load failure.

For your information, yolo-v4-tf, a public pre-trained model provided by Open Model Zoo, uses the same yolov4.weights file and is converted into IR using converter.py from Model Downloader.

I recommend using the Model Downloader and Model Converter automation tools to download yolov4.weights and convert the model into Intermediate Representation (IR).

The steps to download and convert yolo-v4-tf using Model Downloader and Model Converter are as follows:

cd <INSTALL_DIR>/deployment_tools/open_model_zoo/tools/downloader

python3 downloader.py --name yolo-v4-tf

python3 converter.py --name yolo-v4-tf

By default, the IR files are written to the following directory:

<INSTALL_DIR>/deployment_tools/open_model_zoo/tools/downloader/public/yolo-v4-tf
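Once the conversion finishes, the IR can be loaded with the same `read_network` / `load_network` calls used in the original question. The following is a minimal sketch, not a tested deployment script. It assumes the converter places the files in a per-precision subdirectory (`FP16/yolo-v4-tf.xml` and `.bin`) under the directory above; that layout, and the helper names `ir_paths` and `load_on_device`, are assumptions for illustration. `MYRIAD` is the Inference Engine device name for the Neural Compute Stick 2.

```python
from pathlib import Path


def ir_paths(model_dir, name="yolo-v4-tf", precision="FP16"):
    """Build the .xml/.bin paths, assuming a <model_dir>/<precision>/<name>.* layout."""
    base_dir = Path(model_dir) / precision
    return base_dir / f"{name}.xml", base_dir / f"{name}.bin"


def load_on_device(model_dir, device="MYRIAD", num_requests=2):
    """Read the IR and load it onto the given device (NCS2 by default)."""
    # Imported here so the path helper above works without OpenVINO installed.
    from openvino.inference_engine import IECore

    xml_path, bin_path = ir_paths(model_dir)
    ie = IECore()
    net = ie.read_network(model=str(xml_path), weights=str(bin_path))
    return ie.load_network(net, device_name=device, num_requests=num_requests)


if __name__ == "__main__":
    # Relative to the downloader directory; adjust to your installation.
    xml_path, bin_path = ir_paths("public/yolo-v4-tf")
    print(xml_path, bin_path)
```

If the files turn out to sit directly in the model directory instead, only `ir_paths` needs to change.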

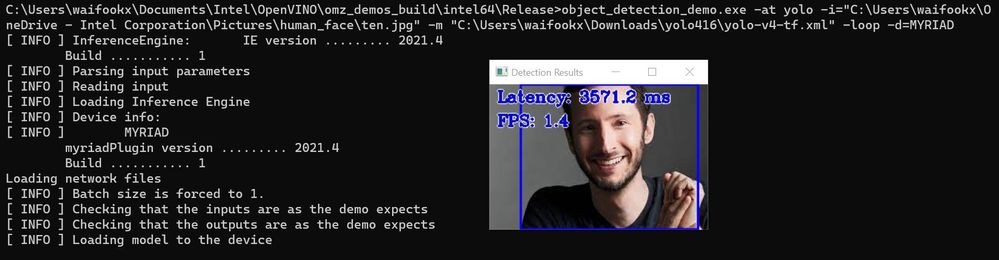

The inference result of running Object Detection C++ Demo with yolo-v4-tf using Intel® Neural Compute Stick 2 is shown as follows:

On another note, could you please share the following information with us for further investigation if the suggestion provided above does not resolve your issue?

· Specific Darknet model

· Demo application / scripts (detector.py)

Regards,

Wan

Hi Oltsym,

Thanks for your question!

If you need any additional information from Intel, please submit a new question as this thread is no longer being monitored.

Regards,

Wan
