
I have a trained Keras/TensorFlow .h5 model that I would like to use for image classification on the Intel Neural Compute Stick 2 attached to a Raspberry Pi 4. I am having trouble finding the right guides: most seem outdated and refer to files or directories that no longer exist when I try to run inference. Additionally, the information on IR conversion and inference is heavily scattered, which makes it hard for me to follow.

I'd like to kindly request a simple step-by-step guide on how to convert a Keras/TensorFlow model to an Intermediate Representation (IR). My model is custom-trained from scratch, and I have checked that its layers are compatible with OpenVINO. I would also like to know how to run a simple image classification example with the same model on the Raspberry Pi 4.


Hi Publio,

Thanks for reaching out to us.

Is your custom model a TensorFlow 2 model? For your information, TensorFlow 2 officially supports two model formats, which are SavedModel and Keras H5 (HDF5).

The steps to convert a Keras H5 model to Intermediate Representation are as follows:

Step 1: Convert Keras H5 model to SavedModel Format

Install dependencies:

cd <INSTALL_DIR>\deployment_tools\model_optimizer\install_prerequisites

install_prerequisites_tf2.bat

Load the model using TensorFlow 2 and serialize it in the SavedModel format. For example:

import tensorflow as tf

model = tf.keras.models.load_model('model.h5')

tf.saved_model.save(model,'model')

Optional: If your Keras H5 model contains a custom layer, the layer class must be passed to the loader when converting to SavedModel format. For example, a model with a custom layer CustomLayer from custom_layer.py is converted as follows:

import tensorflow as tf

from custom_layer import CustomLayer

model = tf.keras.models.load_model('model.h5', custom_objects={'CustomLayer': CustomLayer})

tf.saved_model.save(model,'model')

This generates a SavedModel, which is a directory containing a saved_model.pb file and two subfolders, variables and assets.
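A quick way to confirm that Step 1 produced a usable directory is to inspect its contents before moving on. The sketch below is only an illustration: the helper names `describe_saved_model` and `looks_like_saved_model` are hypothetical, and it assumes that `saved_model.pb` and `variables` are the entries that matter, with `assets` treated as optional.

```python
from pathlib import Path


def describe_saved_model(directory):
    """Report which of the expected SavedModel entries exist in `directory`."""
    root = Path(directory)
    return {
        "saved_model.pb": (root / "saved_model.pb").is_file(),
        "variables": (root / "variables").is_dir(),
        "assets": (root / "assets").is_dir(),
    }


def looks_like_saved_model(directory):
    """Return True when the graph file and the variables folder are both present."""
    found = describe_saved_model(directory)
    # Assumption: `assets` may be missing or empty, so it is not required here.
    return found["saved_model.pb"] and found["variables"]
```

Calling `looks_like_saved_model('model')` after the conversion script above should return True if the directory was written as described.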

Step 2: Convert SavedModel Format to Intermediate Representation

Change directory to <INSTALL_DIR>/deployment_tools/model_optimizer

Run the mo_tf.py script with a path to the SavedModel directory and a writable output directory:

python mo_tf.py --saved_model_dir <SAVED_MODEL_DIRECTORY> --output_dir <OUTPUT_MODEL_DIR>

For more information on converting TensorFlow 2 models, please refer to Convert TensorFlow 2 Models.

On another note, Hello Classification C++ Sample demonstrates how to execute an inference of image classification networks like alexnet and googlenet-v1 using Synchronous Inference Request API. You may refer to Hello Classification C++ Sample as an example to create your own application.

Refer to Use Inference Engine API to Implement Inference Pipeline for step-by-step instructions to implement a typical inference pipeline with the Inference Engine C++ API.

Refer to Open Model Zoo Demos for more demo applications that provide robust application templates to help you implement specific deep learning scenarios.

Regards,

Wan


Hello, thanks for your reply to my question. Currently, I am facing the following problem:

The TensorFlow library was compiled to use AVX instructions, but these aren't available on your machine

I am using a G4560 processor.


Hi Publio,

Thanks for sharing your information.

According to the Advanced Technologies subsection of the Specifications section for the Intel® Pentium® Processor G4560, your processor does not support Intel® AVX2 Instruction Set Extensions.

On another note, according to this thread, the solution is to build TensorFlow from source so that it does not use the AVX instruction set. If you have any issues building TensorFlow from source, you can post your question here.

Regards,

Wan


Hello, I have switched to an i5-11400 and tried running the Model Optimizer again. However, I am getting the errors shown below.

My model is a custom model that uses transfer learning and pre-training from a VGG16 model.

The command I ran was: python mo.py --data_type=FP16 --model_name my_model_vpu --input_model "C:\Users\MyUser\Downloads\frozen_graph.pb" --output_dir "C:\Users\MyUser\Documents\model_output"

And the output is:

Inference Engine version: 2021.4.2-3974-e2a469a3450-releases/2021/4

Model Optimizer version: 2021.4.2-3974-e2a469a3450-releases/2021/4

2022-01-13 20:59:51.957148: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found

2022-01-13 20:59:51.957604: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

C:\Users\publi_l\AppData\Roaming\Python\Python37\site-packages\tensorflow\python\autograph\impl\api.py:22: DeprecationWarning: the imp module is deprecated in favour of importlib; see the module's documentation for alternative uses

import imp

[ ERROR ] Shape [ -1 16 20 256] is not fully defined for output 0 of "x". Use --input_shape with positive integers to override model input shapes.

[ ERROR ] Cannot infer shapes or values for node "x".

[ ERROR ] Not all output shapes were inferred or fully defined for node "x".

For more information please refer to Model Optimizer FAQ, question #40. (https://docs.openvinotoolkit.org/latest/openvino_docs_MO_DG_prepare_model_Model_Optimizer_FAQ.html?question=40#question-40)

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function Parameter.infer at 0x000001B7A94F3F28>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).

[ ERROR ] Run Model Optimizer with --log_level=DEBUG for more information.

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (<class 'extensions.middle.PartialInfer.PartialInfer'>): Stopped shape/value propagation at "x"

I don't understand which part of my model has the shape [ -1 16 20 256]. My model layers are actually:

Model layers:

block1_conv1 (Conv2D) (None, 128, 160, 64)

block1_conv2 (Conv2D) (None, 128, 160, 64)

block1_pool (MaxPooling2D) (None, 64, 80, 64)

block2_conv1 (Conv2D) (None, 64, 80, 128)

block2_conv2 (Conv2D) (None, 64, 80, 128)

block2_pool (MaxPooling2D) (None, 32, 40, 128)

block3_conv1 (Conv2D) (None, 32, 40, 256)

block3_conv2 (Conv2D) (None, 32, 40, 256)

block3_conv3 (Conv2D) (None, 32, 40, 256)

block3_pool (MaxPooling2D) (None, 16, 20, 256)

block4_conv1 (Conv2D) (None, 16, 20, 512)

block4_conv2 (Conv2D) (None, 16, 20, 512)

block4_conv3 (Conv2D) (None, 16, 20, 512)

block4_pool (MaxPooling2D) (None, 8, 10, 512)

block5_conv1 (Conv2D) (None, 8, 10, 512)

block5_conv2 (Conv2D) (None, 8, 10, 512)

block5_conv3 (Conv2D) (None, 8, 10, 512)

block5_pool (MaxPooling2D) (None, 4, 5, 512)

flatten (Flatten) (None, 10240)

dense (Dense) (None, 16)

output (Dense) (None, 1)

Additionally, it has two classes: positive and negative.


Hi Publio,

The error you encountered, [ ERROR ] Shape [ -1 16 20 256] is not fully defined, occurs because the input shape is not fully defined in the TensorFlow model (the batch dimension is -1), so the Model Optimizer cannot convert the model.

Please provide the input shape using the --input_shape command line parameter, or provide the batch size using -b=1.

Refer to General Conversion Parameter and When to Specify Input Shapes in Converting a Model to Intermediate Representation for more information.
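As a rough illustration of the advice above, the following sketch turns a Keras-style shape with an undefined batch dimension into a value for --input_shape and assembles a command line from flags that already appear in this thread. The helper names `keras_shape_to_input_shape` and `build_mo_command` are hypothetical, and a batch size of 1 is an assumption.

```python
def keras_shape_to_input_shape(shape, batch=1):
    """Replace an undefined batch dimension (None or -1) and format for --input_shape."""
    first, *rest = shape
    if any(d is None or d <= 0 for d in rest):
        raise ValueError("only the batch dimension may be undefined")
    if first is None or first <= 0:
        first = batch  # assumption: inference runs on one image at a time
    return "[" + ",".join(str(d) for d in [first, *rest]) + "]"


def build_mo_command(input_model, output_dir, input_shape):
    """Assemble a Model Optimizer call using only flags quoted in this thread."""
    return [
        "python", "mo.py",
        "--input_model", input_model,
        "--input_shape", input_shape,
        "--data_type", "FP16",
        "--output_dir", output_dir,
    ]


shape_arg = keras_shape_to_input_shape((None, 128, 160, 3))
print(shape_arg)  # [1,128,160,3]
print(" ".join(build_mo_command("frozen_graph.pb", "model_output", shape_arg)))
```

The shape is given in the same order the original framework reports it, here height 128, width 160, and 3 channels.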

Regards,

Wan


Hello, I was able to convert my model by specifying the correct input shape. I then started following the steps for hello_classification.py, since I am using Python for my project. Whenever I run the following command:

python3 hello_classification.py -m my_model_vpu.xml -i /home/pi/OpenVino/deployment_tools/inference_engine/samples/python/classification_sample_async/573f0a79-f5f8-465f-9d10-2037595120c4.png -d MYRIAD

The following is output to the screen:

[ INFO ] Creating Inference Engine

[ INFO ] Reading the network: drunk-sober_VPU.xml

[ INFO ] Configuring input and output blobs

[ INFO ] Loading the model to the plugin

[ WARNING ] Image /home/pi/OpenVino/deployment_tools/inference_engine/samples/python/classification_sample_async/573f0a79-f5f8-465f-9d10-2037595120c4.png is resized from (1080, 1420) to (128, 160)

[ INFO ] Starting inference in synchronous mode

E: [global] [ 955824] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_WRITE_REQ

E: [xLink] [ 955825] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 955825] [python3] addEvent:360 Condition failed: event->header.flags.bitField.ack != 1

E: [global] [ 955825] [python3] addEventWithPerf:372 addEvent(event, timeoutMs) method call failed with an error: 3

E: [global] [ 955825] [python3] XLinkReadData:156 Condition failed: (addEventWithPerf(&event, &opTime, 0xFFFFFFFF))

E: [ncAPI] [ 955825] [python3] ncFifoReadElem:3295 Packet reading is failed.

Traceback (most recent call last):

File "hello_classification.py", line 125, in <module>

sys.exit(main())

File "hello_classification.py", line 90, in main

res = exec_net.infer(inputs={input_blob: image})

File "ie_api.pyx", line 1062, in openvino.inference_engine.ie_api.ExecutableNetwork.infer

File "ie_api.pyx", line 1441, in openvino.inference_engine.ie_api.InferRequest.infer

File "ie_api.pyx", line 1463, in openvino.inference_engine.ie_api.InferRequest.infer

RuntimeError: Failed to read output from FIFO: NC_ERROR

E: [global] [ 955857] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_WRITE_REQ

E: [xLink] [ 955857] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 955857] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_WRITE_REQ

E: [xLink] [ 955857] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 956407] [python3] addEvent:360 Condition failed: event->header.flags.bitField.ack != 1

E: [global] [ 956407] [python3] addEventWithPerf:372 addEvent(event, timeoutMs) method call failed with an error: 3

E: [global] [ 956407] [python3] XLinkReadData:156 Condition failed: (addEventWithPerf(&event, &opTime, 0xFFFFFFFF))

E: [ncAPI] [ 956407] [python3] getGraphMonitorResponseValue:1902 XLink error, rc: X_LINK_ERROR

E: [global] [ 956407] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_WRITE_REQ

E: [xLink] [ 956407] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 956407] [python3] addEvent:360 Condition failed: event->header.flags.bitField.ack != 1

E: [global] [ 956407] [python3] addEventWithPerf:372 addEvent(event, timeoutMs) method call failed with an error: 3

E: [global] [ 956407] [python3] XLinkReadData:156 Condition failed: (addEventWithPerf(&event, &opTime, 0xFFFFFFFF))

E: [ncAPI] [ 956407] [python3] getGraphMonitorResponseValue:1902 XLink error, rc: X_LINK_ERROR

E: [global] [ 956407] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_CLOSE_STREAM_REQ

E: [xLink] [ 956407] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 956407] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_WRITE_REQ

E: [xLink] [ 956407] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [global] [ 956408] [python3] addEvent:360 Condition failed: event->header.flags.bitField.ack != 1

E: [global] [ 956408] [python3] addEventWithPerf:372 addEvent(event, timeoutMs) method call failed with an error: 3

E: [global] [ 956408] [python3] XLinkReadData:156 Condition failed: (addEventWithPerf(&event, &opTime, 0xFFFFFFFF))

E: [ncAPI] [ 956408] [python3] getGraphMonitorResponseValue:1902 XLink error, rc: X_LINK_ERROR

E: [global] [ 956413] [Scheduler00Thr] dispatcherEventSend:54 Write failed (header) (err -4) | event XLINK_RESET_REQ

E: [xLink] [ 956413] [Scheduler00Thr] sendEvents:1132 Event sending failed

E: [ncAPI] [ 966414] [python3] ncDeviceClose:1852 Device didn't appear after reboot

Some earlier threads suggest this is related to a faulty connection to the Raspberry Pi or to insufficient power, so I tried running inference with the stick connected directly to the Raspberry Pi and also through a USB hub. In both cases I received the same error. I also checked the connection, and the device appears to be detected.


Hi Publio,

Glad to know you have converted your custom VGG16 model into Intermediate Representation.

For your information, Hello Classification Python Sample has been validated on alexnet and googlenet-v1 only.

On another note, the Classification Python Demo, one of the demo applications from Open Model Zoo, supports vgg16. Could you please try running this application with your custom model?

Regards,

Wan


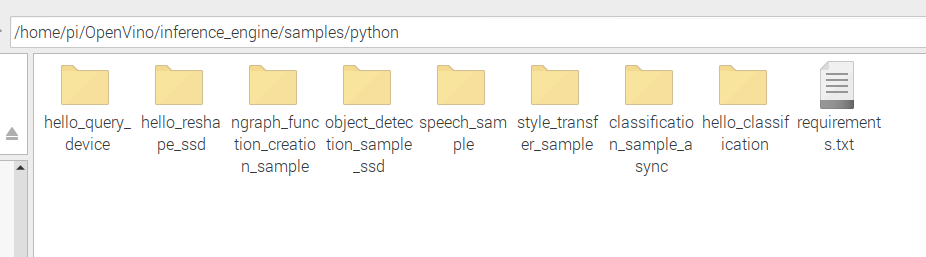

Hey, I'd like to ask how do I run the aforementioned Classification Python Demo on Raspberry Pi? Currently, my folders do not have a classification demo. This is what the latest version of OpenVino gives me:

Additionally, I tried going directly into the github page where there is a classification_demo.py and I downloaded the file, placing it on the same folder from the hello_classification example and I got the following error: ModuleNotFoundError: No module named 'model_api'


Hi Publio,

Thanks for your patience.

For your information, the Classification Python Demo is one of the demo applications from the Open Model Zoo master branch.

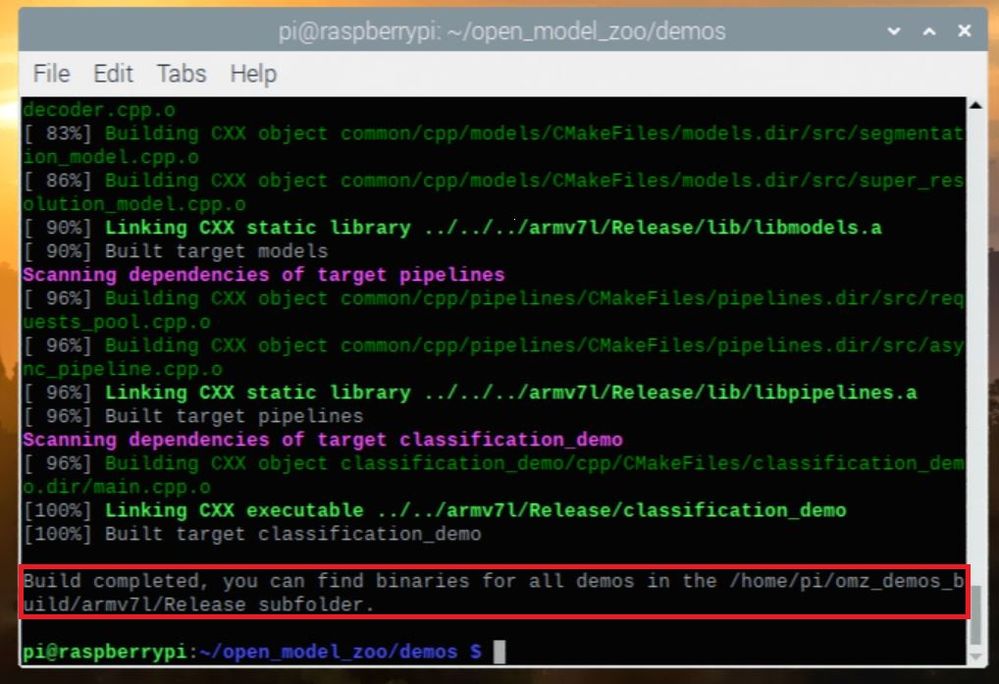

I recommend you follow the steps below to build the Classification C++ Demo:

git clone -b 2021.4.2 https://github.com/openvinotoolkit/open_model_zoo.git

cd <omz_dir>/demos

source /opt/intel/openvino_2021/bin/setupvars.sh

./build_demos.sh --target=classification_demo

To run the application, please use the following command:

./classification_demo -m <path_to_your_custom_model> -i <path_to_folder_with_images> -labels <omz_dir>/data/dataset_classes/imagenet_2012.txt

Regards,

Wan


I was able to follow all the steps except the final one, where I run inference. I tried running it from the following directory: pi@raspberrypi:~/Downloads/open_model_zoo/demos. The command I used was:

./classification_demo -m drunk-sober_VPU.xml -i /home/pi/OpenVino/deployment_tools/inference_engine/samples/python/classification_sample_async/573f0a79-f5f8-465f-9d10-2037595120c4.png -labels /home/pi/Downloads/open_model_zoo/data/dataset_classes/imagenet_2012.txt

Running the above command from the demos directory gave the following error: bash: ./classification_demo: Is a directory.

How can I get around this, and is it possible to build a Python classification demo?


Hi Publio,

You must change to the directory that contains the built application before you execute the command.

For example, on my end the application was built under the following directory:

Regards,

Wan


Hi, thanks for replying. I was able to move forward in my attempt to run inference. However, there is an input shape compatibility problem that prevents me from finally running inference.

My IR input shape is this:

<layers>

<layer id="0" name="x" type="Parameter" version="opset1">

<data element_type="f32" shape="1, 3, 128, 160"/>

<output>

<port id="0" names="x:0" precision="FP32">

<dim>1</dim>

<dim>3</dim>

<dim>128</dim>

<dim>160</dim>

</port>

</output>

</layer>

I am trying to test with a single image. When converting my model, I specified the reverse input channels option and set the input shape to [1,128,160,3]. My Keras model originally had an input shape of (None, 128, 160, 3).

After running the inference command from the proper directory, I got the following error:

[ INFO ] InferenceEngine: IE version ......... 2021.4

Build ........... 2

[ INFO ] Parsing input parameters

[ INFO ] Reading input

[ INFO ] Files were added: 1

[ INFO ] resized_image.png

[ INFO ] Loading Inference Engine

[ INFO ] Device info:

[ INFO ] MYRIAD

myriadPlugin version ......... 2021.4

Build ........... 2

Loading network files

[ INFO ] Batch size is forced to 1.

[ ERROR ] Model input has incorrect image shape. Must be NxN square. Got 128x160.
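The two shapes quoted in this post differ only in layout: Keras reports (None, 128, 160, 3), which is batch, height, width, channels, while the converted IR lists 1, 3, 128, 160, which is batch, channels, height, width. As a minimal sketch, assuming the image is already a NumPy array with height 128, width 160, and 3 channels, the rearrangement could look like this (`hwc_to_nchw` is a hypothetical helper name):

```python
import numpy as np


def hwc_to_nchw(image):
    """Move channels first and add a batch axis: (H, W, C) -> (1, C, H, W)."""
    if image.ndim != 3:
        raise ValueError("expected an array of shape (height, width, channels)")
    return np.expand_dims(image.transpose(2, 0, 1), axis=0)


image = np.zeros((128, 160, 3), dtype=np.float32)  # placeholder for a real image
blob = hwc_to_nchw(image)
print(blob.shape)  # (1, 3, 128, 160)
```

This only addresses the layout. The "Must be NxN square" message is a separate constraint of the demo and is not affected by the transpose.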


Hi Publio,

Thanks for your information.

Could you please share the following information with us to further assist you?

· Source model (Keras / TensorFlow model)

· Intermediate Representation (IR)

· Command you used to convert your source model into IR.

Regards,

Wan


Hi Wan,

I have uploaded the requested files to Google Drive here.

Additionally, the command used to run inference was:

./classification_demo -m model_VPU.xml -i /home/pi/Desktop/images/my_test_image.tiff -labels /home/pi/Downloads/open_model_zoo/data/dataset_classes/imagenet_2012.txt


Hi Publio,

I encountered the same error when using your Intermediate Representation (IR) (model_VPU.xml + bin) with the Classification Python Demo. I therefore tried to convert your Keras model to IR myself.

However, I encountered an error: “TypeError: __init__() got an unexpected keyword argument 'axis'” when converting your “vgg19_transfered_model.h5” to SavedModel Format with the following command:

import tensorflow as tf

from tensorflow import keras

model = keras.models.load_model('vgg19_transfered_model.h5')

tf.saved_model.save(model, 'model')

For your information, here are my TensorFlow and Keras versions:

TensorFlow version = 2.4.3

Keras version = 2.4.0

Do you have another version of TensorFlow and Keras?

Are you using the same command to convert “vgg19_transfered_model.h5” to SavedModel format?

Also, do you have the weights file of this model?

Regards,

Wan


Hi Wan, I was using manual code to save the model to .pb format. I was able to successfully convert the model using your code:

import tensorflow as tf

from tensorflow import keras

model = keras.models.load_model('vgg19_transfered_model.h5')

tf.saved_model.save(model, 'model')

Could you please try with this pb model?


Hi Publio,

After upgrading TensorFlow and Keras to version 2.6, I was able to convert the Keras model into SavedModel format.

For your information, the recommended TensorFlow 2 version for OpenVINO 2021.4.2 is tensorflow~=2.4.1. You may refer to requirements_tf2.txt.

We'll continue to investigate this issue and update you soon.

Regards,

Wan


Hi Publio,

The error you encountered, “[ ERROR ] Model input has incorrect image shape. Must be NxN square. Got 128x160.”, was caused by the model’s non-square input image shape. You may refer to Line 81 to Line 84 in classification_model.cpp for more information.

The Classification CPP demo has only been validated on specific models from the Intel Pre-Trained models and Public Pre-Trained models. In your case the topology is supported, but the demo's square-input constraint prevents it from being used with your model.
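Whether an IR will run into this constraint can be checked before launching the demo by reading the input dimensions from the .xml file. The following is a rough sketch and not the demo's own code: `input_shapes` and `has_square_input` are hypothetical helpers, the sample XML is the Parameter layer posted earlier in this thread wrapped in a `<net>` root so that it parses on its own, and the input is assumed to be in NCHW order.

```python
import xml.etree.ElementTree as ET

# Parameter layer quoted earlier in the thread; the <net> wrapper is added here.
SAMPLE_IR = """<net><layers>
<layer id="0" name="x" type="Parameter" version="opset1">
<data element_type="f32" shape="1, 3, 128, 160"/>
<output><port id="0" names="x:0" precision="FP32">
<dim>1</dim><dim>3</dim><dim>128</dim><dim>160</dim>
</port></output>
</layer>
</layers></net>"""


def input_shapes(ir_xml_text):
    """Map each Parameter (input) layer name to its list of output dimensions."""
    root = ET.fromstring(ir_xml_text)
    shapes = {}
    for layer in root.iter("layer"):
        if layer.get("type") != "Parameter":
            continue
        port = layer.find("./output/port")
        if port is not None:
            shapes[layer.get("name")] = [int(d.text) for d in port.findall("dim")]
    return shapes


def has_square_input(shape):
    """Assumes NCHW order, so height and width are the last two dimensions."""
    return len(shape) == 4 and shape[2] == shape[3]


for name, shape in input_shapes(SAMPLE_IR).items():
    print(name, shape, "square" if has_square_input(shape) else "not square")
```

For the IR in this thread the check reports a 128 by 160 input, which matches the demo's error message.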

For your information, the supported models for Classification CPP demo are available on the following page: Classification C++ Demo

We suggest you create your own demo script to test with your custom model.

You may refer to Hello Classification C++ Sample to create your own demo application.

Regards,

Wan


Hi Munesh, thanks for explaining.

Is there an example of how to run inference from a Python script? I need it because I am using Python 3 for my project: the images are received over TCP/IP, and I do some additional processing before classification. Is there any chance I could get a simple example of how to run my model from a Python script?


Hi Publio,

You can refer to the following demo and samples:

Classification Python Demo – Supports VGG19 model.

Image Classification Async Python Sample - Example - Validated with AlexNet model only.

Hello Classification Python Sample – Example - Validated with AlexNet and GoogleNet-v1 models only.

Here is an interesting article that shows step by step how to create inference scripts.

How to build an Image Classifier using OpenVINO & Alexnet models

Please also watch the following video, which introduces the simplest way to infer.

Inference in Five Lines of Code
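For a custom model such as the one in this thread, a standalone script can be quite small. The sketch below assumes the OpenVINO 2021.4 Python API (`openvino.inference_engine.IECore`), OpenCV for resizing, an NCHW input, and a single sigmoid output where a score of 0.5 or above means the positive class. None of these assumptions is confirmed in the thread, and any normalization applied during training would have to be repeated before inference. `classify` and `label_from_score` are hypothetical names.

```python
def classify(xml_path, bin_path, image_bgr, device="MYRIAD"):
    """Run one synchronous inference and return the raw output array."""
    # Imported lazily so label_from_score can be used without OpenVINO installed.
    import cv2
    from openvino.inference_engine import IECore

    ie = IECore()
    net = ie.read_network(model=xml_path, weights=bin_path)
    input_blob = next(iter(net.input_info))
    out_blob = next(iter(net.outputs))
    _, _, height, width = net.input_info[input_blob].input_data.shape

    # cv2.resize takes (width, height); the IR is assumed to expect NCHW.
    resized = cv2.resize(image_bgr, (width, height))
    blob = resized.transpose(2, 0, 1)[None].astype("float32")

    exec_net = ie.load_network(network=net, device_name=device)
    result = exec_net.infer(inputs={input_blob: blob})
    return result[out_blob]


def label_from_score(score, threshold=0.5, labels=("negative", "positive")):
    """Map a single sigmoid score to a class name (assumed label order)."""
    return labels[1] if float(score) >= threshold else labels[0]
```

In a TCP/IP pipeline, the decoded frame would be passed as `image_bgr`, and `label_from_score(output.flat[0])` would turn the raw output into a label. The sketch reloads the network on every call for brevity; a long-running service would more likely load it once and reuse the executable network.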

Regards,

Munesh
