I have an issue converting an ONNX model exported from MATLAB to IR files.

My environment: macOS 11.0.1, Python 3.8, OpenVINO 2021.2.185

I have attached the ONNX file as Train.onnx.zip.

XudeMacBook:model_optimizer xuhe$ python3 mo_onnx.py --input_model Train.onnx

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: /Users/xuhe/intel/openvino_2021.2.185/deployment_tools/model_optimizer/Train.onnx

- Path for generated IR: /Users/xuhe/intel/openvino_2021.2.185/deployment_tools/model_optimizer/.

- IR output name: Train

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: None

- Reverse input channels: False

ONNX specific parameters:

Model Optimizer version: 2021.2.0-1877-176bdf51370-releases/2021/2

/Users/xuhe/intel/openvino_2021.2.185/deployment_tools/model_optimizer/mo/ops/reshape.py:72: RuntimeWarning: divide by zero encountered in long_scalars

undefined_dim = num_of_input_elements // num_of_output_elements

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (<class 'extensions.middle.DecomposeBias.DecomposeBias'>): After partial shape inference were found shape collision for node CNN1 (old shape: [ 0 16 1 1024], new shape: [ -1 16 1 1024])

Hi,

May I know which ONNX model you are using (e.g. topology, framework layers)?

Also, could you confirm whether your current model uses a dynamic shape for the input?

If it does, dynamic shapes for RNN/GRU in ONNX models are not supported yet. However, our developers worked on this in Feb 2021, and it may be included in the next OpenVINO release: ONNX RNN/GRU enable dynamic input shape by mitruska · Pull Request #4241 · openvinotoolkit/openvino ...

Sincerely,

Iffa

Sorry, I don't really understand the difference between a topology and a framework layer. I think my ONNX model is a framework layer. Here is my process in MATLAB:

I tried setting a dynamic input shape by typing "--input_shape[-1,16,1,1024]", as the error message suggested, but it was rejected as unrecognized arguments.

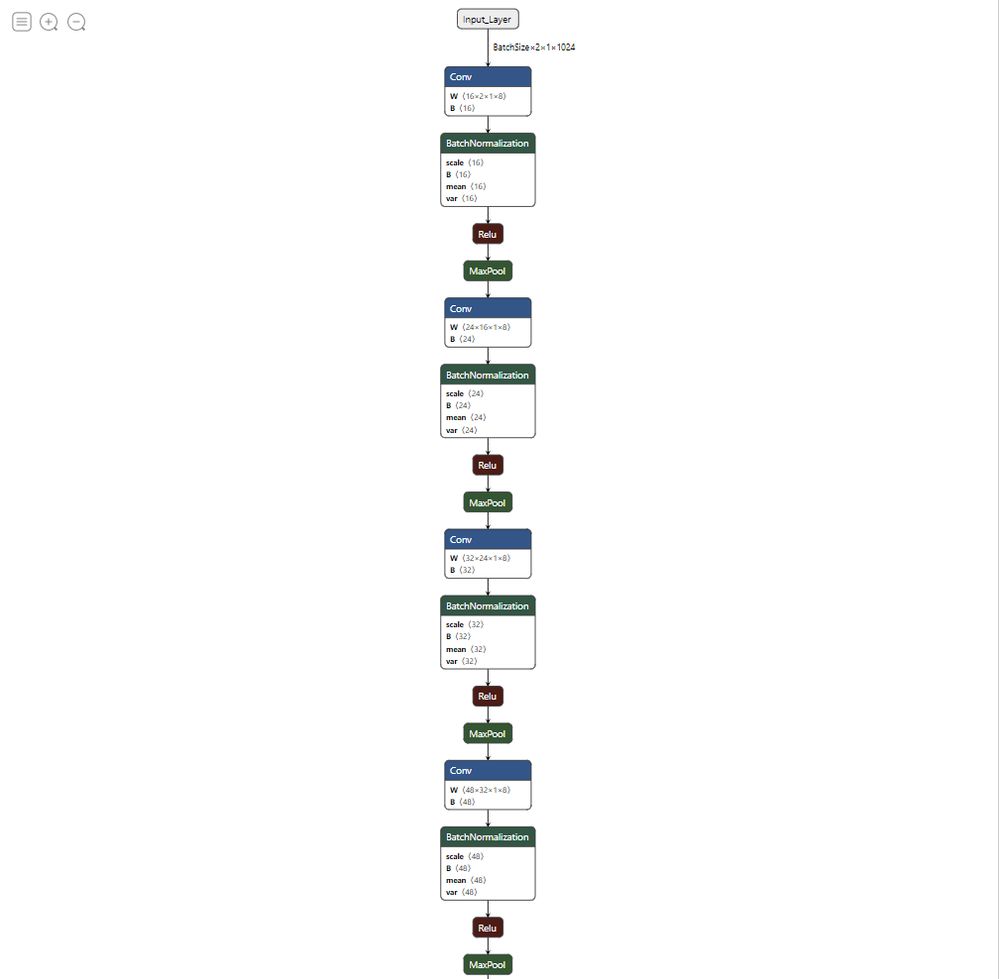

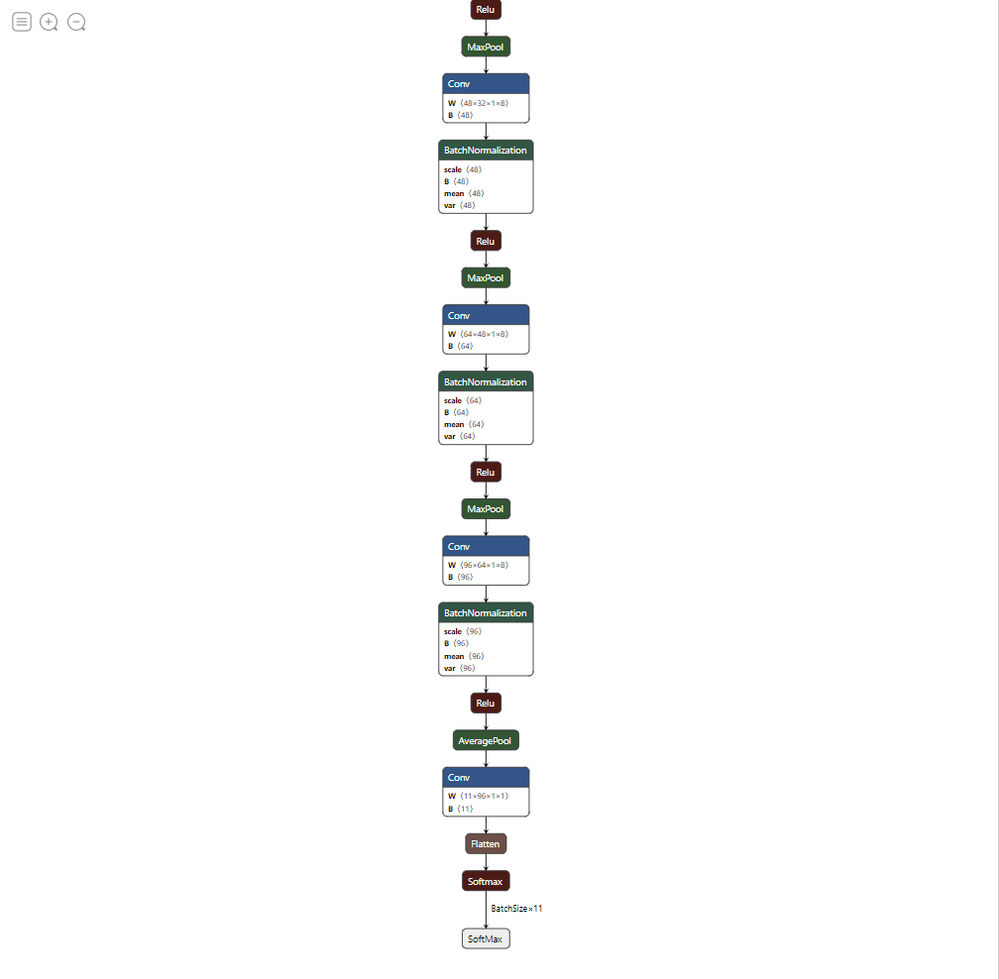

This is the design of my trained network.

Sincerely,

Jerry

Hi,

It seems that the input shape in the Train.onnx model is incorrect. This is why the error persists.
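As a rough illustration of why a 0 in the shape is a problem, here is a minimal sketch of how a reshape typically resolves a -1 dimension. This is not the Model Optimizer's actual code: the function name `infer_undefined_dim` is hypothetical, the two variable names are borrowed from the warning line in the log above, and the shapes passed in are assumptions chosen for illustration.

```python
from math import prod


def infer_undefined_dim(input_shape, target_shape):
    """Resolve the single -1 in target_shape from the element count of input_shape."""
    if list(target_shape).count(-1) != 1:
        raise ValueError("expected exactly one -1 in the target shape")
    num_of_input_elements = prod(input_shape)
    # Product of the known target dimensions (everything except the -1).
    num_of_output_elements = prod(d for d in target_shape if d != -1)
    if num_of_output_elements == 0:
        # A 0 dimension makes the division below undefined.
        raise ValueError("a 0 dimension leaves the -1 dimension undetermined")
    return num_of_input_elements // num_of_output_elements


# A fully defined batch of 1 resolves cleanly.
print(infer_undefined_dim([1, 16, 1, 1024], [-1, 1024]))  # 16

# A batch of 0 cannot be resolved.
try:
    infer_undefined_dim([0, 16, 1, 1024], [0, -1])
except ValueError as err:
    print("cannot infer:", err)
```

With a batch size of 0 the element count is zero, so the division has nothing to work with. That is consistent with the "divide by zero" warning and the [0 16 1 1024] versus [-1 16 1 1024] collision reported in the log.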

You need to use the input_shape parameter with the correct format, [1,1,32,<WIDTH>], as proposed by Evgeny (how to use dynamic shape ? · Issue #436 · openvinotoolkit/openvino (github.com)). The successful result is shown below.

Please implement one of these options:

1) Fix the input shape in the model to follow the OpenVINO format, [1,1,16,<WIDTH>], and run mo.py without the input_shape parameter.

2) Specify input_shape during model conversion and follow the exact format: [1,1,16,1024]
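For option 2, the flag and its value need to reach the tool as two separate tokens. The sketch below uses Python's standard argparse with a hypothetical two-option parser (`mo_sketch` is a stand-in, not the real Model Optimizer parser) to show one likely reason the earlier attempt, typed without a space, was reported as an unrecognized argument.

```python
import argparse


def build_parser():
    # Hypothetical stand-in for a converter CLI; only two options are modeled.
    parser = argparse.ArgumentParser(prog="mo_sketch")
    parser.add_argument("--input_model")
    parser.add_argument("--input_shape")
    return parser


def parse_shape(text):
    # Turn "[1,1,16,1024]" into [1, 1, 16, 1024].
    return [int(dim) for dim in text.strip("[]").split(",")]


parser = build_parser()

# Flag and value as separate tokens: the shape is recognized.
good, good_extra = parser.parse_known_args(
    ["--input_model", "Train.onnx", "--input_shape", "[1,1,16,1024]"]
)

# Flag and value fused into one token: no option with that name exists.
bad, bad_extra = parser.parse_known_args(
    ["--input_model", "Train.onnx", "--input_shape[-1,16,1,1024]"]
)

print(parse_shape(good.input_shape), good_extra)
print(bad.input_shape, bad_extra)
```

On the command line this corresponds to something like `python3 mo_onnx.py --input_model Train.onnx --input_shape "[1,1,16,1024]"`, with a space after `--input_shape`. The quotes are a precaution, since some shells (zsh, for example) treat unquoted square brackets as a glob pattern.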

Sincerely,

Iffa

Greetings,

Intel will no longer monitor this thread since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Sincerely,

Iffa
