Hi,

I am trying to convert a DINO model to IR for inference on a VPU. Conversion to IR with v2022.1 fails with this error:

[ ERROR ] Check 'i < input_pshape.rank().get_length()' failed at C:\j\workspace\private-ci\ie\build-windows-vs2019@3\b\repos\openvino\src\core\src\op\reshape.cpp:391:

While validating node 'v1::Reshape Reshape_428470 (onnx::Shape_14522[0]:f32{?,256}, onnx::Reshape_14532[0]:i64{3}) -> (f32{?,?,?})' with friendly_name 'Reshape_428470':

'0' dimension is out of range

Hence, I tried creating the IR files with v2022.3 and running inference on v2022.1. I was able to convert the ONNX model to IR in v2022.3, but inference on v2022.1 fails with the following error (even though I converted all ops to FP16 using --data_type FP16):

compiled_model = benchmark.core.compile_model(model, benchmark.device)

File "C:\Program Files (x86)\Intel\openvino_2022\python\python3.9\openvino\runtime\ie_api.py", line 266, in compile_model

super().compile_model(model, device_name, {} if config is None else config)

RuntimeError: Less_3204 of type Less: [ GENERAL_ERROR ]

C:\j\workspace\private-ci\ie\build-windows-vs2019@3\b\repos\openvino\src\plugins\intel_myriad\graph_transformer\src\stages\eltwise.cpp:164 Stage node Less_3204 (Less) types check error: input #0 has type S32, but one of [FP16] is expected
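To pinpoint which node and input the plugin is rejecting, the message can be picked apart mechanically. The following is a minimal stdlib-only sketch; the regular expression is modeled on the single message shape quoted above, and the names `parse_type_check_error` and `TypeCheckError` are hypothetical helpers, not part of OpenVINO.

```python
import re
from typing import List, NamedTuple, Optional

# Pattern modeled on the one message shape quoted in this thread.
# Assumption: other plugin errors may be worded differently and will not match.
_TYPE_CHECK = re.compile(
    r"Stage node (?P<node>\S+) \((?P<op>\w+)\) types check error: "
    r"input #(?P<index>\d+) has type (?P<actual>\w+), "
    r"but one of \[(?P<expected>[^\]]*)\] is expected"
)


class TypeCheckError(NamedTuple):
    node: str            # node name, e.g. "Less_3204"
    op: str              # operation type, e.g. "Less"
    input_index: int     # which input failed the check
    actual: str          # type the plugin saw
    expected: List[str]  # types the plugin would accept


def parse_type_check_error(message: str) -> Optional[TypeCheckError]:
    """Return the parsed fields, or None if the message has another shape."""
    match = _TYPE_CHECK.search(message)
    if match is None:
        return None
    expected = [t.strip() for t in match.group("expected").split(",") if t.strip()]
    return TypeCheckError(
        node=match.group("node"),
        op=match.group("op"),
        input_index=int(match.group("index")),
        actual=match.group("actual"),
        expected=expected,
    )
```

Applied to the message above, this reports node `Less_3204`, op `Less`, input 0, actual type `S32`, expected `FP16`, which shows that the offending input is an integer tensor rather than a floating-point one.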

This is the model link: https://drive.google.com/drive/folders/1P-hwHMCYsu5bC4YKWa532rdQ3pYLkJ3F?usp=sharing

Hello Simardeep,

Thank you for reaching out to us.

As you can see in the release notes, the MYRIAD plugin is not part of the 2022.3 release, so you need to use 2022.1 to run on a MYRIAD stick.

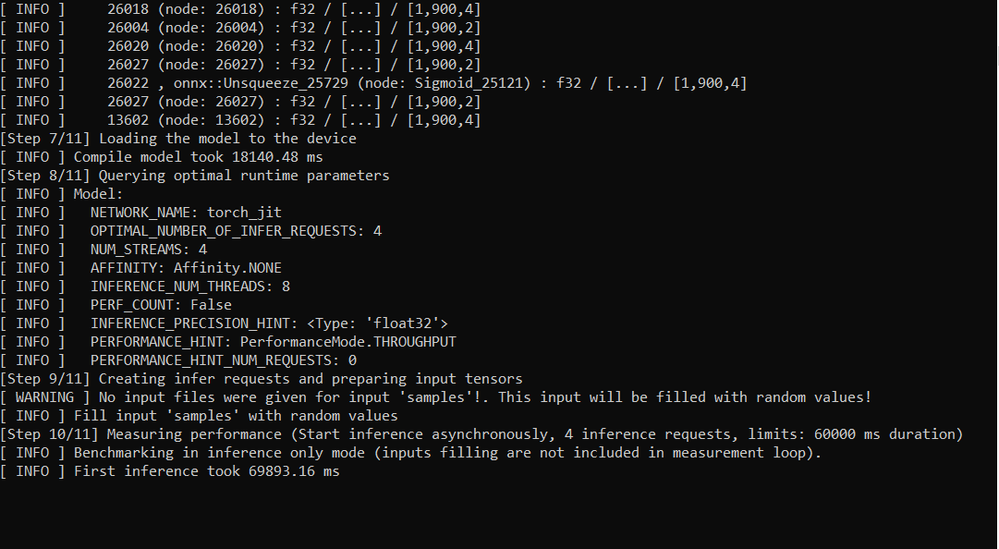

I tested your model on my side. I was able to convert your ONNX model to IR using OpenVINO 2022.3. I then tested it with benchmark_app, and the test hung.

I also tried to convert the model using OpenVINO 2022.1 and received a similar error. The error may be due to unsupported operations (such as opsets and layers).

Just so you're aware, using two different OpenVINO versions to convert and infer a model is not recommended, because it will most likely cause conflicts between the two versions.
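A quick way to catch such a mismatch before loading the model is to compare the converter version recorded in the IR with the runtime version. This is only a sketch under one assumption: that the IR .xml records the converter version in an `<MO_version value="..."/>` element. The function names are hypothetical.

```python
import re
import xml.etree.ElementTree as ET
from typing import Optional, Tuple


def release_of(version: str) -> Optional[Tuple[int, int]]:
    """Extract (year, minor) from a version string such as '2022.3.0-...'."""
    match = re.search(r"(\d{4})\.(\d+)", version)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def ir_converter_version(ir_xml: str) -> Optional[str]:
    """Return the converter version stored in the IR text, if present.

    Assumption: the version lives in an <MO_version value="..."/> element
    somewhere in the document.
    """
    root = ET.fromstring(ir_xml)
    for element in root.iter("MO_version"):
        return element.get("value")
    return None


def same_release(ir_xml: str, runtime_version: str) -> bool:
    """True only if both versions parse and share the same year.minor."""
    converter = ir_converter_version(ir_xml)
    if converter is None:
        return False
    ir_release = release_of(converter)
    return ir_release is not None and ir_release == release_of(runtime_version)
```

The runtime string can be passed in from wherever it is available, for example `openvino.runtime.get_version()` if your installation provides it. An IR produced by 2022.3 and checked against a 2022.1 runtime would return `False` here.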

Sincerely,

Zulkifli

Hi,

I have already modified numerous layers to make the model compatible with OpenVINO 2022.1. Is the error above due to an unsupported layer, or might there be another cause? Also, is there any workaround to convert this model?

Hello Simardeep,

The MO errors you received when using OpenVINO 2022.1 are due to unsupported layers and operations. These issues are fixed in OpenVINO 2022.3.

The model runs inference on CPU without any error, but fails when the Myriad plugin is used. The reason is that OpenVINO 2022.3 doesn't support Myriad.

If you want to use Myriad as a target device, I would suggest waiting for the next OpenVINO release (expected 2023.1) for Myriad support.

Note that your model consumes a lot of CPU and memory when running, and a Myriad device may not be able to handle that load.

Sincerely,

Zulkifli

I do agree that this model has high memory consumption. Just one question: when compiling with v2022.1, can you help me pinpoint the cause of the error? I have already made numerous changes to get the model to compile with v2022.3 and was wondering whether the same could be done for v2022.1.

Hello Simardeep,

The model file you are currently using is not supported on MYRIAD with version 2022.1.

When I run benchmark_app on the .onnx file, the CPU works fine. When I tried a MYRIAD device, I received an error:

RuntimeError: onnx::Where_3433 of type Less: [ GENERAL_ERROR ]

C:\j\workspace\private-ci\ie\build-windows-vs2019@3\b\repos\openvino\src\plugins\intel_myriad\graph_transformer\src\stages\eltwise.cpp:164 Stage node onnx::Where_3433 (Less) types check error: input #0 has type S32, but one of [FP16] is expected

From the error message, input #0 of a Less layer has type S32 (32-bit integer), which MYRIAD does not accept for this operation; it expects FP16. This issue is fixed in the latest OpenVINO 2022.3.0, but that version does not yet support MYRIAD.
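To list every comparison layer in an IR that receives an integer input, and so is a candidate for the same S32 type check failure, the IR .xml can be scanned directly. The sketch below is stdlib-only and rests on two assumptions: that each `<layer>` lists its inputs as `<port precision="...">` elements under `<input>`, and that integer precisions are spelled as in the `INTEGER_PRECISIONS` set. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, List, Tuple

# Assumption: these are the precision strings the IR uses for integer tensors.
INTEGER_PRECISIONS = {"I8", "U8", "I16", "U16", "I32", "U32", "I64", "U64"}
# Element-wise comparison operations, including the Less op from the error.
COMPARISON_OPS = {"Less", "LessEqual", "Greater", "GreaterEqual", "Equal", "NotEqual"}


def integer_inputs(
    ir_xml: str, op_types: Iterable[str] = COMPARISON_OPS
) -> List[Tuple[str, str, int, str]]:
    """Return (layer name, layer type, input index, precision) for each
    integer-typed input of the selected layer types."""
    wanted = set(op_types)
    hits = []
    root = ET.fromstring(ir_xml)
    for layer in root.iter("layer"):
        if layer.get("type") not in wanted:
            continue
        inputs = layer.find("input")
        if inputs is None:
            continue  # layer has no inputs section
        for index, port in enumerate(inputs.findall("port")):
            precision = port.get("precision", "")
            if precision in INTEGER_PRECISIONS:
                hits.append((layer.get("name"), layer.get("type"), index, precision))
    return hits
```

To use it on a file, pass the file contents, for example `integer_inputs(Path("model.xml").read_text())` with a hypothetical `model.xml`. Each hit names a layer whose integer input would need to be cast or reworked in the source model before the plugin accepts it.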

I am using benchmark_app to verify whether it can run inference on the .onnx file directly. If the benchmark fails, model optimization will fail as well.

I suggest using a model that MYRIAD supports (see Supported Devices), paying particular attention to the supported framework layers, or waiting for the upcoming OpenVINO 2022.3.1 release, which is due soon.

Sincerely,

Zulkifli

Hi Simardeep,

This thread will no longer be monitored since we have provided alternatives for you. If you need any additional information from Intel, please submit a new question.

Sincerely,

Zulkifli
