1.Problem:

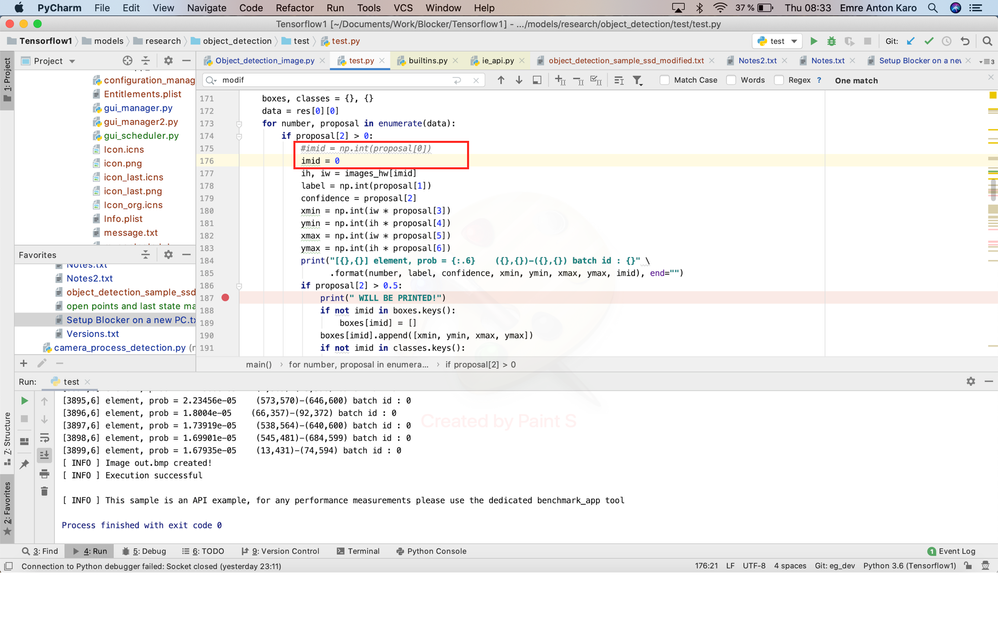

I want to optimise my custom-trained model (Detects different body parts with faster_rcnn_inception_v2_coco-tensorflow) for my Intel Core i5 which works fine without openvino optimisation. My problem is if I execute my custom-trained model (With my created openvino .xml and .bin files) with openvino object_detection_sample_ssd.py sample, several irrelevant parts are marked by inference engine, although what I expect is just specific body parts like face, hands etc. (please see attachment for the output:"my output.png").

Could someone please help me about my problem? For the details please see below.

Thanks a lot in advance.

PS:

1- If I run the same sample, object_detection_sample_ssd.py, with the pre-trained OpenVINO model "faster-rcnn-resnet101-coco-sparse-60-0001", it works fine: the person is detected as expected.

2- I modified the original object_detection_sample_ssd.py (see the attached modified file) because the code crashed during execution. The same modified code works fine with faster-rcnn-resnet101-coco-sparse-60-0001, as mentioned above. Moreover, faster-rcnn-resnet101-coco-sparse-60-0001 produced exactly the same errors during execution, so it cannot be run with the original object_detection_sample_ssd.py either. The original file is linked under Environment, and the modified lines are in the attachment (object_detection_sample_ssd_modified.txt), marked with the comment "#Modified".

2. Commands:

2.1.1. XML and BIN generation (see the attached my_pipeline.config.txt for my my_pipeline.config file):

python3.6 mo_tf.py --input_model /Users/Documents/Work/Blocker/OpenVINO/my_frozen_inference_graph.pb --transformations_config /opt/intel/openvino_2021.2.185/deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support.json --tensorflow_object_detection_api_pipeline_config /Users/Documents/Work/Blocker/OpenVINO/my_pipeline.config

2.1.2. XML and BIN generation output:

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: /Users/Documents/Work/Blocker/OpenVINO/my_frozen_inference_graph.pb

- Path for generated IR: /opt/intel/openvino_2021.2.185/deployment_tools/model_optimizer/.

- IR output name: my_frozen_inference_graph

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: None

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: /Users/Documents/Work/Blocker/OpenVINO/my_pipeline.config

- Use the config file: None

Model Optimizer version: 2021.2.0-1877-176bdf51370-releases/2021/2

[ WARNING ] Model Optimizer removes pre-processing block of the model which resizes image keeping aspect ratio. The Inference Engine does not support dynamic image size so the Intermediate Representation file is generated with the input image size of a fixed size.

Specify the "--input_shape" command line parameter to override the default shape which is equal to (600, 600).

The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.

The graph output nodes "num_detections", "detection_boxes", "detection_classes", "detection_scores" have been replaced with a single layer of type "Detection Output". Refer to IR catalogue in the documentation for information about this layer.

[ SUCCESS ] Generated IR version 10 model.

[ SUCCESS ] XML file: /opt/intel/openvino_2021.2.185/deployment_tools/model_optimizer/./my_frozen_inference_graph.xml

[ SUCCESS ] BIN file: /opt/intel/openvino_2021.2.185/deployment_tools/model_optimizer/./my_frozen_inference_graph.bin

[ SUCCESS ] Total execution time: 126.86 seconds.

[ SUCCESS ] Memory consumed: 744 MB.
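Because the Model Optimizer removed the preprocessing block and fixed the input at 600x600, the caller has to resize the image itself. The converted Faster R-CNN IR also takes a second input, image_info (see error 2 further down). Below is a minimal sketch of building both inputs with NumPy only, assuming an NCHW image input and an image_info layout of [height, width, scale]; the function name prepare_inputs is hypothetical, and the nearest-neighbour resize is a stand-in for cv2.resize.

```python
import numpy as np


def prepare_inputs(image_hwc: np.ndarray, target_h: int = 600, target_w: int = 600):
    """Build (image_tensor, image_info) for a fixed-size Faster R-CNN IR.

    image_hwc: H x W x C image (e.g. as loaded by OpenCV).
    Returns a float32 NCHW tensor of shape (1, C, target_h, target_w) and a
    float32 image_info array of shape (1, 3).
    """
    src_h, src_w = image_hwc.shape[:2]
    # Nearest-neighbour resize with NumPy indexing (stand-in for cv2.resize).
    rows = np.arange(target_h) * src_h // target_h
    cols = np.arange(target_w) * src_w // target_w
    resized = image_hwc[rows][:, cols]
    # HWC -> CHW, then prepend the batch dimension.
    image_tensor = resized.transpose(2, 0, 1)[np.newaxis].astype(np.float32)
    # Assumed layout [height, width, scale]; scale is 1 because the image was
    # already resized to the fixed IR input size.
    image_info = np.array([[target_h, target_w, 1]], dtype=np.float32)
    return image_tensor, image_info
```

If the IR is regenerated with a different --input_shape, target_h and target_w have to match it.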

2.2.1. Execution:

python3.6 object_detection_sample_ssd_modified.py -i /Users/Documents/Work/Blocker/Tensorflow1/models/research/object_detection/images/test/300_1000.bmp -m /Users/Documents/Work/Blocker/OpenVINO/new/my_frozen_inference_graph.xml --model_bin /Users/Documents/Work/Blocker/OpenVINO/new/my_frozen_inference_graph.bin -d CPU

2.2.2. Execution output:

[3898,6] element, prob = 3.66124e-05 (2,442)-(52,477) batch id : 0

[3899,6] element, prob = 3.2293e-05 (307,408)-(439,600) batch id : 0

[ INFO ] Image out.bmp created!

[ INFO ] Execution successful

[ INFO ] This sample is an API example, for any performance measurements please use the dedicated benchmark_app tool

3. Environment:

OS: macOS Catalina 10.15.4

TensorFlow: 1.15.2

OpenVINO: 2021.2.185

Sample: https://github.com/openvinotoolkit/openvino/blob/master/inference-engine/ie_bridges/python/sample/object_detection_sample_ssd/object_detection_sample_ssd.py

Processor: 1.6 GHz Dual-Core Intel Core i5

Problem solved by me:

The following steps work fine with both my model and the faster-rcnn-resnet101-coco-sparse-60-0001 model. I guess something is buggy in openvino_2021.2; openvino_2020.4 works fine.

STEPS:

1- Install openvino_2020.4 (instead of the current version, openvino_2021.2).

2- Use object_detection_sample_ssd.py from the openvino_2020.4 toolkit (2 modifications were required):

1- Original:

`not_supported_layers = [l for l in net.layers.keys() if l not in supported_layers]`

Modification:

`not_supported_layers = [l for l in net.input_info if l not in supported_layers]`

2- Original:

`net.layers[output_key].type`

Modification:

`for output_key in net.outputs:`

`    if output_key == "detection_output":`

3- Regenerate my .xml and .bin files with the openvino_2020.4 toolkit:

sudo python3.6 mo_tf.py --input_model /Users/Documents/Work/Blocker/OpenVINO/frozen_inference_graph.pb --transformations_config /opt/intel/openvino_2021.2.185/deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support.json --tensorflow_object_detection_api_pipeline_config /Users/Documents/Work/Blocker/OpenVINO/pipeline.config

4- Run the sample with the new .xml created above:

python3.6 -i /Users/karoantonemre/Documents/Work/Blocker/Tensorflow1/models/research/object_detection/images/test/072_1000.bmp -m /Users/karoantonemre/Documents/Work/Blocker/OpenVINO/new/frozen_inference_graph.xml
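Modification 2 in step 2 selects the detection output by its name instead of reading the layer type through net.layers. A small sketch of that selection as a standalone helper, assuming net.outputs is a mapping keyed by output name (as in the 2020.x Python API); the helper name and the fallback for names like "detectionoutput_616" (the layer name seen in error 3 further down) are hypothetical, not part of the sample.

```python
def select_detection_output(output_names, expected="detection_output"):
    """Pick the DetectionOutput blob by name instead of net.layers[...].type."""
    names = list(output_names)  # works for a list or for the keys of a dict
    if expected in names:
        return expected
    # Hypothetical fallback: accept generated names such as "detectionoutput_616".
    for name in names:
        if name.lower().replace("_", "").startswith("detectionoutput"):
            return name
    raise KeyError(f"no DetectionOutput-like output among {names}")


# Usage inside the sample (not executed here):
# output_key = select_detection_output(net.outputs)
```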

Thanks a lot for your support.

Hi,

Sorry, I forgot one modification in my object_detection_sample_ssd_modified.py file. The up-to-date file is attached.

By the way, I saw that my problem is closely related to the following topic:

But unfortunately, in my case object_detection_sample_ssd.py does not work either.

I would appreciate a response.

Thanks a lot in advance.

Hi Emre Gun,

Thanks for reaching out. We are investigating this and will get back to you at the earliest.

Regards,

Aznie

Hi Emre Gun,

Can you clarify what you mean by this passage: "But the same modified code works fine with faster-rcnn-resnet101-coco-sparse-60-0001 as I mentioned above. Moreover faster-rcnn-resnet101-coco-sparse-60-0001 model got also totally the same errors, during the execution. So it is not possible to execute it with the original object_detection_sample_ssd.py either."? Did the original object_detection_sample_ssd.py work fine with the faster-rcnn-resnet101-coco-sparse-60-0001 model? Could you please share a screenshot of the error from the crash you mentioned?

Regards,

Aznie

Hi Aznie,

Thank you for your fast response. The original object_detection_sample_ssd.py did not work with the faster-rcnn-resnet101-coco-sparse-60-0001 model either; I made some modifications to make it work. The first 3 errors occur with both models (mine and faster-rcnn-resnet101-coco-sparse-60-0001); only the fourth one is specific to mine:

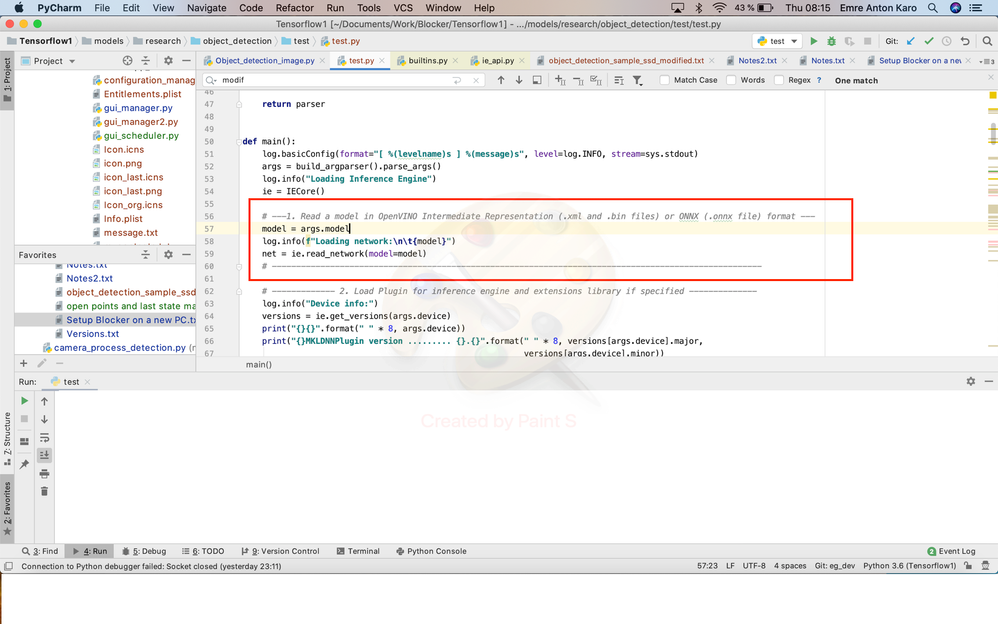

1- Error: "No Error"

1.1 Description: The code did not crash, but as far as I know we also have to pass the .bin file to ie.read_network.

1.2 Original:

1.3 Modification:

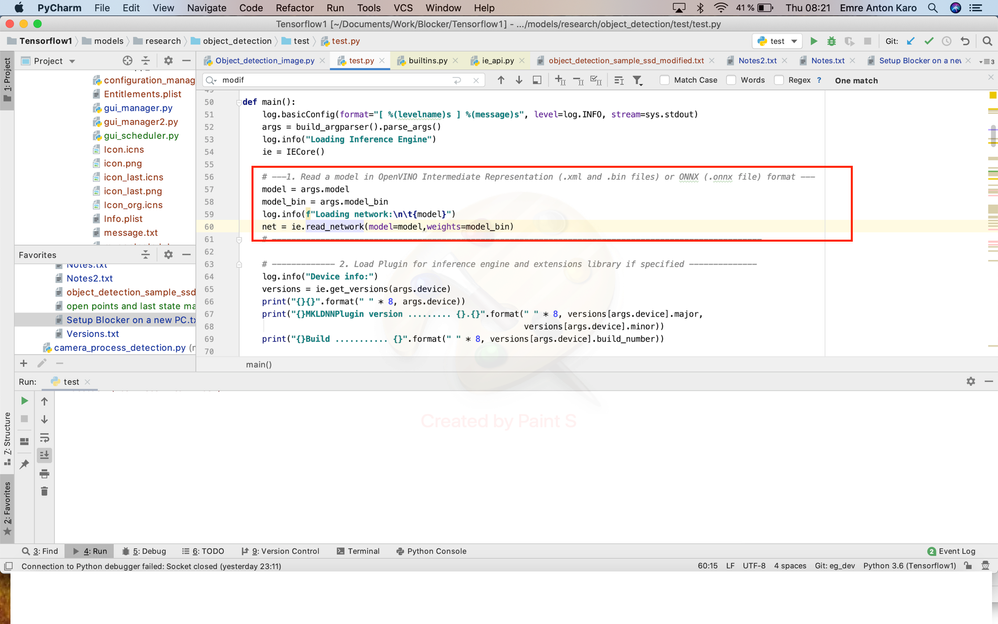

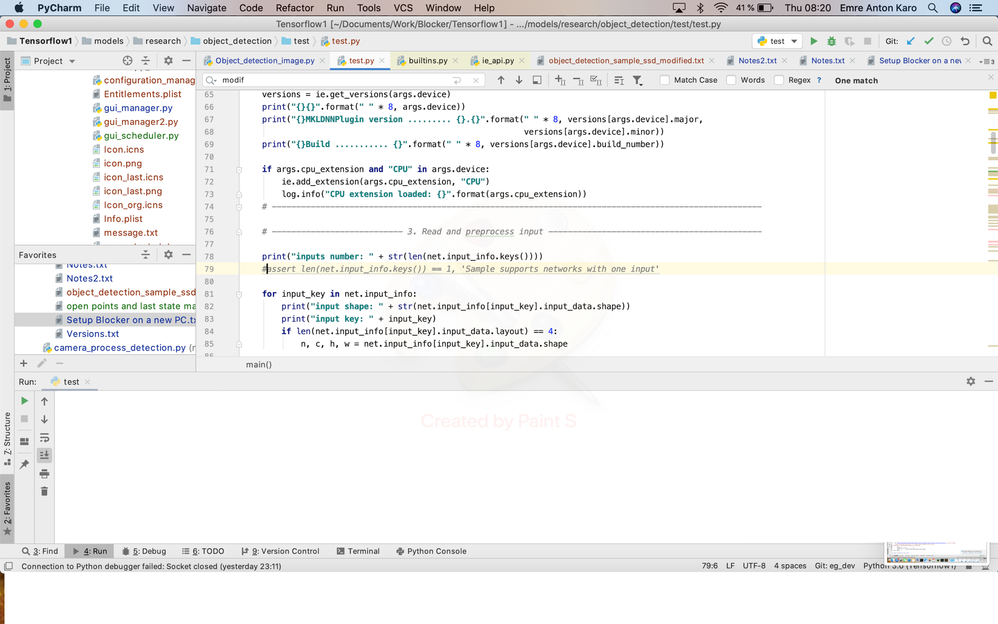
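For error 1, the .bin path can be derived from the .xml path when both share the same stem, as the files generated above do. A minimal sketch with a hypothetical helper ir_paths; the commented usage passes both files to IECore.read_network through its model and weights arguments. As far as I know, read_network also looks for a .bin with the same name when weights is omitted, so passing it explicitly mainly matters when the two files live in different places.

```python
from pathlib import Path


def ir_paths(model_xml):
    """Return (xml_path, bin_path) for an OpenVINO IR, assuming both share a stem."""
    xml = Path(model_xml)
    if xml.suffix.lower() != ".xml":
        raise ValueError(f"expected an .xml file, got {xml}")
    return str(xml), str(xml.with_suffix(".bin"))


# Usage with the Inference Engine Python API (not executed here):
# from openvino.inference_engine import IECore
# ie = IECore()
# xml_path, bin_path = ir_paths("my_frozen_inference_graph.xml")
# net = ie.read_network(model=xml_path, weights=bin_path)
```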

2- Error: "Sample supports networks with one input"

2.1 Description: The model has 2 input keys: image_info and image_tensor. This problem is also discussed in this topic: https://community.intel.com/t5/Intel-Distribution-of-OpenVINO/Not-able-to-run-inference-on-custom-trained-faster-rcnn/td-p/1156986. However, I could not make object_detection_sample_ssd.py work as described in that topic.

2.2 Original:

2.3 Modification:

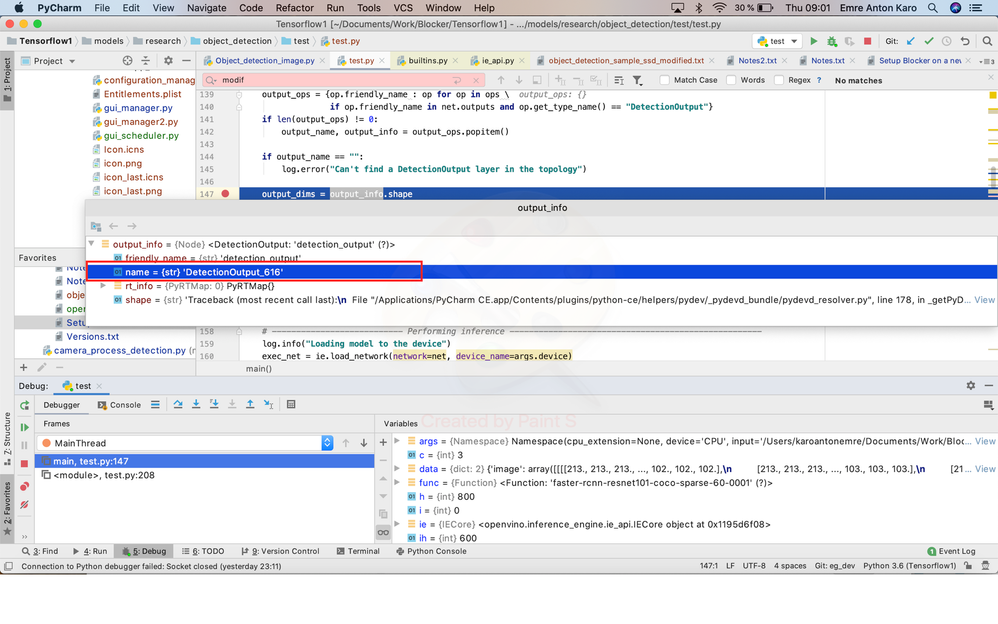

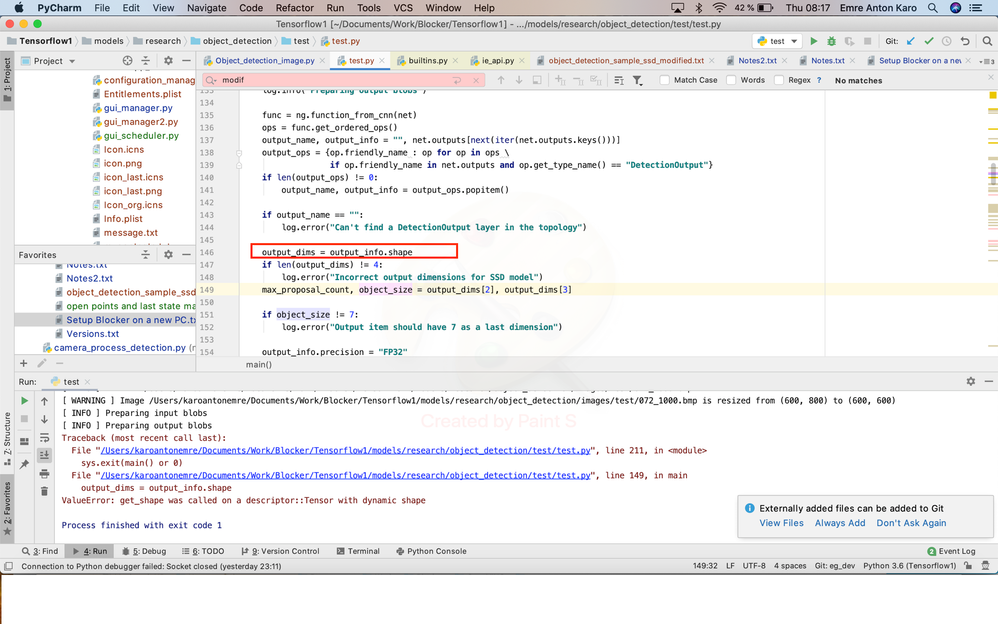

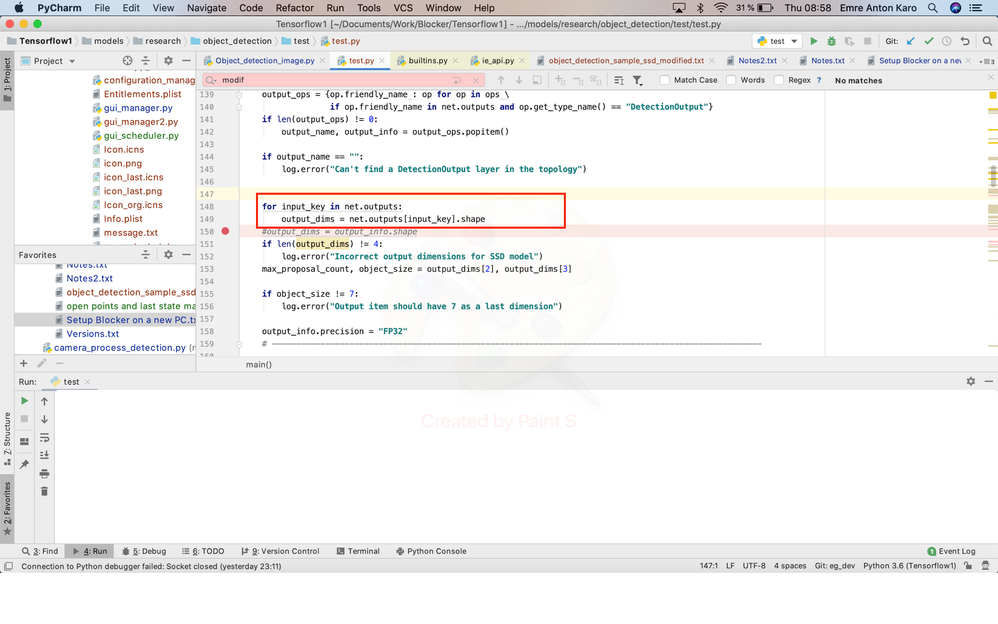
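For error 2, instead of rejecting networks with more than one input, the two inputs can be told apart by rank. A sketch assuming the image input is the only 4D input and image_info the only 2D input; the helper name is hypothetical. In the 2021.x Python API the shapes could be collected with something like `{name: info.input_data.shape for name, info in net.input_info.items()}`.

```python
def split_faster_rcnn_inputs(input_shapes):
    """Return (image_key, info_key) from a {name: shape} mapping.

    Assumes the image input is the only 4D input (N, C, H, W) and
    image_info is the only 2D input (N, 3).
    """
    image_key = info_key = None
    for name, shape in input_shapes.items():
        if len(shape) == 4:
            image_key = name
        elif len(shape) == 2:
            info_key = name
    if image_key is None or info_key is None:
        raise ValueError(f"unexpected inputs: {input_shapes}")
    return image_key, info_key


# Usage (not executed here):
# image_key, info_key = split_faster_rcnn_inputs(shapes)
# res = exec_net.infer(inputs={image_key: image_tensor, info_key: image_info})
```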

3- Error: ValueError: get_shape was called on a descriptor::Tensor with dynamic shape

3.1 Description: Somehow the shape value of detectionoutput_616 is corrupted. I managed to read the value with the modification below.

3.2 Original:

3.3 Modification:

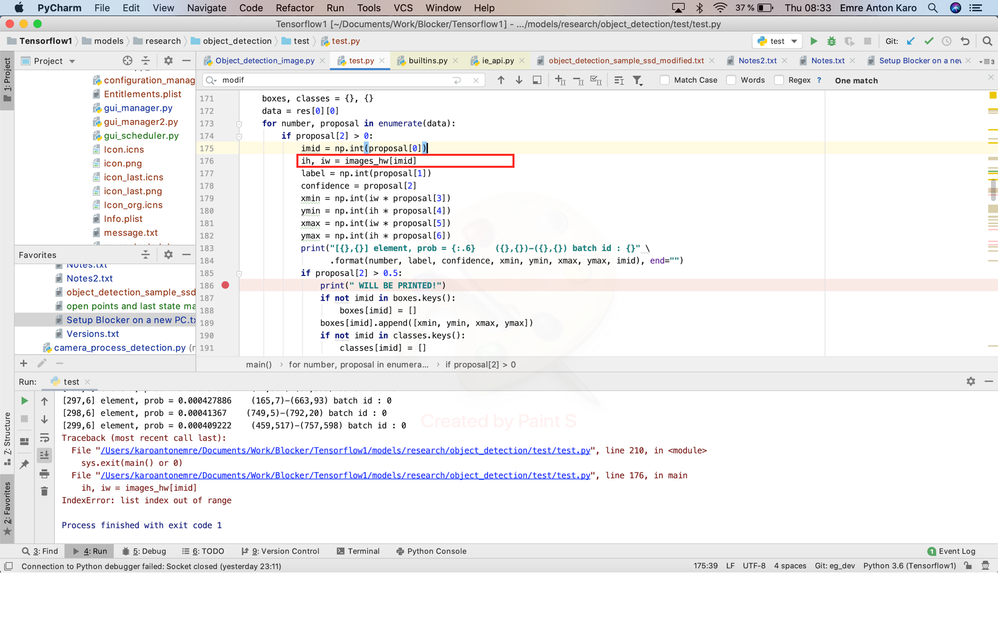

4- Error: List index out of range.

4.1 Description: In my model there are several batch IDs (imid). Unfortunately, I could not understand what the batch ID is. I made a dummy workaround, but I am pretty sure it is not correct :). I just want to see the execution run to the end. (This error occurs only with my model!)

4.2 Original:

4.3 Modification:

PS:

1- By the way, there is also a wrong detection with the faster-rcnn-resnet101-coco-sparse-60-0001 model, as you can see below, but I guess it is a training problem unrelated to the code.

2- You can find my frozen_inference_graph.xml file in the attachment if you need it. In my case, error 3 occurs for layer id="459".
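On error 4: each row of the DetectionOutput blob is [image_id, label, confidence, xmin, ymin, xmax, ymax] with normalised coordinates, and image_id (the imid printed as "batch id") is the index of the image within the input batch. The sample uses that index to look up the image size, which is presumably where "List index out of range" comes from when an id falls outside the batch. The sketch below is not the workaround from this post; it assumes the convention used by the C++ object_detection_sample_ssd, where a negative image_id ends the list of valid detections, and it also drops low-confidence rows such as the prob = 3.66124e-05 entries in the output above. The function name, threshold and 600x600 defaults are assumptions. It does not fix the corrupted output of the 2021.2 run; it only makes parsing defensive.

```python
import numpy as np


def parse_detections(blob, batch_size=1, threshold=0.5, width=600, height=600):
    """Turn a DetectionOutput blob of shape [1, 1, N, 7] into pixel boxes.

    Each row is [image_id, label, confidence, xmin, ymin, xmax, ymax] with
    coordinates normalised to [0, 1]; image_id indexes the input batch.
    """
    results = []
    for image_id, label, conf, xmin, ymin, xmax, ymax in np.asarray(blob).reshape(-1, 7):
        if image_id < 0:
            break  # a negative image_id marks the end of the valid detections
        if image_id >= batch_size or conf < threshold:
            continue  # skip out-of-batch ids and low-confidence boxes
        results.append((int(image_id), int(label), float(conf),
                        int(xmin * width), int(ymin * height),
                        int(xmax * width), int(ymax * height)))
    return results
```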

Thanks a lot for your support.

Hi Emre Gun,

I am glad that you were able to solve the issue. Thank you for reporting the bug and sharing a detailed solution. I tested the same workaround on my machine, and it seems the same error is encountered. Basically, the third error you are getting is caused by the Shape Inference feature. Since this issue has been resolved, this thread will no longer be monitored. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
