
Hi all,

I'm trying to deploy custom U-Net models on an NCS2 device. I saved my U-Net models in .h5 format (Keras/TensorFlow) and converted them to ONNX. Then I used OpenVINO's Model Optimizer to convert them to IR format (.xml and .bin) with data_type set to FP16. Unfortunately, the IR models take a long time to load onto the NCS2 (61.73 seconds), whereas on my CPU loading takes just 2.34 seconds.
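To compare load times across devices consistently, it helps to time only the load step with a monotonic clock. The sketch below is a generic, hypothetical helper (`time_call` is not an OpenVINO API); it uses a stand-in loader so it runs without OpenVINO or an NCS2 attached.

```python
import time
from typing import Any, Callable, Tuple


def time_call(fn: Callable[[], Any]) -> Tuple[Any, float]:
    """Run fn() once and return (result, elapsed wall-clock seconds).

    time.perf_counter() is monotonic and high-resolution, which makes it
    suitable for measuring a single load step.
    """
    start = time.perf_counter()
    result = fn()
    elapsed = time.perf_counter() - start
    return result, elapsed


if __name__ == "__main__":
    # Hypothetical stand-in for a real model load, so the sketch is runnable anywhere.
    def fake_load() -> str:
        time.sleep(0.05)
        return "compiled-model"

    model, seconds = time_call(fake_load)
    print(f"{model} loaded in {seconds:.3f} s")
```

With the OpenVINO 2022.1 Python API, one could read the IR once with `core.read_model(...)` (where `core = openvino.runtime.Core()`) and then time `lambda: core.compile_model(model, "MYRIAD")` against the same call with `"CPU"`. Keeping `read_model` outside the timed call isolates the device-specific compile/load cost; the IR path you pass in is your own.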

I'm not sure what could be the issue behind this.

Note: I have followed the OpenVINO documentation (2022.1) precisely.

Thank you.


Hi Mehul21,

Thank you for reaching out to us.

Intel® Neural Compute Stick 2 (NCS2) is a plug & play AI device for deep learning inference at the edge. It offers access to deep learning inference without the need for large, expensive hardware.

For your information, the performance of the NCS2 is generally lower than that of GPUs and high-end CPUs because of differences in processing and computing power, and this shows up in metrics such as model loading time. You can refer to the Benchmark results for a comparison of OpenVINO™ inference on different hardware.

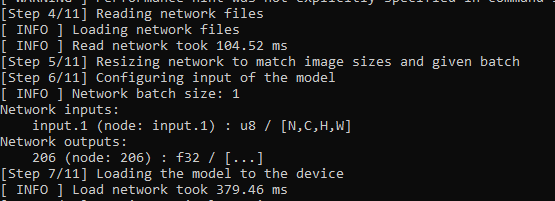

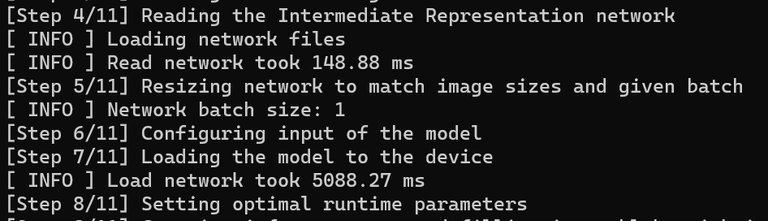

In addition, I ran the Benchmark App on a U-Net model (unet-camvid-onnx-0001) from the Open Model Zoo on both CPU and MYRIAD and observed a difference in network load time: my Intel® Core™ i7-10610U CPU takes 379.46 ms to load the model, while MYRIAD takes 5088.27 ms. My results are shared below:

CPU:

MYRIAD:
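As a rough sanity check (not part of the Benchmark App output), the load times quoted in this thread can be expressed as MYRIAD-to-CPU ratios. The helper name `slowdown` is hypothetical; the figures are the ones reported in this thread.

```python
def slowdown(device_time: float, cpu_time: float) -> float:
    """Return how many times longer the device load took than the CPU load.

    Both arguments must use the same unit; the unit cancels in the ratio.
    """
    return device_time / cpu_time


# unet-camvid-onnx-0001 via Benchmark App (milliseconds)
benchmark = slowdown(5088.27, 379.46)
# custom U-Net from the original post (seconds)
custom = slowdown(61.73, 2.34)

print(f"unet-camvid-onnx-0001: MYRIAD/CPU = {benchmark:.1f}x")  # 13.4x
print(f"custom U-Net: MYRIAD/CPU = {custom:.1f}x")              # 26.4x
```

The two ratios are not strictly comparable, since they were measured on different CPUs and with different tools, but both show loading on MYRIAD taking an order of magnitude longer than on CPU.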

Regards,

Megat


Hi Mehul21,

Thank you for your question. This thread will no longer be monitored since we have provided a suggestion. If you need any additional information from Intel, please submit a new question.

Regards,

Megat
