
Hi, I am using OpenVINO 2020.4. It crashes very frequently when the inference code is called from more than one thread. The method shown below is called by each thread in parallel, and on my system (system information is below the code) it crashes at a random API call every time. It works perfectly when only one thread is running, or when the entire method is made thread-safe with a lock and KEY_CPU_THREADS_NUM is set to "1". With "3", however, it crashes even with the lock around this method. Please suggest what the issue might be.
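The method below calls `mNetwork.getInputsInfo()` and then `setPrecision()`/`setLayout()` on every call, so several threads appear to write to the same shared input-info objects at once. A common way to avoid that is to finish all configuration once, before any worker starts (in OpenVINO terms, setting input precision and layout before `LoadNetwork`), and let each call touch only read-only shared state plus its own request. The sketch below illustrates just that ownership pattern with hypothetical stand-in types (`Engine`, `Request`, `InputConfig`, `runWorkers`); it does not use the real InferenceEngine API, and it would not by itself explain the crash reported with the lock in place and KEY_CPU_THREADS_NUM set to "3".

```cpp
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical stand-in for per-network input configuration
// (the role played by mNetwork's input precision/layout in the question).
struct InputConfig {
    bool fp32 = false;
    bool nchw = false;
};

// Hypothetical stand-in for one inference request: it owns its own input
// buffer, so two threads never write into the same memory.
struct Request {
    std::vector<float> input;
    float run() const {
        float sum = 0.0f;
        for (float v : input) sum += v;  // placeholder for StartAsync()/Wait()
        return sum;
    }
};

class Engine {
public:
    // All configuration happens here, once, before any worker thread exists.
    explicit Engine(std::size_t inputSize) : inputSize_(inputSize) {
        assert(inputSize > 0);
        config_.fp32 = true;
        config_.nchw = true;
    }

    // Safe to call from many threads: it only reads shared members and
    // creates a request that is local to this call.
    float infer(float value) const {
        Request req{std::vector<float>(inputSize_, value)};
        return req.run();
    }

    const InputConfig &config() const { return config_; }

private:
    std::size_t inputSize_;
    InputConfig config_;
};

// Runs `calls` inferences on each of `threads` workers and returns the total.
// Each worker writes only to its own slot in `partial`, so there is no data race.
float runWorkers(const Engine &engine, int threads, int calls) {
    std::vector<float> partial(threads, 0.0f);
    std::vector<std::thread> workers;
    for (int t = 0; t < threads; ++t) {
        workers.emplace_back([&engine, &partial, t, calls] {
            for (int i = 0; i < calls; ++i) partial[t] += engine.infer(1.0f);
        });
    }
    for (auto &w : workers) w.join();
    float total = 0.0f;
    for (float p : partial) total += p;
    return total;
}
```

Because the shared `Engine` is never modified after construction, no lock is needed around `infer`; only per-call objects are written.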

    /* Config used for the LoadNetwork API */
    std::map<std::string, std::string> cpuConfig = {
        { InferenceEngine::PluginConfigParams::KEY_CPU_THREADS_NUM, "3" },
        { InferenceEngine::PluginConfigParams::KEY_CPU_BIND_THREAD, "NO" }
    };

/* Our Inference Method called from multiple threads symultaneously.... */

int runInference(std::vector <std::pair<float *, DetectionOutput **>>::iterator & inputStart,

std::pair<int, int> &heightWidth, int BatchSize)

{

/* Input Info from netwok */

InferenceEngine::InputsDataMap tInputInfo;

/* Get Input Info from loaded network */

tInputInfo = mNetwork.getInputsInfo();

tInputInfo.begin()->second->setPrecision(InferenceEngine::Precision::FP32);

tInputInfo.begin()->second->setLayout(InferenceEngine::Layout::NCHW);

/* Get Infernce Request */

InferenceEngine::InferRequest tInferReq = mExecutableNetwork.CreateInferRequest();;

for (auto &item : tInputInfo)

{

/* Input model string */

const std::string inputNameString = item.first;

/* Get input blob pointer */

InferenceEngine::Blob::Ptr inputBlob = tInferReq.GetBlob(inputNameString);

/* Get Input Image dimention */

InferenceEngine::SizeVector dims = inputBlob->getTensorDesc().getDims();

int tChannel = (int)dims[1];

int tHeight = (int)dims[2];

int tWidth = (int)dims[3];

/* Get moemry blob to address directly to input blob memory */

InferenceEngine::MemoryBlob::Ptr tMemBlobInputPtr = InferenceEngine::as<InferenceEngine::MemoryBlob>(inputBlob);

if (nullptr == tMemBlobInputPtr)

{

return FAILED;

}

// locked memory holder should be alive all time while access to its buffer happens

auto minputHolder = tMemBlobInputPtr->wmap();

/* Get The raw pointer */

auto data = minputHolder.as<InferenceEngine::PrecisionTrait<InferenceEngine::Precision::FP32>::value_type *>();

std::vector <std::pair<float *, DetectionOutput **>>::iterator tTempIter = inputStart;

for (int tIdx = 0; tIdx < BatchSize; ++tIdx)

{

/* Fill data intot input blob pointer */

memcpy(data, tTempIter->first, heightWidth.first * heightWidth.second * 3 * sizeof(float));

tTempIter++;

}

}

/* Run Inference */

try

{

/* Start Async Request */

tInferReq.StartAsync();

/* Wait untill reult is ready */

tInferReq.Wait(InferenceEngine::IInferRequest::WaitMode::RESULT_READY);

}

catch (InferenceEngine::details::InferenceEngineException &exp)

{

std::cout << "[InferEngine_OpenVINO] == " << exp.what() << std::endl;

return FAILED;

}

// Parse Output

// .

// .

// .

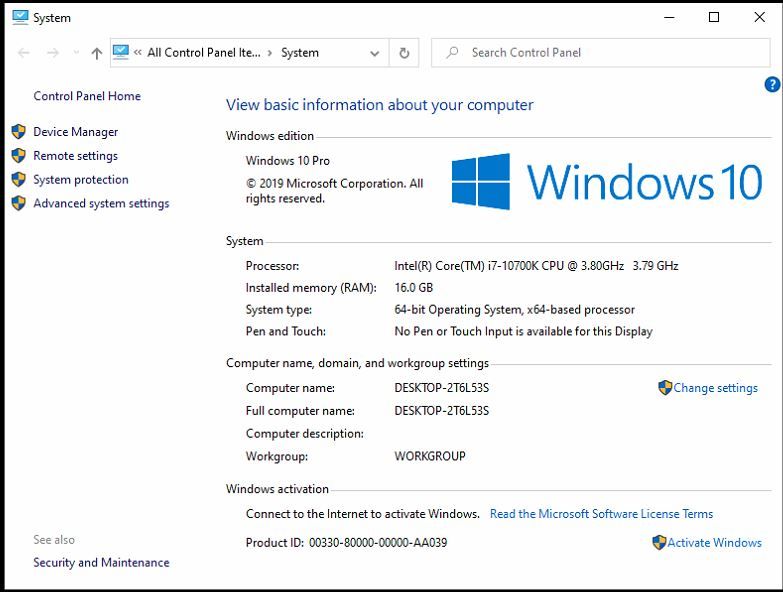

return OK;

}Here is my system information. I have tried running this code on different machines but currently crashes on this specific hardware. It has 8 Physical cores and 16 logical cores.

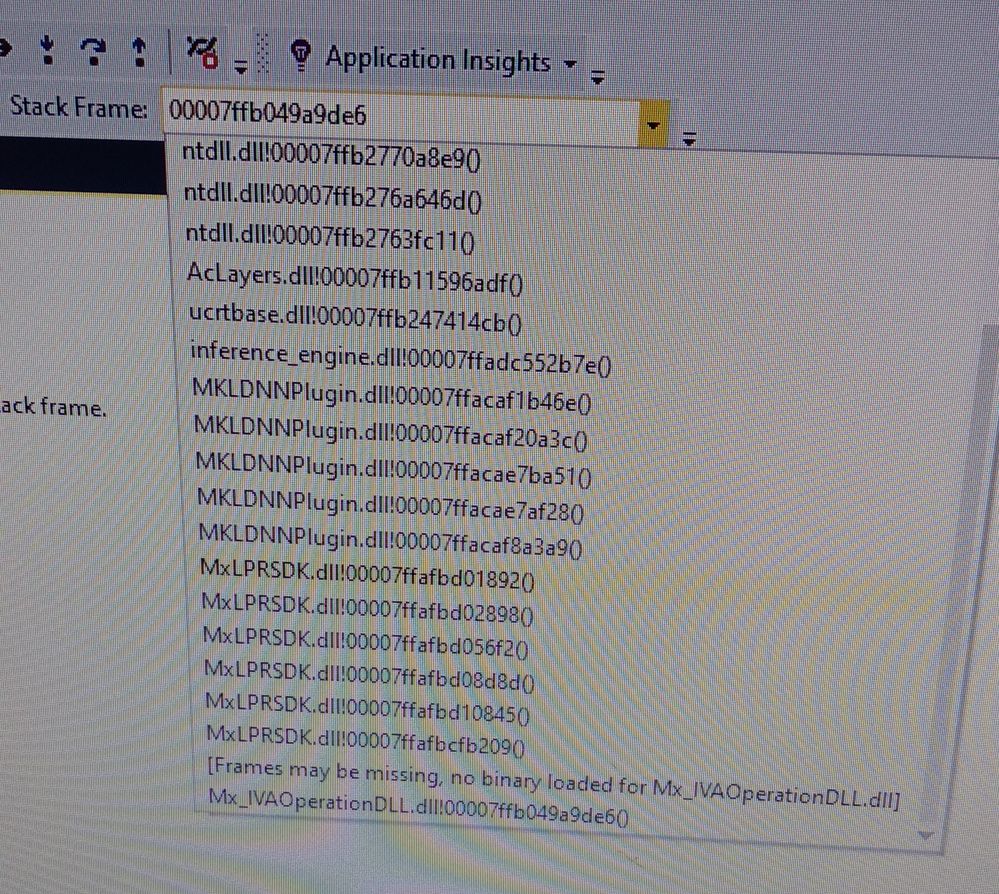

It shows that the crash originates in ntdll.dll, which is a dependency of inference_engine.dll.
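In an NCHW input blob, the images of a batch are stored one after another, so batch item `i` begins `i * C * H * W` floats into the buffer; copying every item to the start of the blob would leave only the last image in place. The helper below is a minimal sketch of that copy in standard C++, assuming each source image is already a contiguous CHW array of `float` and the destination was sized for the whole batch. `fillBatch` is a hypothetical name, not an InferenceEngine function.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Copies each image (assumed to be C*H*W floats, already in CHW order) into
// its own slot of a contiguous NCHW batch buffer. Batch item i starts at
// offset i * C * H * W, so no image overwrites another.
void fillBatch(std::vector<float> &dst, const std::vector<const float *> &images,
               std::size_t channels, std::size_t height, std::size_t width) {
    const std::size_t imageSize = channels * height * width;
    // The destination must hold the whole batch, otherwise memcpy would overrun it.
    assert(dst.size() >= images.size() * imageSize);
    for (std::size_t i = 0; i < images.size(); ++i) {
        std::memcpy(dst.data() + i * imageSize, images[i], imageSize * sizeof(float));
    }
}
```

In the method above, the destination corresponds to the buffer behind the mapped `data` pointer, and `channels`, `height`, `width` to `dims[1]`, `dims[2]`, `dims[3]`.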


Hi Hetul,

Thank you for reaching out to us. Have you reproduced this issue with any OpenVINO version other than 2020.4? If possible, please install OpenVINO toolkit 2021.1 and try running the program again. You can download the latest version of the OpenVINO toolkit at the following link:

https://software.intel.com/content/www/us/en/develop/tools/openvino-toolkit/download.html

If the issue persists, please share the following additional information:

- CMake version

- Microsoft Visual Studio version

- Full error output (screenshot or text), if available

Regards,

Adli


Hi Hetul,

Thank you for your question. If you need any additional information from Intel, please submit a new question as this thread is no longer being monitored.

Regards,

Adli
