Hi

I have a pretrained model developed with the TensorFlow framework. When I use the Model Optimizer to convert the model for FPGA inference, I get the error below. Please review the error message and let me know how to resolve this issue.

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: /home/macnica/Desktop/bkav_pretrained_model/frozen_inference_graph.pb

- Path for generated IR: /opt/intel/computer_vision_sdk_2018.5.455/deployment_tools/model_optimizer/.

- IR output name: frozen_inference_graph

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: [1,1,1,3]

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: False

- Reverse input channels: True

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Offload unsupported operations: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: None

- Operations to offload: None

- Patterns to offload: None

- Use the config file: None

Model Optimizer version: 1.5.12.49d067a0

/opt/intel/computer_vision_sdk_2018.5.455/deployment_tools/model_optimizer/mo/ops/slice.py:111: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

value = value[slice_idx]

[ ERROR ] Shape is not defined for output 0 of "Postprocessor/BatchMultiClassNonMaxSuppression/map/while/Slice_1".

[ ERROR ] Cannot infer shapes or values for node "Postprocessor/BatchMultiClassNonMaxSuppression/map/while/Slice_1".

[ ERROR ] Not all output shapes were inferred or fully defined for node "Postprocessor/BatchMultiClassNonMaxSuppression/map/while/Slice_1".

For more information please refer to Model Optimizer FAQ (<INSTALL_DIR>/deployment_tools/documentation/docs/MO_FAQ.html), question #40.

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function Slice.infer at 0x7f20449d92f0>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).

[ ERROR ] Run Model Optimizer with --log_level=DEBUG for more information.

[ ERROR ] Stopped shape/value propagation at "Postprocessor/BatchMultiClassNonMaxSuppression/map/while/Slice_1" node.

For more information please refer to Model Optimizer FAQ (<INSTALL_DIR>/deployment_tools/documentation/docs/MO_FAQ.html), question #38.

thanks and regards

Dineshkumar

Dear SUGUMAR, DINESHKUMAR,

You are using an extremely old version of OpenVINO. We are now on 2019R1.1. Please upgrade and try again.

Thanks,

Shubha

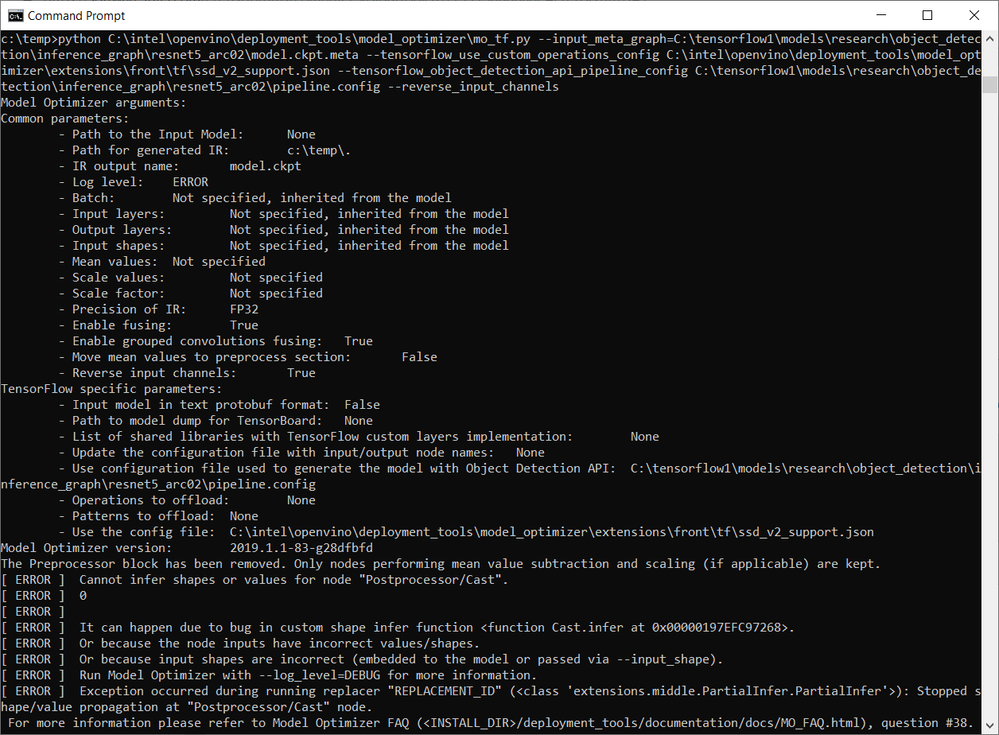

Dear Shubha

Thanks for your suggestion.

I have tried OpenVINO 2019 R1 and got the same error. The error message is attached. Please review it and let me know your feedback.

thanks and regards

Dineshkumar S

Hi,

I am taking the same approach and getting the same error while converting the pre-trained model ssd_mobilenet_v2_coco_2018_03_29. How should I deal with it?

C:\Program Files (x86)\IntelSWTools\openvino_2019.1.148\deployment_tools\model_optimizer>python mo_tf.py --input_model "C:\tensorflow2\models\research\object_detection\inference_graph\frozen_inference_graph.pb" --tensorflow_use_custom_operations_config "C:\Users\manisha\Desktop\ssd\ssd_v2_support.json" --tensorflow_object_detection_api_pipeline_config "C:\tensorflow2\models\research\object_detection\inference_graph\pipeline.config" --batch 1

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: C:\tensorflow2\models\research\object_detection\inference_graph\frozen_inference_graph.pb

- Path for generated IR: C:\Program Files (x86)\IntelSWTools\openvino_2019.1.148\deployment_tools\model_optimizer\.

- IR output name: frozen_inference_graph

- Log level: ERROR

- Batch: 1

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: False

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: C:\tensorflow2\models\research\object_detection\inference_graph\pipeline.config

- Operations to offload: None

- Patterns to offload: None

- Use the config file: C:\Users\manisha\Desktop\ssd\ssd_v2_support.json

Model Optimizer version: 2019.1.1-83-g28dfbfd

[ WARNING ]

Detected not satisfied dependencies:

tensorflow: installed: 1.12.0, required: 1.12

test-generator: installed: 0.1.2, required: 0.1.1

Please install required versions of components or use install_prerequisites script

C:\Program Files (x86)\IntelSWTools\openvino_2019.1.148\deployment_tools\model_optimizer\install_prerequisites\install_prerequisites_tf.bat

Note that install_prerequisites scripts may install additional components.

The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.

[ ERROR ] Cannot infer shapes or values for node "Postprocessor/Cast".

[ ERROR ] 0

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function Cast.infer at 0x00000169975F5950>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).

[ ERROR ] Run Model Optimizer with --log_level=DEBUG for more information.

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (<class 'extensions.middle.PartialInfer.PartialInfer'>): Stopped shape/value propagation at "Postprocessor/Cast" node.

For more information please refer to Model Optimizer FAQ (<INSTALL_DIR>/deployment_tools/documentation/docs/MO_FAQ.html), question #38.

Can anybody please help?

Dear Manisha B.,

The message "Please install required versions of components or use install_prerequisites script" in your output is not normal. Please install all prerequisites first, using the scripts under deployment_tools\model_optimizer\install_prerequisites.

Thanks,

Shubha

Hi Shubha,

I am getting the same error; please see the screen capture below. I have tested my setup with the demo script ".\demo_security_barrier_camera.bat -d MYRIAD" and it works fine. I have tried three different models with my training data, but all fail with the same error. Could you please check the error below and help?

Regards, Nadeem

Dear SUGUMAR, DINESHKUMAR

In line 57 of ssd_v2_support.json you will see this:

"Postprocessor/ToFloat"

Please replace it with "Postprocessor/Cast" and try again. Your issue actually has nothing to do with FPGA. The TensorFlow Object Detection API models have changed, and the shipped ssd_v2_support.json no longer matches them.
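For anyone who prefers not to edit the file by hand, the replacement described above could be scripted roughly as follows. This is only a sketch: the helper names `replace_string_values` and `patch_config` are hypothetical, and it assumes nothing about the layout of ssd_v2_support.json beyond it being valid JSON in which the node name appears as a complete string value.

```python
import json


def replace_string_values(node, old, new):
    """Return a copy of parsed JSON data in which every string value
    exactly equal to `old` is replaced by `new` (keys are left alone)."""
    if isinstance(node, dict):
        return {key: replace_string_values(value, old, new)
                for key, value in node.items()}
    if isinstance(node, list):
        return [replace_string_values(item, old, new) for item in node]
    if isinstance(node, str) and node == old:
        return new
    return node


def patch_config(src_path, dst_path,
                 old="Postprocessor/ToFloat", new="Postprocessor/Cast"):
    """Read the JSON config at `src_path`, swap the node name, and write
    the result to `dst_path` so the shipped file stays untouched."""
    with open(src_path, encoding="utf-8") as handle:
        config = json.load(handle)
    patched = replace_string_values(config, old, new)
    with open(dst_path, "w", encoding="utf-8") as handle:
        json.dump(patched, handle, indent=4)
    return patched
```

Calling `patch_config("ssd_v2_support.json", "ssd_v2_support_cast.json")` (both file names are placeholders) would write a patched copy, which can then be passed to `--tensorflow_use_custom_operations_config` in place of the original.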

Let me know if this works for you.

Thanks,

Shubha

Hello, I have the exact same issue. Unfortunately, your proposed file change did not work for me, although it did change the original error to:

[ ERROR ] Exception occurred during running replacer "ObjectDetectionAPISSDPostprocessorReplacement" (<class 'extensions.front.tf.ObjectDetectionAPI.ObjectDetectionAPISSDPostprocessorReplacement'>): The matched sub-graph contains network input node "image_tensor".

For more information please refer to Model Optimizer FAQ (<INSTALL_DIR>/deployment_tools/documentation/docs/MO_FAQ.html), question #75.

It would be great if you could help me.

Kind regards,

Lorenz

Dear Stangier, Lorenz,

Which version of TensorFlow are you using? Can you kindly upgrade to 1.13 and try again?
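A quick way to answer the version question is to ask Python which TensorFlow package is installed in the environment that runs the Model Optimizer. The sketch below is illustrative only: the helper names are hypothetical, it assumes Python 3.8 or later (for `importlib.metadata`), and it assumes the package is installed under the distribution name `tensorflow`.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def version_tuple(version):
    """Turn '1.13.0rc1' into (1, 13, 0), keeping only leading digits per part."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(version, minimum):
    """True if `version` is at least `minimum`, compared numerically."""
    return version_tuple(version) >= version_tuple(minimum)


if __name__ == "__main__":
    found = installed_version("tensorflow")
    if found is None:
        print("tensorflow is not installed in this environment")
    else:
        print("tensorflow", found, "meets 1.13:", meets_minimum(found, "1.13"))
```

Run it with the same Python interpreter that launches mo_tf.py, since a different interpreter may see a different set of installed packages.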

Thanks,

Shubha

Great, the proposed fix (replacing "Postprocessor/ToFloat" with "Postprocessor/Cast") works for me.

Many thanks Shubha for the support.

Dear Dar, Nadeem,

So glad it worked for you! Thanks for reporting back your success and sharing it with the OpenVINO community.

Shubha
