
I have been trying to hunt down the root cause of a bug we saw in prod: running inference with a classifier model using the MYRIAD plugin on NCS 2 hardware periodically produces NaN outputs, but the same model with the CPU plugin does not. Initially I assumed that differences in FP16/FP32 precision on the activations could be causing numeric instability on the NCS 2. I have now found that I can reproduce this bug just by running parallel inference requests with the async API. With the exact same input and the same model, I periodically get results that appear to be corrupted (detected by the presence of NaN values, but there may be other bad outputs I have not detected). I have been able to reproduce this on two different hosts with the following code snippet:

```python
import numpy as np
from openvino.inference_engine import IECore, StatusCode
import pickle
import warnings

core = IECore()

base_dir = '.'
openvino_xml_path = base_dir + '/intel_model/saved_model.xml'
output_path_fmt = base_dir + "/nandata/nandata_{}.p"
openvino_output_map = {
    "StatefulPartitionedCall/myriad_bug/classifier_out/Sigmoid": "classifier_out"
}

batch_size = 16
num_inference_requests = 4
input_length = 10752
dummy_input = {'audio_in': np.random.normal(size=[batch_size, 1, input_length, 1])}
poll_ms = 1  # add a short wait to prevent the polling loop spinning unnecessarily fast

openvino_plugin_name = "MYRIAD"
# Same issue when running multiple NCS 2 devices via the MULTI plugin:
# myriads = list(dev for dev in core.available_devices if dev.startswith('MYRIAD'))
# assert len(myriads) > 0, 'No myriad X devices found!'
# openvino_plugin_name = "MULTI:" + ','.join(myriads)

network = core.read_network(
    model=openvino_xml_path,
)
network.batch_size = batch_size

executable_network = core.load_network(
    network,
    device_name=openvino_plugin_name,
    num_requests=num_inference_requests,
)

# prime the requests
for req in executable_network.requests:
    req.async_infer(dummy_input)

# start hammering
j = 0
while True:
    for i, req in enumerate(executable_network.requests):
        infer_status = req.wait(poll_ms if i == 0 else 0)
        if infer_status == StatusCode.RESULT_NOT_READY:
            continue
        if infer_status != StatusCode.OK:
            warnings.warn(f'Infer request {j} returned {infer_status}')
            continue

        # make sure it's ready
        # (I found in the past - around R2019 - this was necessary when using the
        # C++ API, may not be required in python or with newer OpenVINO)
        req.wait()

        for k, dispname in openvino_output_map.items():
            res = req.output_blobs[k].buffer
            if np.any(np.isnan(res)):
                print('NaN triggered', j, i, dispname, res)
                with open(output_path_fmt.format(j), 'wb') as f:
                    pickle.dump({k: req.output_blobs[k].buffer for k in openvino_output_map}, f)
                break

        # start new req
        if j % 10 == 0:
            print(j)
        j += 1
        req.async_infer(dummy_input)
```

My manager has told me not to attach the model file publicly, but I have permission to provide it privately to an Intel employee over email or other means.

If I had to speculate, it could be that the input shape we are using is an edge case compared to typical image convnets, as it took some trial and error to find a minimal model architecture that allows me to reproduce the bug.

I'm using the openvino-dev package from PyPI with Python 3.9:

- `openvino-2021.4.2-3976-cp39-cp39-manylinux2014_x86_64.whl`
- `openvino_dev-2021.4.2-3976-py3-none-any.whl`
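As noted above, every request receives the exact same input, so a NaN check only catches one kind of corruption. A somewhat stricter check is sketched below, assuming a trusted reference output for the same input is available (for example, computed once with the CPU plugin, which does not show the problem). Because the output layer is a Sigmoid, it also flags values outside [0, 1] and any drift from the reference beyond a tolerance. The function name `output_problems` and the default `atol` are illustrative choices, not part of OpenVINO, and the tolerance would need tuning for FP16 on MYRIAD.

```python
import math
from typing import List, Optional, Sequence


def output_problems(
    output: Sequence[float],
    reference: Optional[Sequence[float]] = None,
    atol: float = 1e-3,
) -> List[str]:
    """Return reasons why a classifier output looks corrupted (empty list = looks fine).

    output:    flattened output values from one infer request
    reference: optional trusted output for the same input (assumed, e.g. from the CPU plugin)
    atol:      illustrative absolute tolerance; would need tuning for FP16 on MYRIAD
    """
    problems = []
    if any(math.isnan(v) for v in output):
        problems.append("contains NaN")
    # The output layer is a Sigmoid, so valid values lie in [0, 1].
    # Comparisons with NaN are False, so NaNs are not counted twice; +/-inf is caught here.
    if any(v < 0.0 or v > 1.0 for v in output):
        problems.append("outside [0, 1]")
    if reference is not None:
        if len(reference) != len(output):
            problems.append("length mismatch")
        else:
            # Same model and same input: finite values should agree up to atol.
            max_diff = max(
                (abs(a - b) for a, b in zip(output, reference)
                 if math.isfinite(a) and math.isfinite(b)),
                default=0.0,
            )
            if max_diff > atol:
                problems.append(f"max abs diff {max_diff:.3g} exceeds atol {atol}")
    return problems


reference = [0.12, 0.87, 0.50]
print(output_problems([0.12, 0.87, 0.50], reference))          # -> []
print(output_problems([0.12, float("nan"), 0.50], reference))  # -> ['contains NaN']
print(output_problems([0.12, 0.20, 0.50], reference))          # -> ['max abs diff 0.67 exceeds atol 0.001']
```

Inside the polling loop of the repro script, `output` could be `req.output_blobs[k].buffer.ravel().tolist()`, with `reference` holding the same output computed once on the CPU plugin.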


Hi EdBordin,

Thanks for reaching out to us.

You are welcome to send your model file and the nandata_{}.p file privately to my email:

If possible, do also share your original model (before converting to IR) with me.

Regards,

Peh


Hi EdBordin,

Thanks for sharing your model with us.

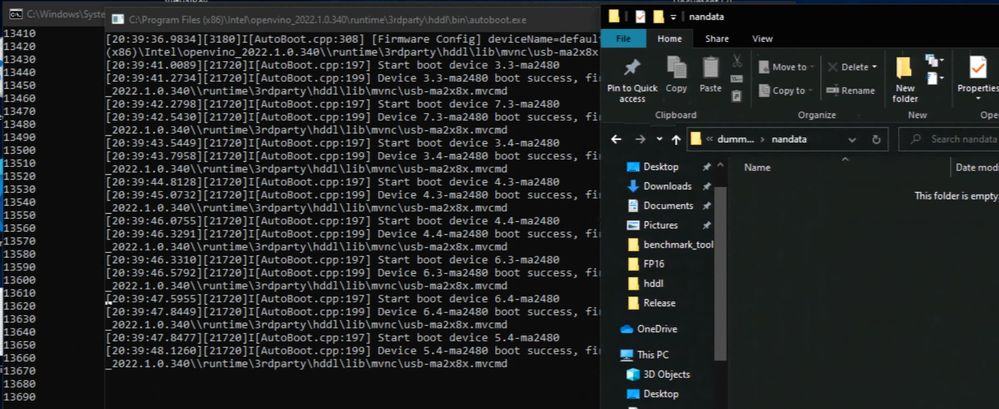

I was able to reproduce the issue on my end.

When loading the network with a single infer request, the NaN output values no longer appeared.

This might be due to the limited resources and capabilities of the Intel® Neural Compute Stick 2.

Regards,

Peh


Hi Peh,

If this is due to a hardware limitation, I would expect either the model compiler or the MYRIAD plugin to detect it and raise an error, rather than silently producing corrupted data. Failing that, it would be very helpful if you could work with us to isolate which limitation we are hitting, so we can avoid it manually in future models.

Using one request effectively degrades performance to that of the synchronous API. We're trying to use this chip for its intended purpose of real-time inference at the edge, so that's not really a great solution. If we can't resolve this, we may have to switch to a different hardware solution.

Regards,

Ed


Hi EdBordin,

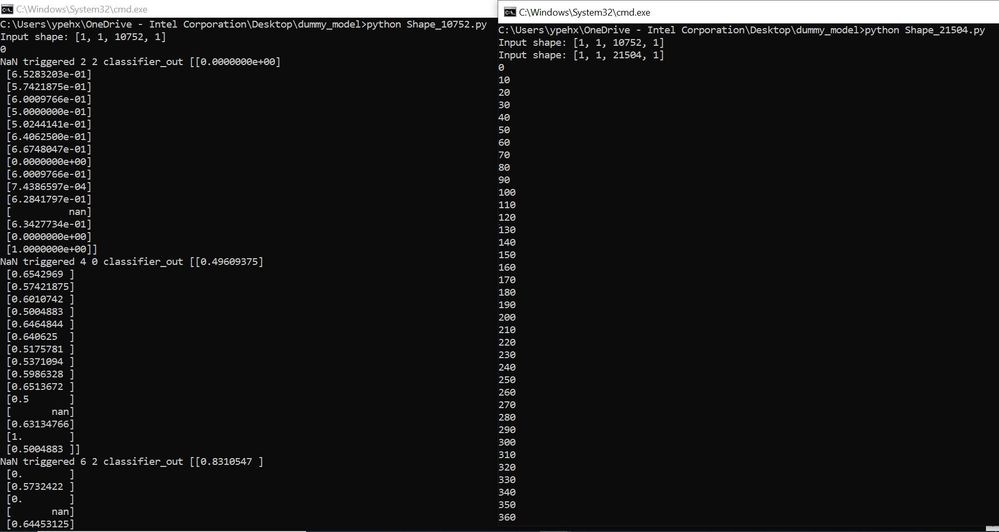

I’ve validated that when running the test script with the HDDL plugin (8 MYRIAD devices), the outputs are clean (no NaN values).

I also tried a few ways to get rid of the NaN output values when running the test script on the Intel® Neural Compute Stick 2. Surprisingly, enlarging the model input shape also produced clean outputs. However, changing the model input shape may significantly affect the model's accuracy, so this proposed workaround needs to be validated on your side.

Changes made to the test script:

```python
input_length = 21504

input_layer = next(iter(network.input_info))
print(f"Input shape: {network.input_info[input_layer].tensor_desc.dims}")
network.reshape({input_layer: [1, 1, 21504, 1]})
print(f"Input shape: {network.input_info[input_layer].tensor_desc.dims}")
```
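One thing to watch when trying this: the `network.reshape` call above sets the batch dimension to 1, while the original script builds `dummy_input` with `batch_size = 16` and also sets `network.batch_size = batch_size`. Depending on where these lines are placed, the network and the dummy input may end up with different batch sizes. A minimal pre-flight check in plain Python could catch that before `async_infer` is called; `check_input_shape` is a hypothetical helper, not part of the OpenVINO API.

```python
from typing import Sequence


def check_input_shape(network_dims: Sequence[int], array_shape: Sequence[int]) -> None:
    """Raise ValueError if an input array's shape differs from the network's input dims.

    network_dims: e.g. network.input_info[input_layer].tensor_desc.dims (a list of ints)
    array_shape:  e.g. dummy_input['audio_in'].shape (a tuple of ints)
    """
    expected = [int(d) for d in network_dims]
    actual = [int(d) for d in array_shape]
    if expected != actual:
        # Report each mismatching axis so a batch-size mix-up is obvious at a glance.
        diffs = [
            f"axis {axis}: network {e} vs input {a}"
            for axis, (e, a) in enumerate(zip(expected, actual))
            if e != a
        ]
        if len(expected) != len(actual):
            diffs.append(f"rank: network {len(expected)} vs input {len(actual)}")
        raise ValueError("input shape mismatch: " + "; ".join(diffs))


# Dims after the reshape above vs. an input still built with batch_size = 16.
try:
    check_input_shape([1, 1, 21504, 1], (16, 1, 21504, 1))
except ValueError as err:
    print(err)  # prints: input shape mismatch: axis 0: network 1 vs input 16
```

Building the dummy input from the network itself, e.g. `np.random.normal(size=network.input_info[input_layer].tensor_desc.dims)`, would avoid the mismatch altogether.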

Regards,

Peh


Hi EdBordin,

This thread will no longer be monitored since we have provided a suggestion and solution. If you need any additional information from Intel, please submit a new question.

Regards,

Peh
