Hey,

I am trying to optimise a TensorFlow-trained model based on the Object Detection model zoo.

Retrain MaskRCNN network => OK

Export a frozen model => OK

Use Model optimiser (mo.py) with command => OK

python .\mo_tf.py --input_model "<path>\frozen_inference_graph.pb" --tensorflow_use_custom_operations_config extensions/front/tf/mask_rcnn_support_api_v1.11.json --tensorflow_object_detection_api_pipeline_config '<path>\pipeline.config'

    Common parameters:
        - Path to the Input Model: <path>\frozen_inference_graph.pb
        - Path for generated IR: <path>
        - IR output name: frozen_inference_graph
        - Log level: ERROR
        - Batch: Not specified, inherited from the model
        - Input layers: Not specified, inherited from the model
        - Output layers: Not specified, inherited from the model
        - Input shapes: Not specified, inherited from the model
        - Mean values: Not specified
        - Scale values: Not specified
        - Scale factor: Not specified
        - Precision of IR: FP32
        - Enable fusing: True
        - Enable grouped convolutions fusing: True
        - Move mean values to preprocess section: False
        - Reverse input channels: False
    TensorFlow specific parameters:
        - Input model in text protobuf format: False
        - Offload unsupported operations: False
        - Path to model dump for TensorBoard: None
        - List of shared libraries with TensorFlow custom layers implementation: None
        - Update the configuration file with input/output node names: None
        - Use configuration file used to generate the model with Object Detection API: <path>\pipeline.config
        - Operations to offload: None
        - Patterns to offload: None
        - Use the config file: %Intel_SDK_ModelOptimiser%\extensions\front\tf\mask_rcnn_support_api_v1.11.json
    Model Optimizer version: 1.5.12.49d067a0
    [ WARNING ] Some warning about input shape[WARNING]
    [ SUCCESS ] Generated IR model.
    [ SUCCESS ] XML file: <path>\frozen_inference_graph.xml
    [ SUCCESS ] BIN file: <path>\frozen_inference_graph.bin
    [ SUCCESS ] Total execution time: 24.00 seconds.

Try to create a custom inference engine in python => OK

    # Imports assumed (not shown in the original post)
    from openvino.inference_engine import IENetwork, IEPlugin
    import logging as log

    # Loading network
    network = IENetwork(model=MODEL_XML, weights=MODEL_BIN)
    network.add_outputs("detection_output")
    input_wrapper = next(iter(network.inputs))
    n, c, h, w = network.inputs[input_wrapper].shape
    out_wrapper = next(iter(network.outputs))
    plugin = IEPlugin(device="CPU")
    log.info("Loading CPU Extension...")
    plugin.add_cpu_extension(CPU_EXTPATH)
    supported_layers = plugin.get_supported_layers(network)
    not_supported_layers = [l for l in network.layers.keys() if l not in supported_layers]
    if len(not_supported_layers) != 0:
        log.error("Not Supported Layers : " + str(not_supported_layers))
    Execution_Network = plugin.load(network=network)
    del network
    log.info("Network Loaded")

    # Inference
    # image_to_tensor resizes the image to the network's input dimensions
    i0 = image_to_tensor(im, c, h, w)
    res = Execution_Network.infer(inputs={input_wrapper: i0})
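The `image_to_tensor` helper is called above but not shown. A minimal sketch of what such a helper might do, assuming the image arrives as an HWC NumPy array and the network expects NCHW input. The nearest-neighbour resize is an assumption made to keep the example NumPy-only; the real helper may well use another interpolation.

```python
import numpy as np

def image_to_tensor(image, c, h, w):
    """Hypothetical stand-in for the helper above: HWC image -> NCHW float32 tensor."""
    src_h, src_w = image.shape[:2]
    # Nearest-neighbour resize via integer index arrays (assumption, see lead-in).
    rows = np.arange(h) * src_h // h
    cols = np.arange(w) * src_w // w
    resized = image[rows[:, None], cols[None, :]]
    if resized.shape[2] != c:
        raise ValueError("expected %d channels, got %d" % (c, resized.shape[2]))
    # Move channels first (HWC -> CHW), then add the batch dimension.
    chw = resized.transpose(2, 0, 1)
    return chw[np.newaxis, ...].astype(np.float32)
```

Note that this sketch keeps the channel order of the input untouched, so a BGR image stays BGR in the tensor.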

Extract BBox, Masks and draw it => OK (I used object_detection methods)

Inference is faster, but I noticed that the network detects far fewer objects through OpenVINO than directly with TensorFlow. What could be the reason?

The following images come from inference with the original model from the TensorFlow zoo; the issue is the same.

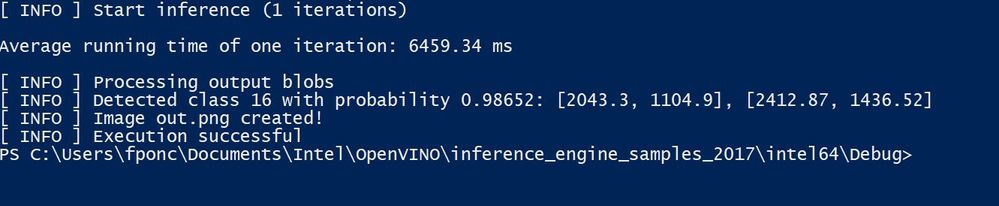

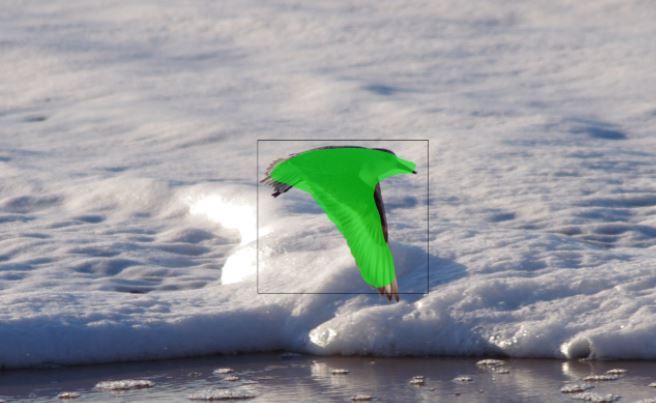

Open Vino :

TensorFlow :

As you can see, there are big differences:

- Some classes are not detected

- Probabilities are much lower than with TF
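One way to put numbers on these two differences is to match the detections of both runs by class and box overlap. The sketch below is only an illustration: the dict layout (`box`, `label`, `score`), the `[ymin, xmin, ymax, xmax]` box order and the 0.5 threshold are assumptions, not something taken from either framework's output format.

```python
def iou(box_a, box_b):
    """Intersection over union of two [ymin, xmin, ymax, xmax] boxes."""
    ymin = max(box_a[0], box_b[0])
    xmin = max(box_a[1], box_b[1])
    ymax = min(box_a[2], box_b[2])
    xmax = min(box_a[3], box_b[3])
    inter = max(0.0, ymax - ymin) * max(0.0, xmax - xmin)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def compare_detections(reference, candidate, iou_threshold=0.5):
    """Match each reference detection to the best-overlapping candidate of the same class.

    Returns (matched, missed): matched holds (reference, candidate, score_delta)
    tuples, missed holds the reference detections with no counterpart.
    Greedy matching: one candidate may be matched more than once.
    """
    matched, missed = [], []
    for ref in reference:
        same_class = [c for c in candidate if c["label"] == ref["label"]]
        best = max(same_class, key=lambda c: iou(ref["box"], c["box"]), default=None)
        if best is not None and iou(ref["box"], best["box"]) >= iou_threshold:
            matched.append((ref, best, best["score"] - ref["score"]))
        else:
            missed.append(ref)
    return matched, missed
```

Calling `compare_detections(tf_detections, openvino_detections)` then lists the classes that went missing and the score drop for each detection that survived.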

I read this in the OpenVINO R5 introduction, about the Inference Engine:

Python* API support is now gold. This means that it is validated on all supported platforms, and the API is not going to be modified. New samples were added for the Python API.

But I'm not sure whether I should expect everything to work through this API.

Thanks for your help !

After reading the documentation, I noticed that the inceptionv2 model needs mean_value=[127.5,127.5,127.5], but there is nothing about inceptionv2mask_rcnn. I also noticed that the problem could come from resizing, which should keep the aspect ratio.

Then I changed the command that generates the optimised model, with a fixed input_shape and mean_value:
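For the aspect-ratio point, here is a rough sketch of how a target size can be computed so that the short side reaches a minimum while the long side stays under a maximum. It follows my understanding of the `keep_aspect_ratio_resizer` option (`min_dimension`, `max_dimension`) in an Object Detection API `pipeline.config`; whether this model's config uses that resizer is an assumption, and the function name is made up for illustration.

```python
def keep_aspect_ratio_size(height, width, min_dimension, max_dimension):
    """Return (new_height, new_width) preserving the aspect ratio.

    The short side is scaled up to min_dimension unless that would push the
    long side past max_dimension; in that case the long side is pinned to
    max_dimension instead.
    """
    scale = min_dimension / min(height, width)
    if round(max(height, width) * scale) > max_dimension:
        scale = max_dimension / max(height, width)
    return round(height * scale), round(width * scale)
```

A fixed `--input_shape` only matches this behaviour for images whose aspect ratio equals that of the chosen shape; other images would have to be padded or distorted before inference.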

python "%INTEL_SDK_ModelOpt%\mo_tf.py" --input_model ".\2019_01_04_mask\frozen_inference_graph.pb" --tensorflow_use_custom_operations_config "%INTEL_SDK_ModelOpt%\extensions\front\tf\mask_rcnn_support_api_v1.11.json" --tensorflow_object_detection_api_pipeline_config ".\2019_01_04_mask\pipeline.config" --input_shape=[1,576,768,3] --mean_value=[127.5,127.5,127.5] --scale_value=[1,1,1]
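To make the effect of the mean and scale flags concrete: as far as I know, the Model Optimizer subtracts the mean and then divides by the scale, per channel. The small sketch below only reproduces that arithmetic; `preprocess_pixel` is a hypothetical name, not part of any OpenVINO API.

```python
def preprocess_pixel(value, mean=127.5, scale=1.0):
    """Apply (value - mean) / scale to a single channel value."""
    return (value - mean) / scale

# With mean 127.5 and scale 1, the 0..255 range becomes -127.5..127.5.
for raw in (0, 127.5, 255):
    print(raw, "->", preprocess_pixel(raw))

# A scale of 127.5 would instead map 0..255 onto -1..1.
print(preprocess_pixel(255, mean=127.5, scale=127.5))
```

Whether this particular model expects either range is not settled in this thread, so treat the values as something to experiment with rather than a recommendation.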

But nothing has changed in the classification so far.

It is as if the model doesn't know the "Type 3" class.

Does anyone have an idea about this issue?

I tried the Mask R-CNN demo with exactly the same model.

It seems to work fine.

I see that the C++ code uses a CNNNetReader class that is not available in Python.

Is this a problem with the Python API support?

Hi @Francois,

I'm not quite sure, but can you rerun the Model Optimizer with the --reverse_input_channels argument? Adding it just improved my results.

My command:

python3 mo_tf.py --input_model /home/ben/IG2/frozen_inference_graph.pb --tensorflow_use_custom_operations_config extensions/front/tf/mask_rcnn_support.json --tensorflow_object_detection_api_pipeline_config /home/ben/IG2/pipeline.config -b 1 --output_dir=/home/ben/ --reverse_input_channels
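Why this flag can matter, as a sketch: OpenCV loads images in BGR order, while TensorFlow models are typically trained on RGB. If the inference script feeds BGR data to an IR converted without the flag, the red and blue channels are effectively swapped. The snippet below only illustrates the swap itself with NumPy; that the script in question reads images with OpenCV is an assumption.

```python
import numpy as np

def reverse_channels(image):
    """Reverse the channel axis of an HWC image (BGR <-> RGB)."""
    return image[..., ::-1]

# A single pixel that is pure blue in OpenCV's BGR order.
bgr = np.array([[[255, 0, 0]]], dtype=np.uint8)
rgb = reverse_channels(bgr)
print(rgb[0, 0].tolist())  # [0, 0, 255]
```

With `--reverse_input_channels` the converted model performs this reversal itself, so the caller can pass the BGR image unchanged.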

P.S. What is your inference time? For me on CPU it is about 4454 ms, which is quite long (with OpenCV I remember it being just 2 s).
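For comparing timings like these, it helps to measure the same way on both sides. A small sketch, assuming the inference call can be wrapped in a zero-argument callable; `time_inference` and its parameters are hypothetical names.

```python
import time

def time_inference(infer, runs=10, warmup=1):
    """Return the mean wall-clock time of infer() in milliseconds."""
    # Warm-up calls are excluded, since the first run often includes one-off setup.
    for _ in range(warmup):
        infer()
    start = time.perf_counter()
    for _ in range(runs):
        infer()
    return (time.perf_counter() - start) * 1000.0 / runs
```

With the Python snippet from the first post this could be called as `time_inference(lambda: Execution_Network.infer(inputs={input_wrapper: i0}))`.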

Hope that helps,

Thank you

Hi @Francois, I believe the --reverse_input_channels can solve your problem. I have just tested the model with both code in C++ and Python and the result is exactly the same (as good as I expected). Try it and tell me the news :D . I am happy now that I can continue with Python.

Hope that helps,

Hoa

Hi @Hoa,

Thanks for your advice! It solved my problem!

I don't know why, but the C++ code using OpenCV 4.0.1 expects a different export than the Python code.

For OpenCV :

python "%INTEL_SDK_ModelOpt%\mo_tf.py" --input_model "frozen_inference_graph.pb" --tensorflow_use_custom_operations_config "mask_rcnn_support_api_v1.11.json" --tensorflow_object_detection_api_pipeline_config "pipeline.config" --input_shape=[1,576,768,3] --mean_value=[127.5,127.5,127.5] --scale_value=[1,1,1]

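    Mat IEEngine::ProcessImage(Mat& Image)
    {
        // Fifth argument (swapRB) is true: blobFromImage swaps the B and R channels.
        // Sixth argument (crop) is false.
        Mat blob = dnn::blobFromImage(Image, 1.0, Size(NewCols, NewRows), Scalar(), true, false);
        this->net.setInput(blob);

        // Runs the forward pass to get output from the output layers
        vector<Mat> outs;
        std::vector<String> outNames = getOutputsNames(net);
        net.forward(outs, outNames);

        postprocess(Image, outs);
        return Image;  // assumed: the original snippet had no return statement
    }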

With Python :

python "%INTEL_SDK_ModelOpt%\mo_tf.py" --input_model "frozen_inference_graph.pb" --tensorflow_use_custom_operations_config "mask_rcnn_support_api_v1.11.json" --tensorflow_object_detection_api_pipeline_config "pipeline.config" -b 1 --reverse_input_channels
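A likely explanation for the two different exports: the C++ code passes `true` as the fifth argument of `blobFromImage` (swapRB), so OpenCV already swaps the channels before inference, whereas the Python script presumably feeds the image as loaded. The sketch below encodes that rule of thumb as a small command builder. The function name and the `caller_swaps_channels` parameter are hypothetical, and only flags that appear in this thread are used.

```python
def build_mo_args(frozen_graph, support_config, pipeline_config, caller_swaps_channels):
    """Build the mo_tf.py argument list for a Mask R-CNN export.

    caller_swaps_channels: True when the inference code already swaps the
    B and R channels (e.g. blobFromImage with swapRB=true), False when it
    passes the image in its loaded channel order.
    """
    args = [
        "python", "mo_tf.py",
        "--input_model", frozen_graph,
        "--tensorflow_use_custom_operations_config", support_config,
        "--tensorflow_object_detection_api_pipeline_config", pipeline_config,
        "-b", "1",
    ]
    # Reverse channels inside the IR only if nobody does it beforehand,
    # otherwise the swap would be applied twice.
    if not caller_swaps_channels:
        args.append("--reverse_input_channels")
    return args
```

The resulting list can be handed to `subprocess.run`; swapping in exactly one place (caller or IR) is the point of the design.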

Thank you very much for your help !

Yes, it helped! I can now test easily from Python code!
