I ran the Text Detection C++ Demo with the text-recognition-0016 model using the following command:

./text_detection_demo -i 1.png -m_td text_detection/text.xml \
  -m_tr text_recog_ori_32/text-recognition-0016-encoder.xml -tr_pt_first \
  -m_tr_ss "?0123456789abcdefghijklmnopqrstuvwxyz" \
  -tr_o_blb_nm "logits" -dt simple -lower

I got this error:

[ INFO ] OpenVINO

[ INFO ] version: 2022.1.0

[ INFO ] build: 2022.1.0-7019-cdb9bec7210-releases/2022/1

[ INFO ] Reading model: text_detection/text.xml

[ INFO ] Model name: saved_model

[ INFO ] Inputs:

[ INFO ] Cast_1:0, f32, {1,3,512,512}, [...]

[ INFO ] Outputs:

[ INFO ] detections, f32, {1,1,100,7}, [...]

[ INFO ] Preprocessor configuration:

[ INFO ] Input "Cast_1:0":

User's input tensor: {1,512,512,3}, [N,H,W,C], u8

Model's expected tensor: {1,3,512,512}, [N,C,H,W], f32

Implicit pre-processing steps (2):

convert type (f32): ({1,512,512,3}, [N,H,W,C], u8) -> ({1,512,512,3}, [N,H,W,C], f32)

convert layout [N,C,H,W]: ({1,512,512,3}, [N,H,W,C], f32) -> ({1,3,512,512}, [N,C,H,W], f32)

[ INFO ] The Text Detection model text_detection/text.xml is loaded to CPU

[ INFO ] Device: CPU

[ INFO ] Number of streams: 1

[ INFO ] Number of threads: AUTO

[ INFO ] Reading model: text_recog_ori_32/text-recognition-0016-encoder.xml

[ INFO ] Model name: torch-jit-export

[ INFO ] Inputs:

[ INFO ] imgs, f32, {1,1,64,256}, [N,C,H,W]

[ INFO ] Outputs:

[ INFO ] features, f32, {1,36,1024}, [...]

[ INFO ] decoder_hidden, f32, {1,1,1024}, [...]

[ INFO ] Preprocessor configuration:

[ INFO ] Input "imgs":

User's input tensor: {1,64,256,1}, [N,H,W,C], u8

Model's expected tensor: {1,1,64,256}, [N,C,H,W], f32

Implicit pre-processing steps (2):

convert type (f32): ({1,64,256,1}, [N,H,W,C], u8) -> ({1,64,256,1}, [N,H,W,C], f32)

convert layout [N,C,H,W]: ({1,64,256,1}, [N,H,W,C], f32) -> ({1,1,64,256}, [N,C,H,W], f32)

[ INFO ] The Composite Text Recognition model text_recog_ori_32/text-recognition-0016-encoder.xml is loaded to CPU

[ INFO ] Device: CPU

[ INFO ] Number of streams: 1

[ INFO ] Number of threads: AUTO

[ INFO ] Reading model: text_recog_ori_32/text-recognition-0016-decoder.xml

[ INFO ] Model name: torch-jit-export

[ INFO ] Inputs:

[ INFO ] hidden, f32, {1,1,1024}, [N,C,D]

[ INFO ] features, f32, {1,36,1024}, [N,C,D]

[ INFO ] decoder_input, f32, {1}, [N]

[ INFO ] Outputs:

[ INFO ] decoder_hidden, f32, {1,1,1024}, [...]

[ INFO ] decoder_output, f32, {1,40}, [...]

[ INFO ] The Composite Text Recognition model text_recog_ori_32/text-recognition-0016-decoder.xml is loaded to CPU

[ INFO ] Device: CPU

[ INFO ] Number of streams: 1

[ INFO ] Number of threads: AUTO

[ ERROR ] Failed to determine output blob names

Please clarify this error.

Hi boe,

Thank you for reaching out to us.

The error you are facing is caused by a text detection model that is incompatible with the demo. Your log shows an input of {1,3,512,512} and a single output, detections, of shape {1,1,100,7}; these shapes differ from those of the validated, supported models.
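As a rough illustration of how a shape mismatch can surface as "Failed to determine output blob names", here is a minimal Python sketch. It rests on an assumption that is not confirmed anywhere in this thread: that the demo identifies the two outputs of a PixelLink-style detector by their channel count (2 for text/no-text logits, 16 for link logits). The function name find_pixellink_outputs, the output names link_logits and segm_logits, and the reference shapes are hypothetical; only the detections shape is taken from the log above.

```python
from typing import Dict, Optional, Tuple

Shape = Tuple[int, ...]

# Assumption (not taken from the demo source): a PixelLink-style detector has
# two 4-D outputs whose channel dimension is 2 (text/no-text) and 16 (links).
SEGM_CHANNELS = 2
LINK_CHANNELS = 16


def channel_candidates(shape: Shape) -> Tuple[int, ...]:
    """Return the axis sizes that could be channels in NCHW or NHWC layout."""
    if len(shape) != 4:
        return ()
    return (shape[1], shape[3])


def find_pixellink_outputs(outputs: Dict[str, Shape]) -> Optional[Tuple[str, str]]:
    """Return (segm_name, link_name), or None if either output is missing."""
    segm_name = None
    link_name = None
    for name, shape in outputs.items():
        channels = channel_candidates(shape)
        if SEGM_CHANNELS in channels:
            segm_name = name
        elif LINK_CHANNELS in channels:
            link_name = name
    if segm_name is None or link_name is None:
        return None
    return segm_name, link_name


# Output reported in the log above for text_detection/text.xml.
custom_model = {"detections": (1, 1, 100, 7)}

# Hypothetical reference outputs, for illustration only.
reference_model = {
    "link_logits": (1, 16, 192, 320),
    "segm_logits": (1, 2, 192, 320),
}

for label, outputs in (("custom", custom_model), ("reference", reference_model)):
    found = find_pixellink_outputs(outputs)
    if found is None:
        print(f"{label}: no matching output pair found")
    else:
        print(f"{label}: segmentation={found[0]}, links={found[1]}")
```

Under that assumption, a model with a single {1,1,100,7} output never yields a matching pair, so a check of this kind would fail no matter which recognition model is passed alongside it.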

For your information, the Text Detection C++ Demo has been validated only with the text detection models listed in Text Detection Demo Supported Models.

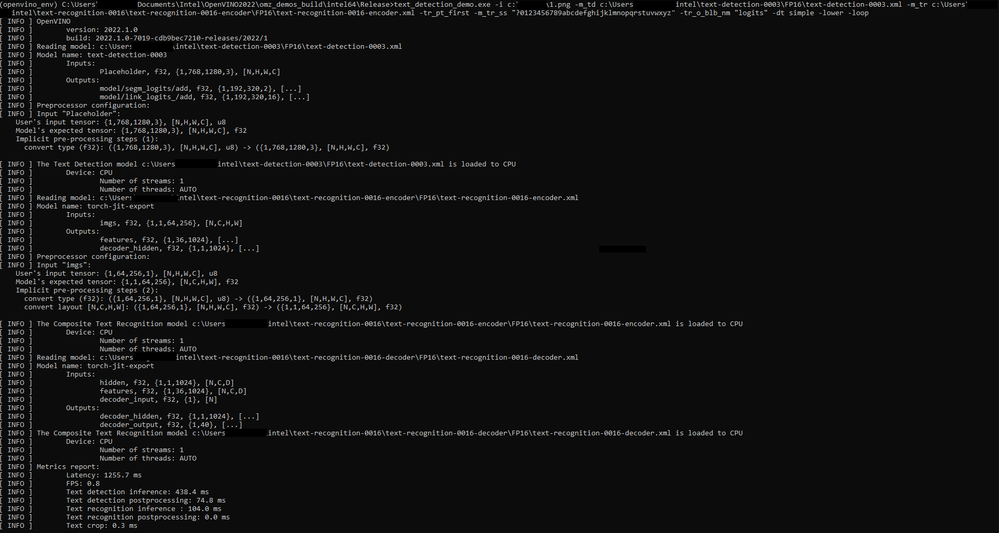

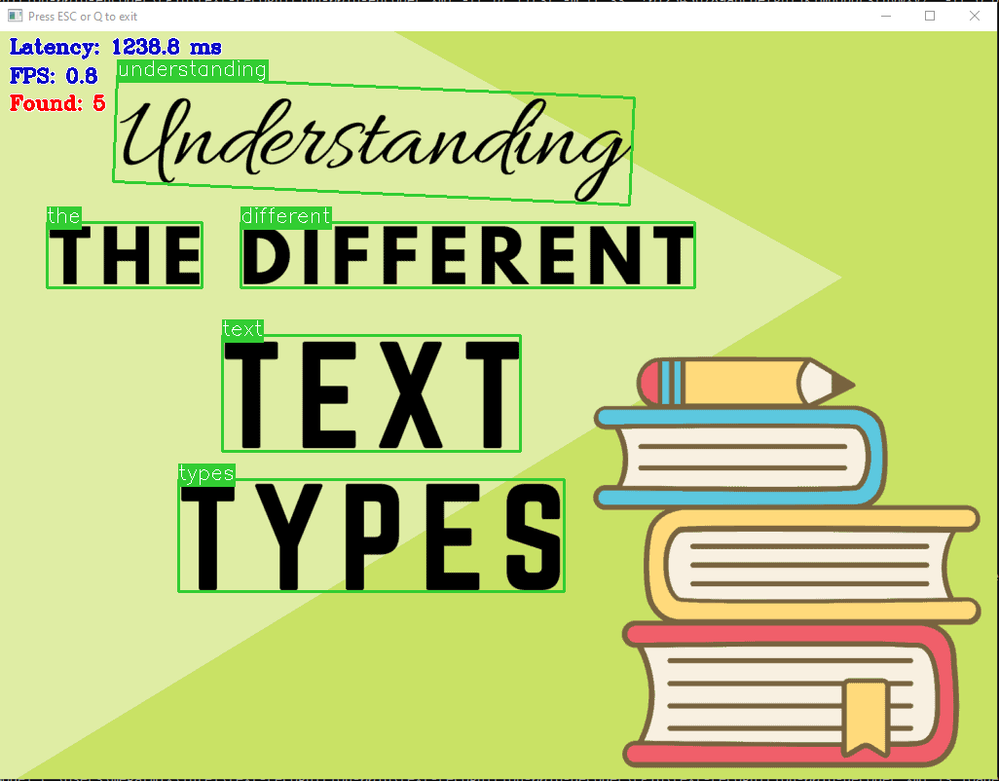

I have validated the Text Detection C++ Demo with supported models (text-detection-0003 and text-recognition-0016) and am sharing the results here.

Regards,

Megat

Hi boe,

This thread will no longer be monitored since we have provided a suggestion. If you need any additional information from Intel, please submit a new question.

Regards,

Megat
