Hello,

I have been using the OpenVINO toolkit with my NCS2 for a while now and I have learned a lot.

But I still have not met my intended goals. Every time I get close, a major obstacle or redirection arises. I am careful about posting for help, so please forgive me if the info I am seeking is posted already. I am asking for direction.

In a nutshell, many months ago I knew nothing about AI or ML whatsoever, but I had a bunch of cameras, a few Raspberry Pis, and my interest. With only vague knowledge, I impulse-bought the Neural Compute Stick 2 on Amazon one day. I figured I would like to monitor the activity of my cats while I am away. If that works, then train voice commands, or something.

I am using a Dell Inspiron 5558 (Windows 10, i5, no NVIDIA GPU) and I own two Neural Compute Stick 2 units to work with. I also have a project set up on Google Colab if I need a GPU or CUDA.

I am under the impression that the NCS2 and OpenVINO are mostly designed for inference. It was tough to figure out anything from level 0, but I did get to the point where I could install OpenVINO + DL Streamer and run inference on my NCS2 from my CCTV camera. I am working in Python. I know PHP, and I can read and modify Python. I also compiled OpenVINO on the RPi 4B+. I messed with the Open Model Zoo and went through the notebooks. I installed Docker and somehow got the Workbench up and running. Overall, I'm now just using OpenVINO in Python on Windows.

In an ideal world, there would be some way to take footage from my Blue Iris software, run object detection on it through OpenVINO, and save the results to a MySQL database which I can check periodically. OpenVINO does have training extensions for custom datasets, just as Blue Iris uses DeepStack (or something) for vision recognition. Blue Iris's DeepStack AI will not run on the NCS2, and the guides on the OpenVINO training extensions use Ubuntu and no NCS2, and are confusing to the casual techie. I'm trying.

So far I have managed to create a dataset of about 900 training images and 300 validation images, and ran them through YOLOv7. I set up a Google Colab and produced my *.pt weights file.

I can run YOLO inference and get accurate object detection on my CPU; it is just slow.

I have been trying for a few days now to convert the *.pt file into a working OpenVINO IR file set.

I followed the notebook : Convert a PyTorch Model to ONNX and OpenVINO™ IR — OpenVINO™ documentation — Version(latest)

and it works on the specific fastseg model, but not my *.pt model. I have checked input and output shapes. At some point it gets too deep for me.

same thing.

I seem to be able to convert my *.pt models to *.onnx, and then use the OpenVINO Model Optimizer to convert them into IR format. I can also read out the input and output shapes. But running inference on my own converted models always outputs some form of crap. I reshape, reconvert, re-encode the data, and I get a new form of mismatch, one which might require an I.T. college degree to interpret. I'm trying.
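One way to sanity-check an output shape before digging into the data itself is to work out what shape to expect. The sketch below is only that, a sketch: it assumes the usual three-scale YOLO detection head (strides 8, 16 and 32, three anchors per scale) and an export that concatenates all scales into a single output tensor. The function name and the class count of 2 are hypothetical, chosen for illustration.

```python
def expected_yolo_output_shape(img_size=640, num_classes=2,
                               strides=(8, 16, 32), anchors_per_scale=3):
    """Return the (batch, rows, columns) shape of one concatenated YOLO output.

    Assumes a standard three-scale head; a model exported without
    concatenation would instead expose one raw tensor per scale.
    """
    # Each scale splits the image into (img_size // stride)^2 grid cells,
    # and every cell predicts `anchors_per_scale` candidate boxes.
    rows = sum(anchors_per_scale * (img_size // s) ** 2 for s in strides)
    # Each row holds x, y, w, h, objectness, then one score per class.
    columns = 5 + num_classes
    return (1, rows, columns)


print(expected_yolo_output_shape(640, num_classes=2))  # (1, 25200, 7)
```

If the shape reported for the converted model does not match this kind of arithmetic, the mismatch is more likely in which output nodes were exported than in the image data being fed in.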

Sorry for not being specific about the errors; I am not listing bugs (yet).

My question: What would be the best way to train on a custom dataset and get the model running on my NCS2?

I started with YOLO because it seemed the most user friendly, but I cannot get it converted over to IR. I'm open to using TensorFlow, PyTorch, or Open Model Zoo + OTE. I just need a working guide with working demos which are slightly flexible on the variables and data. I want to train models to recognize specific individuals, and run them on my NCS2 sticks. Am I on the right path?

From my side, I don't see any issue converting and running inference on both the original YOLOv7 model and your model (named best).

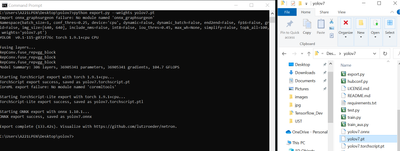

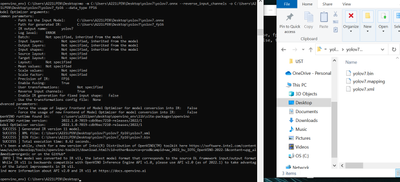

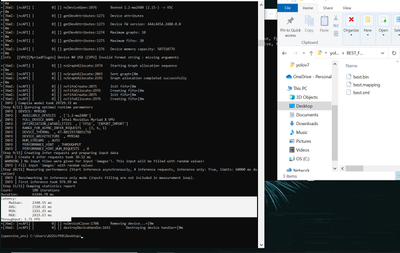

Conversion to ONNX using the script export.py

YOLOV7

BEST

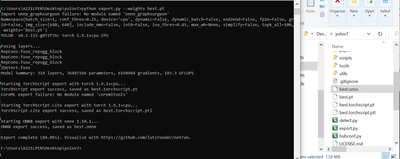

Infer the ONNX model using the OpenVINO benchmark_app (no device is specified, so inference defaults to the CPU; I'm using an Intel CPU here)

YOLOV7

BEST

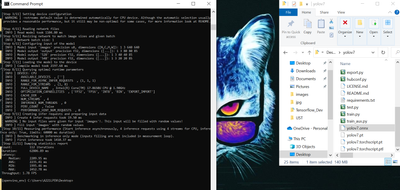

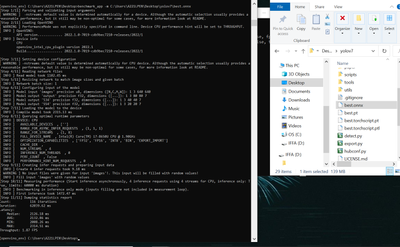

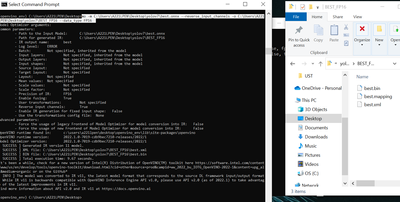

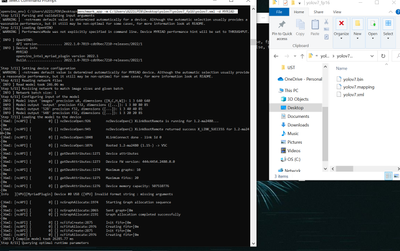

Convert ONNX into OpenVINO IR with FP16 precision

YOLOV7

BEST

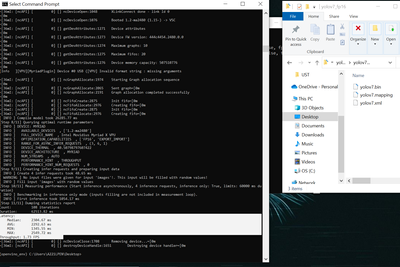

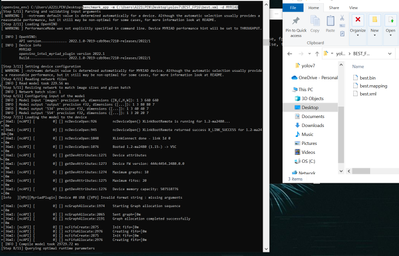

Infer the IR with NCS2/MYRIAD

YOLOV7

BEST
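For readers who want to try the same step from Python rather than benchmark_app, here is a minimal sketch, assuming an OpenVINO 2022.x runtime that still includes the MYRIAD plugin. The helper names and the `best.xml` path are hypothetical. The device picker matches on the name prefix because a system with more than one stick may list them with a suffix after `MYRIAD`.

```python
def pick_device(available, preferred=("MYRIAD", "CPU")):
    """Return the first preferred device family found in `available`."""
    # Compare only the part before the first dot, so an entry with a
    # device-specific suffix still counts as that device family.
    families = {name.split(".")[0] for name in available}
    for name in preferred:
        if name in families:
            return name
    raise RuntimeError(f"none of {preferred} found in {list(available)}")


def compile_for_stick(xml_path):
    """Read an IR file and compile it for the NCS2, falling back to CPU."""
    # Imported lazily so pick_device() can be used without OpenVINO installed.
    from openvino.runtime import Core

    core = Core()
    device = pick_device(core.available_devices)
    compiled = core.compile_model(core.read_model(xml_path), device)
    # Print the shapes the compiled model actually expects and produces.
    for port in compiled.inputs:
        print("input ", port.any_name, port.shape)
    for port in compiled.outputs:
        print("output", port.any_name, port.shape)
    return compiled


# Example call (requires OpenVINO and a converted model on disk):
# compiled = compile_for_stick("best.xml")
```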

Therefore, from the OpenVINO perspective, I don't see any issue with the model.

For implementing those YOLO models (training, inference, etc.) in Google Colab, you'll need to think outside the box and work it out from the Google Colab documentation. Colab has functionality that lets you import your own script and use it.

This part is beyond the use of OpenVINO, so it is outside the scope of this forum.

Note: one of the main purposes of using Intel OpenVINO is to enable inference on Intel GPUs.

If you want to use CUDA/NVIDIA, you might as well use other deep learning platforms, e.g. TensorFlow and OpenCV.

Sincerely,

Iffa

From my side, I don't see any issue converting & inferencing both the original YOLOv7 and your model (model named best)

Conversion to ONNX using the script export.py

YOLOV7

BEST

Infer the ONNX model using OpenVINO benchmark_app (default infer without device specification means I'm using intel CPU)

YOLOV7

BEST

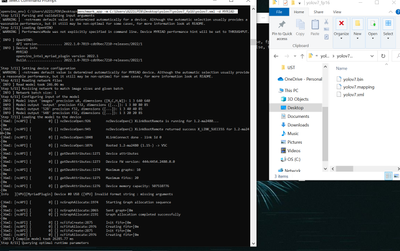

Convert ONNX into OpenVINO IR with FP16 precision

YOLOV7

BEST

Infer the IR with NCS2/MYRIAD

YOLOV7

BEST

Therefore, from OpenVINO perspective, I don't see any issue with the model.

For implementing those YOLO models (training/infer/etc) in Google Colab you'll need to think out of the box and figure it out by reading the Google Colab documentation. There's a functionality in that collab where you could import your own script and utilize it.

This part is beyond OpenVINO utilization hence it's irrelevant to be answered in this forum.

Note: one of the main purpose of using Intel OpenVINO is to enable inferencing on Intel GPU.

If you want to use CUDA/NVDIA, you might as well use other Deep Learning Platforms e.g. Tensorflow & OpenCV.

Sincerely,

Iffa

Iffa,

Thank you for your attention to this matter.

I was able to successfully prepare a dataset, build a model, convert it to OpenVINO, and run inference on the custom model.

I even went ahead and installed the OpenVINO runtime on my Raspberry Pi 4B+ and put the NCS2 stick on there to run the model.

The missing element was using Netron to look at my ONNX file and find the output layers. Once I added the --output flag to my Model Optimizer command, the result was A-OK.
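For anyone following along, the command described above can be sketched roughly as below. This is an illustration under assumptions, not the exact command used: the node names are placeholders for whatever Netron shows in your own ONNX file, `best.onnx` and the `ir` directory are hypothetical, and `--data_type FP16` is the FP16 option in the 2022-era Model Optimizer (other releases may spell it differently).

```python
import shlex


def build_mo_command(onnx_path, output_nodes, output_dir="ir", fp16=True):
    """Build a Model Optimizer command line as a list of arguments."""
    cmd = ["mo", "--input_model", onnx_path, "--output_dir", output_dir]
    if output_nodes:
        # --output takes a comma-separated list of node names at which
        # the converted model should end.
        cmd += ["--output", ",".join(output_nodes)]
    if fp16:
        # The NCS2 runs FP16 models, so request FP16 weights.
        cmd += ["--data_type", "FP16"]
    return cmd


# Placeholder node names; read the real ones from Netron.
cmd = build_mo_command("best.onnx", ["out_node_1", "out_node_2", "out_node_3"])
print(shlex.join(cmd))
```

The printed line can be pasted into a terminal, or the list can be passed to `subprocess.run(cmd, check=True)` from a script.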

This was a very difficult project and I am glad I was able to get a working demo from start to finish. I will be breaking from this effort for several weeks/a few months.

The goal is to get my CCTV camera system to recognize and monitor my cats' indoor activities and record them to MySQL (or something). I needed to train a model to recognize each cat individually. Now I am aware this is possible, and I have working components which are able to manage the operations.
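As a rough idea of what the recording side could look like, here is a small sketch that logs detections to a database. It uses Python's built-in sqlite3 as a stand-in for MySQL so that it runs without a server; the table layout, camera names, and cat labels are all made up for illustration.

```python
import sqlite3
from datetime import datetime, timezone


def open_log(path=":memory:"):
    """Open (or create) the detection log and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS detections ("
        "seen_at TEXT NOT NULL, camera TEXT NOT NULL, "
        "label TEXT NOT NULL, confidence REAL NOT NULL)"
    )
    return conn


def log_detection(conn, camera, label, confidence, seen_at=None):
    """Store one detection; the timestamp defaults to the current UTC time."""
    seen_at = seen_at or datetime.now(timezone.utc).isoformat()
    conn.execute(
        "INSERT INTO detections VALUES (?, ?, ?, ?)",
        (seen_at, camera, label, float(confidence)),
    )
    conn.commit()


conn = open_log()
log_detection(conn, "kitchen", "cat_a", 0.91)
log_detection(conn, "kitchen", "cat_b", 0.78)
log_detection(conn, "hallway", "cat_a", 0.88)

# Count how often each cat was seen.
query = "SELECT label, COUNT(*) FROM detections GROUP BY label ORDER BY label"
for label, count in conn.execute(query):
    print(label, count)
```

Swapping in MySQL later would mostly mean changing the connection call and the placeholder style, since the SQL itself is plain.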

Thanks!

Greetings,

Intel will no longer monitor this thread since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Sincerely,

Iffa
