- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

KMP_SET_STACK_SIZE affects the stack size of the subsequently created threads. Use the linker option to specify the stack size of the main thread. To verify this assumption:

#ifdef _OPENMP

CALL KMP_SET_STACKSIZE(104857600)

STACKSIZE=KMP_GET_STACKSIZE()

#endif

#ifdef _OPENMP

!$omp parallel

NTHR = OMP_GET_NUM_THREADS()

SUCCESS=SETENVQQ('I_MPI_PIN_DOMAIN=omp')

IF(STACKSIZE/=KMP_GET_STACKSIZE()) THEN

WRITE(*,*) "Stack size issue ", OMP_GET_THREAD_NUM(), KMP_GET_STACKSIZE()

STOP

ENDIF

!$omp end parallel

I know this is a dummy test application. Within the OpenMP portion of the code you should refrain from performing the reduction of large arrays. Instead, if possible, you should have each OpenMP thread work on independent slices of the array. You may have to work out some issues at boundaries, but this is generally more efficient than total array reduction.

Also, try to use the {...}code button to paste code examples (when small). This is what I used above.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have found that a similar limitation in the use of the Reduction clause is also available in solving an OpenMP problem: if the dimension of an array in the clause exceeds the default stack size for threads 4Mb, the stack overflow takes place. The use both the KMP_SET_STACKSIZE routine and IFPORT utility to increase the value of the OMP_STACKSIZE environment variable that defines the stack size for threads created by the OpenMP implementation to 100Mb does not resolve the problem, as the size of the stack for initial (or master) thread remains unchanged and unsufficient.

Below the source of a dummy FORTRAN test_openmp code is given:

PROGRAM TEST_OPENMP

USE IFPORT

IMPLICIT NONE

#ifdef _OPENMP

INCLUDE 'omp_lib.h'

#endif

INTEGER :: I, NTHR, TID, STACKSIZE

INTEGER, PARAMETER :: NDIMX=150, NDIMY=150

REAL(8) :: A0(NDIMX,NDIMY)

LOGICAL :: SUCCESS

#ifdef _OPENMP

SUCCESS=SETENVQQ('OMP_STACKSIZE=100M')

! CALL KMP_SET_STACKSIZE(104857600)

#endif

#ifdef _OPENMP

!$omp parallel

NTHR = OMP_GET_NUM_THREADS()

STACKSIZE=KMP_GET_STACKSIZE()

!$omp end parallel

#endif

WRITE(*,1002)NTHR

1002 FORMAT(1X,'Number of threads=',I2)

WRITE(*,1003)STACKSIZE

1003 FORMAT(1X,'The stack size by thread, bytes, =',I10)

WRITE(*,1004)NDIMX,NDIMY

1004 FORMAT(1X,'NDIMX=',I5,' NDIMY=',I5)

A0=0.

!$OMP PARALLEL PRIVATE(TID,NTHR)

TID=OMP_GET_THREAD_NUM()

WRITE(*,'(" Thread number=",I3)') TID

IF(TID.EQ.0) THEN

NTHR = OMP_GET_NUM_THREADS()

WRITE(*,'(" Number of threads= ",I3)')NTHR

ENDIF

!$OMP DO SCHEDULE(AUTO)

!$OMP+REDUCTION(+:A0)

!$OMP+PRIVATE(I)

DO I=1,10

A0=A0+1.

ENDDO

!$OMP END DO

!$OMP END PARALLEL

WRITE(*,'(" SUM(A0)= ",PE16.8)') SUM(A0)

STOP

END PROGRAM TEST_OPENMP

The code was compiled under Windows 10, installed on Intel i7-4960X based (6 cores, 12 threads) PC, by Intel(R) Visual Fortran Intel(R) 64 Compiler for applications running on Intel(R) 64, Version 17.0.0.109. The following makefile was applied:

ALL: test_openmp.exe

test_openmp.obj: test_openmp.for

ifort @fast_Intel.txt test_openmp.for \

>test_openmp.err 2>&1

test_openmp.exe: test_openmp.obj

ifort test_openmp.obj /link \

/out:test_openmp.exe >test_openmp_link.err 2>&1

with options defined in the fast_Intel.txt file:

/c /O3 /Qprec-div- /QxHost /Qopenmp /fpp /Qopenmp-lib:compat

If the REAL(8) array A0(NDIMX,NDIMY) in the code is defined with parameters NDIMX=150, NDIMY=150 (total amount of memory used is 180000 bytes < 4Mb) the following listing is generated after entering:

test_openmp > test_openmp.lst 2>&1

Number of threads=12

The stack size by thread, bytes, = 104857600

NDIMX= 150 NDIMY= 150

Thread number= 0

Thread number= 6

Thread number= 11

Thread number= 9

Thread number= 3

Thread number= 5

Thread number= 1

Thread number= 4

Number of threads= 12

Thread number= 8

Thread number= 10

Thread number= 2

Thread number= 7

SUM(A0)= 2.25000000E+05

If array A0 is defined with parameters NDIMX=200, NDIMY=200 (total amount of memory used is 320000 bytes < 4Mb) the stack overflow takes place, nevertheless, the default stack size limit for threads 4Mb is not exceeded.

The use of additional option /Fn, with n=104857600, or /heap-arrays:104857600 does not resolve the problem.

The joint use of additional options /Qparallel and /Qpar-adjast-stack:104857600 generates a catastrophic error.

Maybe, there are other options to compile/link the dummy code to resolve the problem?

Andrei Voloshchenko

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

As stated in my earlier post:

KMP_SET_STACK_SIZE affects the stack size of the subsequently created threads. Use the linker option to specify the stack size of the main thread.

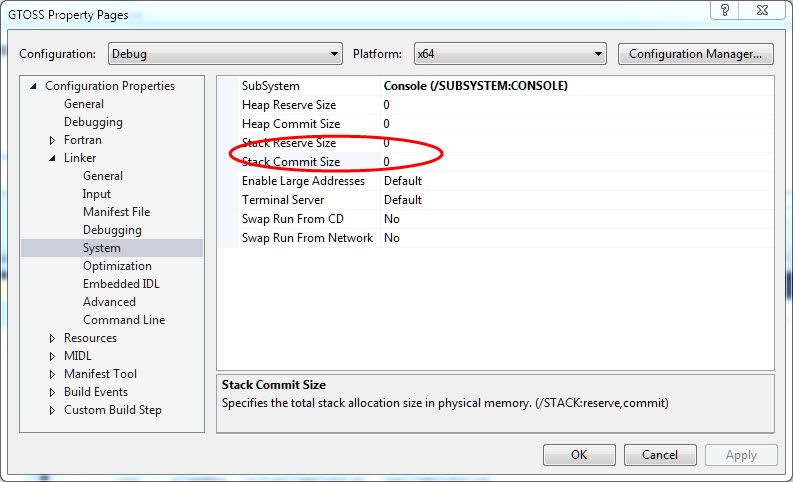

You use the linker properties to set the stack size for the main thread. Set Commit size to the initial value (probably your known requirement) and Reserve size to .GE. commit size to provide room for any uncertainties. These sizes are for the main thread only.

When porting to Linux, use the appropriate command line option.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What would happen if you made the array A0 allocatable ?

This should take the primary copy off the stack, but what would happen to the reduction copies ?

The other option is to do your own reduction, adding a thread id dimension to the A0 array, as:

ALLOCATE ( A0(NDIMX,NDIMY, 0:threads) )

John

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear Mr. Jim Dempsey,

Thank you for your help!

Sorry, but I forget that due the use the Microsoft Incremental Linker from the Microsoft Visual Studio by Intel Visual Fortran Compiler, to receive the full list of link options, available for ifort, the following command should be entered:

link >link_help.lst

Details in the use available Microsoft Visual Studio 2015 Linker options can be found in

https://msdn.microsoft.com/en-us/library/y0zzbyt4.aspx

The following makefile seems acceptable for test_openmp dummy problem:

ALL: test_openmp.exe

test_openmp.obj: test_openmp.for

ifort @fast_Intel.txt test_openmp.for \

>test_openmp.err 2>&1

test_openmp.exe: test_openmp.obj

ifort test_openmp.obj /link /STACK:52428800,26214400 \

/out:test_openmp.exe >test_openmp_link.err 2>&1

with options defined in the fast_Intel.txt file:

/c /O3 /Qprec-div- /QxHost /Qopenmp /fpp /Qopenmp-lib:compat

The following makefile seems acceptable for test_mpi_openmp dummy problem:

ALL: test_mpi_openmp.exe

test_mpi_openmp.obj: test_mpi_openmp.for

ifort @fast_Intel.txt test_mpi_openmp.for \

>test_mpi_openmp.err 2>&1

test_mpi_openmp.exe: test_mpi_openmp.obj

ifort test_mpi_openmp.obj impi.lib /link /STACK:52428800,26214400 \

-I_MPL_LINK=opt_mt /out:test_mpi_openmp.exe \

>test_mpi_openmp_link.err 2>&1

with the same options

Andrei Voloshchenko

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear Mr. Jim Dempsey,

Thank you for your help!

Sorry, but I forget that due the use the Microsoft Incremental Linker from the Microsoft Visual Studio by Intel Visual Fortran Compiler, to receive the full list of link options, available for ifort, the following command should be entered:

link >link_help.lst

Details in the use available Microsoft Visual Studio 2015 Linker options can be found in

https://msdn.microsoft.com/en-us/library/y0zzbyt4.aspx

The following makefile seems acceptable for test_openmp dummy problem:

ALL: test_openmp.exe

test_openmp.obj: test_openmp.for

ifort @fast_Intel.txt test_openmp.for \

>test_openmp.err 2>&1

test_openmp.exe: test_openmp.obj

ifort test_openmp.obj /link /STACK:52428800,26214400 \

/out:test_openmp.exe >test_openmp_link.err 2>&1

with options defined in the fast_Intel.txt file:

/c /O3 /Qprec-div- /QxHost /Qopenmp /fpp /Qopenmp-lib:compat

The following makefile seems acceptable for test_mpi_openmp dummy problem:

ALL: test_mpi_openmp.exe

test_mpi_openmp.obj: test_mpi_openmp.for

ifort @fast_Intel.txt test_mpi_openmp.for \

>test_mpi_openmp.err 2>&1

test_mpi_openmp.exe: test_mpi_openmp.obj

ifort test_mpi_openmp.obj impi.lib /link /STACK:52428800,26214400 \

-I_MPL_LINK=opt_mt /out:test_mpi_openmp.exe \

>test_mpi_openmp_link.err 2>&1

with the same options

Andrei Voloshchenko

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear colleagues, thank you for your inquire into problem. The solution is in option of Microsoft Visual Studio linker. However I can't reproduce the difference of behavior for main and secondary threads.

jimdempseyatthecove wrote:

KMP_SET_STACK_SIZE affects the stack size of the subsequently created threads. Use the linker option to specify the stack size of the main thread. To verify this assumption:

For the program

PROGRAM TEST_MPI_OPENMP

IMPLICIT NONE

INCLUDE 'omp_lib.h'

INCLUDE 'mpif.h'

INTEGER :: INFO, NTHR, STACKSIZE

CALL MPI_INIT(INFO)

CALL KMP_SET_STACKSIZE(104857600)

STACKSIZE=KMP_GET_STACKSIZE()

!$omp parallel

NTHR = OMP_GET_NUM_THREADS()

WRITE(*,*) "Stack size issue ",

+ OMP_GET_THREAD_NUM(), KMP_GET_STACKSIZE()

!$omp end parallel

CALL MPI_FINALIZE(INFO)

END PROGRAM TEST_MPI_OPENMP

running with -n 2 option the output is

Stack size issue 0 104857600 Stack size issue 1 104857600 Stack size issue 2 104857600 Stack size issue 3 104857600 Stack size issue 0 104857600 Stack size issue 4 104857600 Stack size issue 1 104857600 Stack size issue 2 104857600 Stack size issue 5 104857600 Stack size issue 6 104857600 Stack size issue 7 104857600 Stack size issue 3 104857600 Stack size issue 4 104857600 Stack size issue 6 104857600 Stack size issue 5 104857600 Stack size issue 7 104857600

The output for all threads is the same, so there is no difference between main and secondary threads.

John Campbell wrote:

What would happen if you made the array A0 allocatable ?

We've examined this case also. The result is that treads "skip" iterations with varying result from run to run, equaling "almost" correct one. Using of first or last dimension for thread-specific variable behaves in the same way. Why does they skip, whereas they use different slices of array? Of course, if to specify CRITICAL section for slice operation with A0, result is correct.

PROGRAM TEST_MPI_OPENMP

IMPLICIT NONE

INCLUDE 'mpif.h'

INCLUDE 'omp_lib.h'

INTEGER :: I, J, NTHR, MPRN, SIZPRN, INFO, TID

INTEGER, PARAMETER :: NDIMX=2000, NDIMY=2000

REAL(8), DIMENSION(:,:,:), ALLOCATABLE ::

& A0

CALL MPI_INIT(INFO)

CALL MPI_COMM_SIZE(MPI_COMM_WORLD,SIZPRN,INFO)

CALL MPI_COMM_RANK(MPI_COMM_WORLD,MPRN,INFO)

!$OMP PARALLEL PRIVATE(TID), SHARED(NTHR)

TID=OMP_GET_THREAD_NUM()

WRITE(*,'("MPI RANK ",I4," OMP TH R ",I4)') MPRN, TID

IF(TID.EQ.0) THEN

NTHR = OMP_GET_NUM_THREADS()

WRITE(*,'("MPI RANK ",I4," OMP NUM THR ",I4)') MPRN, NTHR

ENDIF

!$OMP END PARALLEL

ALLOCATE(A0(NTHR,NDIMX,NDIMY))

A0=0.

!$OMP PARALLEL SHARED(A0)

TID=OMP_GET_THREAD_NUM()+1

!$OMP DO SCHEDULE(AUTO)

!$OMP+PRIVATE(I)

DO I=1,10

A0(TID,:,:)=A0(TID,:,:)+1.

ENDDO

!$OMP END DO

!$OMP END PARALLEL

WRITE(*,'("MPI RANK ",I4," SUM(A0) ",PE16.8)') MPRN, SUM(A0)

DEALLOCATE(A0)

CALL MPI_FINALIZE(INFO)

END PROGRAM TEST_MPI_OPENMP

Sample outputs:

MPI RANK 0 SUM(A0) 3.81908480E+07 MPI RANK 1 SUM(A0) 4.00000000E+07 MPI RANK 0 SUM(A0) 3.99937820E+07 MPI RANK 1 SUM(A0) 3.99999430E+07

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Alexander,

The addition of the Link options corrected Volosch's problem. This leads me to suspect that there is a version difference in the OpenMP runtime system that your version does not require the linker option but his does.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear Jim, thank you, the runtime error with message about stack overflow disappeared. But my question was, how to reproduce the difference of behavior of stack size of main and secondary threads.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The resultant sum is expected to be 4.0E+7

There are three experiments to perform that may shed light on the problem:

1) Change the FORMAT on line 31 to use D16.8

2) Change line 28 to add 1.0D0 (use double precision literal as opposed to the single precision 1.)

3) Wrap line 27 in a critical section

I know 3) is not what you want. The test is for diagnosis of the problem.

Report back, then I can help diagnose this further.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear Jim, thank you kindly.

My mistake was that I havn't declared the variable TID as private in the second parallel region beginning from string #22. Now it works fine, thank you.

!$OMP PARALLEL PRIVATE(TID), SHARED(A0)

BR, Alexander.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear Mr. Jim Dempsey,

From about 20 test hybrid MPI/OpenMP jobs for our code KATRIN I have found a job, where the use of the Reduction clause produces an error. Others 19 jobs were solved by KATRIN code with no problems. The code is too large to be presented here, so, only two small fragments of the code are given:

First fragment (routine WMRTZ is called):

DO 30 LSR=1,NSRD

write(m2,4008)myproc,LSR

4008 format(1x,'myproc=',i2,' LSR=',i2)

DO 31 ISR=ISRMN(LSR),ISRMX(LSR)

JSR=NSRD+2-ISR-LSR

iproc=(JSR-1)*NSRR+ISR-1

write(m2,4000)myproc,iproc,LSR,ISRMN(LSR),ISRMX(LSR),ISR,JSR

4000 format(1x,'myproc=',i2,' iproc=',i2,' LSR=',i2,' ISRMN(LSR)=',i2,

+' ISRMX(LSR)=',i2,' ISR=',i2,' JSR',i2)

if(myproc.eq.iproc) then

inext=ISR-1

jnext=JSR-1

write(m2,4009)myproc,iproc,inext,jnext

4009 format(1x,'myproc=',i2,' iproc=',i2,' inext=',i2,' jnext=',i2)

iprev=ISR+1

jprev=JSR+1

if(iprev.le.NSRR) then

iprevp=(JSR-1)*NSRR+iprev-1

write(m2,4001)myproc,ISR,JSR,iprev,iprevp

4001 format(1x,'myproc=',i2,' ISR=',i2,' JSR=',i2,' iprev=',i2,

+' iprevp=',i2)

call RECVFR(iprevp,2,FR0I,BUFFR(1,1,JSR),JSRB,JSRE,JSR,

+MTOMAX,1,MTOTMM,K)

end if

if(jprev.le.NSRT) then

jprevp=(jprev-1)*NSRR+ISR-1

write(m2,4002)myproc,ISR,JSR,jprev,jprevp

4002 format(1x,'myproc=',i2,' ISR=',i2,' JSR=',i2,' jprev=',i2,

+' jprevp=',i2)

call RECVFT(jprevp,1,FT0F,BUFFT(1,1,ISR),ISRB,ISRE,ISR,

+MTOMAX,1,MTOTMM,K)

end if

CALL WMRTZ(FZ0L,FD000,SD000,FM,VR0,VTHE0,VZ0,MR,XR,W,YKSI,

+YETA,Y,QTOT,KOMP,AD(1,1,K),FR0I(1,1,K),FT0F(1,1,K),A0(1,1,K),

+A1(1,1,K),

+A2(1,1,K),B0(1,1,K),B1(1,1,K),B2(1,1,K),C0(1,1,K),C1(1,1,K),

+C2(1,1,K),AM1(1,1,K),AMTT(1,1,K),PI1,PIT,MRR,MRT,

+ISRB,ISRE,JSRB,JSRE,SRB,K,KK,ISR,JSR,-1,-1,ALPS,MLB,MLM,1,MTETMM)

if(inext.gt.0) then

inextp=(JSR-1)*NSRR+inext-1

write(m2,4003)myproc,ISR,JSR,inext,inextp

4003 format(1x,'myproc=',i2,' ISR=',i2,' JSR=',i2,' inext=',i2,

+' inextp=',i2)

call SENDFR(inextp,2,FR0I,BUFFR(1,1,JSR),JSRB,JSRE,JSR,

+MTOMAX,1,MTOTMM,K)

end if

if(jnext.gt.0) then

jnextp=(jnext-1)*NSRR+ISR-1

write(m2,4004)myproc,ISR,JSR,jnext,jnextp

4004 format(1x,'myproc=',i2,' ISR=',i2,' JSR=',i2,' jnext=',i2,

+' jnextp=',i2)

call SENDFT(jnextp,1,FT0F,BUFFT(1,1,ISR),ISRB,ISRE,ISR,

+MTOMAX,1,MTOTMM,K)

end if

end if

31 CONTINUE

call glbarr ( comm_kat )

write(m2,4006)myproc

4006 format(1x,'myproc=',i2,' Exit from 31 cycle')

30 CONTINUE

write(m2,4007)myproc

4007 format(1x,'myproc=',i2,' Exit from 30 cycle')

call glbarr ( comm_kat )

Second fragment:

SUBROUTINE WMRTZ(FZ0L,FD000,SD000,FM,VR0,VTHE0,VZ0,MR,XR,W,YKSI,

+YETA,Y,QTOT,KOMP,AD,FR0I,FT0F,A0,A1,A2,B0,B1,B2,C0,C1,C2,AM1,

+AMTT,PI1,PIT,MRR,MRT,ISRB,ISRE,JSRB,JSRE,SRB,K,KK,ISR,JSR,

+JEYSW,KEYSW,ALPS,MLB,MLM,LBEG,LEND)

C

C Routine makes transport (r-theta) sweep for a given orthogon for "go

C to left in radial variable (\xi_{l,m}<0) case"

C JEYSW=-1/1 indicates to front/to back (\eta_{l,m}<0/>0) sweep case

C KEYSW=-1/1 indicates to bottom/to top (\mu_{l,m}<0/>0) sweep case

use plib_module, only: myproc, nproc, comm_kat

IMPLICIT DOUBLE PRECISION (A-H,O-Z)

DIMENSION FZ0L(MTETA,NPOINR,NPOINT),FD000(MTETA,NPOINR,NPOINT),

+SD000(MTETA,NPOINR,NPOINT),FM(NPOINR,NPOINT,MXL4),VR0(NPOINR),

+VTHE0(NPOINT),VZ0(NPOINZ),MR(NREGR),XR(NREGR),W(MTETA),

+YKSI(MTETA),YETA(MTETA),Y(MTETA),QTOT(NKSUM),

+KOMP(NREGR,NREGT,NREGZ),AD(NPOINR,NPOINT),FR0I(MTETA,NPOINT),

+FT0F(MTETA,NPOINR),A0(NPOINR,NPOINT),A1(NPOINR,NPOINT),

+A2(NPOINR,NPOINT),B0(NPOINR,NPOINT),B1(NPOINR,NPOINT),

+B2(NPOINR,NPOINT),C0(NPOINR,NPOINT),C1(NPOINR,NPOINT),

+C2(NPOINR,NPOINT),AM1(NPOINR,NPOINT),AMTT(NPOINR,NPOINT),

+PI1(NPOINR,NPOINT,MXL4),PIT(NPOINR,NPOINT,MXL4),MRR(NPOINR),

+MRT(NPOINT),ISRB(NSRR),ISRE(NSRR),JSRB(NSRT),JSRE(NSRT),

+SRB(NSRR+1),ALPS(MTETA),MLB(MTETSM),MLM(MTETSM)

INCLUDE 'C_TAPEID.FOR'

COMMON/LITERA/E0,E1,E2,E3,E4,E5,E6,E7,E8,E9,E10,E025,E05

INCLUDE 'C_LIMITS.FOR'

INCLUDE 'C_SERVE.FOR'

write(m2,1001)myproc,k,JEYSW,KEYSW

1001 format(1x,'Enter WMRTZ,',' myproc=',i2,' k=',i3,' JEYSW=',i3,

+' KEYSW=',i3)

!$OMP PARALLEL

!$OMP DO SCHEDULE(DYNAMIC,1)

!$OMP+REDUCTION(+:A0,A1,A2,B0,B1,B2,C0,C1,C2,AM1,AMTT)

!$OMP+PRIVATE(L1,MBEG,AMU,MLMB,MLME)

DO 102 L=LBEG,LEND

L1=L-LBEG+1

MBEG=MLB(L)

AMU=ABS(Y(MBEG))

MLMB=MBEG+1

MLME=MBEG+MLM(L)-1

write(m2,1000)myproc,l,mbeg,mlme,JEYSW,KEYSW

1000 format(1x,'myproc=',i2,' l=',i2,' mbeg=',i3,' mlme=',i3,

+' JEYSW=',i2,' KEYSW=',i2)

CALL SWMRTZ(FZ0L,FD000,SD000,FM(1,1,L1),VR0,VTHE0,VZ0,MR,XR,W,

+YKSI,YETA,Y,QTOT,KOMP,AD,FR0I,FT0F,A0,A1,A2,B0,B1,B2,C0,C1,C2,AM1,

+AMTT,PI1(1,1,L1),PIT(1,1,L1),MRR,MRT,ISRB,ISRE,JSRB,JSRE,SRB,K,KK,

+MLMB,ISR,JSR,JEYSW,KEYSW,ALPS,AMU,MBEG,MLME)

write(m2,1003)myproc,l,mbeg,mlme,JEYSW,KEYSW

1003 format(1x,'Exit SWMRTZ',' myproc=',i2,' l=',i2,' mbeg=',i3,

+' mlme=',i3,' JEYSW=',i2,' KEYSW=',i2)

102 CONTINUE

!$OMP END DO

!$OMP END PARALLEL

write(m2,1002)myproc,k,JEYSW,KEYSW

1002 format(1x,'Exit WMRTZ,',' myproc=',i2,' k=',i3,' JEYSW=',i3,

+' KEYSW=',i3)

RETURN

END

Here myproc is the MPI process number.

The code was compiled under Windows 10, installed on Intel i7-4960X based (6 cores, 12 threads) PC, by Intel(R) Visual Fortran Intel(R) 64 Compiler for applications running on Intel(R) 64, Version 17.0.0.109. Intel(R) MPI Library 2017 for Windows was used.

The following listings (only final fragments of the listings are presented) generated after entering:

mpiexec.smpd -n 4 katrin_noimsl.exe < t4n2grtz_MPI.inp > t4n2grtz_MPI.lst 2>&1

First listing (standard output unit M2=6):

myproc= 0 nq= 1 ns= 0 k= 1

myproc= 0 LSR= 1

myproc= 0 iproc= 3 LSR= 1 ISRMN(LSR)= 2 ISRMX(LSR)= 2 ISR= 2 JSR 2

Second listing (display and run time errors output):

myproc= 2 nq= 1 ns= 0 k= 1

myproc= 1 nq= 1 ns= 0 k= 1

myproc= 1 LSR= 1

myproc= 1 iproc= 3 LSR= 1 ISRMN(LSR)= 2 ISRMX(LSR)= 2 ISR= 2 JSR 2

myproc= 3 nq= 1 ns= 0 k= 1

myproc= 3 LSR= 1

myproc= 3 iproc= 3 LSR= 1 ISRMN(LSR)= 2 ISRMX(LSR)= 2 ISR= 2 JSR 2

myproc= 3 iproc= 3 inext= 1 jnext= 1

Enter WMRTZ, myproc= 3 k= 1 JEYSW= -1 KEYSW= -1

myproc= 3 l= 1 mbeg= 1 mlme= 2 JEYSW=-1 KEYSW=-1

myproc= 3 l= 2 mbeg= 4 mlme= 6 JEYSW=-1 KEYSW=-1

Exit SWMRTZ myproc= 3 l= 1 mbeg= 1 mlme= 2 JEYSW=-1 KEYSW=-1

Exit SWMRTZ myproc= 3 l= 2 mbeg= 4 mlme= 6 JEYSW=-1 KEYSW=-1

myproc= 3 l= 3 mbeg= 9 mlme= 12 JEYSW=-1 KEYSW=-1

Exit SWMRTZ myproc= 3 l= 3 mbeg= 9 mlme= 12 JEYSW=-1 KEYSW=-1

myproc= 2 LSR= 1

myproc= 2 iproc= 3 LSR= 1 ISRMN(LSR)= 2 ISRMX(LSR)= 2 ISR= 2 JSR 2

job aborted:

rank: node: exit code[: error message]

0: DESKTOP-8HDLOHI: 123

1: DESKTOP-8HDLOHI: 123

2: DESKTOP-8HDLOHI: 123

3: DESKTOP-8HDLOHI: -1073741819: process 3 exited without calling finalize

Please, help me to resolve the problem.

Andrei Voloshchenko

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Assuming the issue resolves around excessive stack consumption due to the REDUCTION with many arrays, I would suggest if at all possible that you consider reworking the code such that it can be performed without REDUCTION. IOW each thread works on an independent slice of each array (preferably at vector boundaries).

If partitioning by slice is not possible, then I suggest manually performing the reduction using ALLOCATABLE arrays:

SUBROUTINE WMRTZ(FZ0L,FD000,SD000,FM,VR0,VTHE0,VZ0,MR,XR,W,YKSI,

+YETA,Y,QTOT,KOMP,AD,FR0I,FT0F,A0,A1,A2,B0,B1,B2,C0,C1,C2,AM1,

+AMTT,PI1,PIT,MRR,MRT,ISRB,ISRE,JSRB,JSRE,SRB,K,KK,ISR,JSR,

+JEYSW,KEYSW,ALPS,MLB,MLM,LBEG,LEND)

C

C Routine makes transport (r-theta) sweep for a given orthogon for "go

C to left in radial variable (\xi_{l,m}<0) case"

C JEYSW=-1/1 indicates to front/to back (\eta_{l,m}<0/>0) sweep case

C KEYSW=-1/1 indicates to bottom/to top (\mu_{l,m}<0/>0) sweep case

use plib_module, only: myproc, nproc, comm_kat

IMPLICIT DOUBLE PRECISION (A-H,O-Z)

DIMENSION FZ0L(MTETA,NPOINR,NPOINT),FD000(MTETA,NPOINR,NPOINT),

+SD000(MTETA,NPOINR,NPOINT),FM(NPOINR,NPOINT,MXL4),VR0(NPOINR),

+VTHE0(NPOINT),VZ0(NPOINZ),MR(NREGR),XR(NREGR),W(MTETA),

+YKSI(MTETA),YETA(MTETA),Y(MTETA),QTOT(NKSUM),

+KOMP(NREGR,NREGT,NREGZ),AD(NPOINR,NPOINT),FR0I(MTETA,NPOINT),

+FT0F(MTETA,NPOINR),A0(NPOINR,NPOINT),A1(NPOINR,NPOINT),

+A2(NPOINR,NPOINT),B0(NPOINR,NPOINT),B1(NPOINR,NPOINT),

+B2(NPOINR,NPOINT),C0(NPOINR,NPOINT),C1(NPOINR,NPOINT),

+C2(NPOINR,NPOINT),AM1(NPOINR,NPOINT),AMTT(NPOINR,NPOINT),

+PI1(NPOINR,NPOINT,MXL4),PIT(NPOINR,NPOINT,MXL4),MRR(NPOINR),

+MRT(NPOINT),ISRB(NSRR),ISRE(NSRR),JSRB(NSRT),JSRE(NSRT),

+SRB(NSRR+1),ALPS(MTETA),MLB(MTETSM),MLM(MTETSM)

DOUBLE PRECISION, ALLOCATABLE, DIMENSION(:,:) ::

+rA0,rA1,rA2,rB0,rB1,rB2,rC0,rC1,rC2,rAM1,rAMTT

INCLUDE 'C_TAPEID.FOR'

COMMON/LITERA/E0,E1,E2,E3,E4,E5,E6,E7,E8,E9,E10,E025,E05

INCLUDE 'C_LIMITS.FOR'

INCLUDE 'C_SERVE.FOR'

write(m2,1001)myproc,k,JEYSW,KEYSW

1001 format(1x,'Enter WMRTZ,',' myproc=',i2,' k=',i3,' JEYSW=',i3,

+' KEYSW=',i3)

!$OMP PARALLEL PRIVATE(rA0,rA1,rA2,rB0,rB1,rB2,rC0,rC1,rC2,rAM1,rAMTT)

!$OMP+PRIVATE(L1,MBEG,AMU,MLMB,MLME)

ALLOCATE(rA0(NPOINR,NPOINT),rA1(NPOINR,NPOINT),rA2(NPOINR,NPOINT),

+rB0(NPOINR,NPOINT),rB1(NPOINR,NPOINT),rB2(NPOINR,NPOINT),

+rC0(NPOINR,NPOINT),rC1(NPOINR,NPOINT),rC2(NPOINR,NPOINT),

+rAM1(NPOINR,NPOINT),rAMTT(NPOINR,NPOINT))

rA0=A0;rA1=A1;rA2=A2

rB0=B0;rB1=B1;rB2=B2

rC0=C0;rC1=C1;rC2=C2

rAM1=AM1;rAMTT=AMTT

!$OMP DO SCHEDULE(DYNAMIC,1)

DO 102 L=LBEG,LEND

L1=L-LBEG+1

MBEG=MLB(L)

AMU=ABS(Y(MBEG))

MLMB=MBEG+1

MLME=MBEG+MLM(L)-1

write(m2,1000)myproc,l,mbeg,mlme,JEYSW,KEYSW

1000 format(1x,'myproc=',i2,' l=',i2,' mbeg=',i3,' mlme=',i3,

+' JEYSW=',i2,' KEYSW=',i2)

CALL SWMRTZ(FZ0L,FD000,SD000,FM(1,1,L1),VR0,VTHE0,VZ0,MR,XR,W,

+YKSI,YETA,Y,QTOT,KOMP,AD,FR0I,FT0F,rA0,rA1,rA2,rB0,rB1,rB2,

+rC0,rC1,,rC2,rAM1,rAMTT,PI1(1,1,L1),PIT(1,1,L1),MRR,MRT,ISRB,ISRE,

+JSRB,JSRE,SRB,K,KK,MLMB,ISR,JSR,JEYSW,KEYSW,ALPS,AMU,MBEG,MLME)

write(m2,1003)myproc,l,mbeg,mlme,JEYSW,KEYSW

1003 format(1x,'Exit SWMRTZ',' myproc=',i2,' l=',i2,' mbeg=',i3,

+' mlme=',i3,' JEYSW=',i2,' KEYSW=',i2)

102 CONTINUE

!$OMP END DO

!$OMP CRITICAL(CRITICAL_A)

A0=A0+rA0;A1=A1+rA1;A2=A2+rA2

!$OMP END CRITICAL(CRITICAL_A)

!$OMP CRITICAL(CRITICAL_B)

B0=B0+rB0;B1=B1+rB1;B2=B2+rB2

!$OMP END CRITICAL(CRITICAL_B)

!$OMP CRITICAL(CRITICAL_C)

C0=C0+rC0;C1=C1+rC1;C2=C2+rC2

!$OMP END CRITICAL(CRITICAL_C)

!$OMP CRITICAL(CRITICAL_AM)

AM1=AM1+rAM1;AMTT=AMTT+rAMTT

!$OMP END CRITICAL(CRITICAL_AM)

DELETE(rA0,rA1,rA2,rB0,rB1,rB2,rC0,rC1,rC2,rAM1,rAMTT)

!$OMP END PARALLEL

write(m2,1002)myproc,k,JEYSW,KEYSW

1002 format(1x,'Exit WMRTZ,',' myproc=',i2,' k=',i3,' JEYSW=',i3,

+' KEYSW=',i3)

RETURN

END

*** The above has not been debugged, that will be up to you.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Jim,

Thanks for your example, as it suggests a more direct way to manage large private arrays, if the intention is to minimise the use of the stack. I have taken your example and simplified it to a single private array, by first allocating and initialising outside the OMP DO, using inside the OMP DO, then finally reducing in a critical region.

Subroutine Simple ( A0, NPOINR,NPOINT, LBEG,LEND ) !C ! Simplified example of replacing REDUCTION with managed allocatable array !C ! IMPLICIT DOUBLE PRECISION (A-H,O-Z) INTEGER NPOINR,NPOINT, LBEG,LEND DOUBLE PRECISION A0(NPOINR,NPOINT) ! INTEGER L, L1 DOUBLE PRECISION, ALLOCATABLE, DIMENSION(:,:) :: rA0 !$OMP PARALLEL PRIVATE(rA0) !$OMP+PRIVATE(L1) ALLOCATE(rA0(NPOINR,NPOINT)) rA0=A0 ! shouldn't this be rA0=0 !$OMP DO SCHEDULE(DYNAMIC,1) DO 102 L=LBEG,LEND L1=L-LBEG+1 CALL SWMRTZ( L1, rA0 ) 102 CONTINUE !$OMP END DO !$OMP CRITICAL(CRITICAL_A) A0=A0+rA0 !$OMP END CRITICAL(CRITICAL_A) DEALLOCATE (rA0) !$OMP END PARALLEL RETURN END

I have a couple of questions:

1) If the ALLOCATE rA0 took place outside the !$OMP PARALLEL region, would the private duplicates of rA0 be on the stack or be Allocated ?

2) You initialise rA0=A0. Shouldn't this be rA0=0, or are there problems with this initialisation? I find ambiguity with initialising PRIVATE accumulators.

3) Would the approach of ALLOCATE inside !$OMP PARALLEL before !$OMP DO be good in general for large PRIVATE arrays, especially as the number of supported threads increases?

( I have picked off the easy DO loops for OMP and I am now investigating an option of applying !$OMP to DO loops, which use 2 x 1mb temporary arrays. These arrays can become a problem for stack usage when the number of available threads increases, while each loop step operations count is only moderate. I am assuming there is some benefit in keeping multiple threads active ?)

I hope you could comment on some of these questions.

John

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

John,

1) When the array rA0 is not allocated prior to parallel region, and passes into the parallel region as private (well make the empty arrays FIRSTPRIVATE due to issue on earlier compilers), then the empty array descriptor is passed into the parallel region. This consumes a relatively small amount of stack. The in the region allocation then obtains the data space from the heap. Should rA0 be allocated prior to the parallel region then the stack gets the array descriptor plus the allocation (see comment in 3) below).

2) line 15, yes rA0 = 0.0_8. What is ambiguous about rA0 = 0.0_8? Note rA0 is not part of a REDUCTION and requires initialization.

3) I recommend large private arrays be allocated inside the region, but before your DO loops (why allocate more than once). Note, although the IVF compiler has /heap-arrays[:size] which can perform large allocation on heap, other compilers may not. Possibly, at some future implementation of OpenMP you can specify PRIVATEHEAP and FIRSTPRIVATEHEAP or something like that.

>>These arrays can become a problem for stack usage when the number of available threads increases

Yes, my KNL has 256 threads, and large SMP systems can have much more.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear Mr. Jim Dempsey,

I have found that after porting our code KATRIN to Linux all (more than 20) test hybrid MPI/OpenMP jobs were solved without any reduction problem if the option

ulimit -s unlimited

is added in .bash_profile to increase memory for the master thread, and the stack size for created threads is increased to 25 Mb by

CALL KMP_SET_STACKSIZE(26214400)

The code was compiled under Red Hat 7.2 64-bit Linux, installed on Intel i7-4960X based (6 cores, 12 threads) PC, by Intel(R) Visual Fortran Intel(R) 64 Compiler for applications running on Intel(R) 64, Version 2017.0.098. Intel(R) MPI Library 2017.0.098 for Linux was used.

Andrei Voloshchenko

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page