On an HPC cluster with Omni-Path interconnect, the attached demo code hangs at the call to MPI_Win_wait on rank 0 when run with two processes on two distinct nodes. The problem only occurs with Intel MPI and not with OpenMPI.

Interestingly, the problem can be circumvented by different settings:

- Setting FI_PROVIDER=tcp avoids the use of psm2 by OFI. This remedies the issue, hinting towards a problem with psm2.

- Setting I_MPI_DEBUG=1 also gets rid of the problem although this setting should not change the behavior of the code apart from creating verbose output.

- Setting I_MPI_FABRIC=foobar also gets rid of the problem. And I actually mean "foobar", i.e. a non-existing fabric. This should not change the behavior at all as MPI will fall back to ofi in this case.

The last bullet point makes me really wonder what the problem could be here. I ran the code with and without I_MPI_FABRIC=foobar and in both cases also set FI_LOG_LEVEL=debug to get verbose output from ofi. Apart from the ordering of lines the outputs are totally identical. However, in one case the code freezes and in the other it does not. Maybe this is some race condition that is influenced by these little environment changes.
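The "identical apart from ordering" observation can be checked mechanically by sorting both logs before diffing them. The following is only a sketch: the helper name `same_lines_ignoring_order` is made up for illustration, and it assumes the logs contain no per-run noise (PIDs, addresses, timestamps) that would have to be filtered out first.

```shell
# Hypothetical helper: succeeds if two log files contain the same lines
# (with the same multiplicity), regardless of line order.
same_lines_ignoring_order() {
    a_sorted=$(mktemp) || return 2
    b_sorted=$(mktemp) || return 2
    LC_ALL=C sort "$1" > "$a_sorted"
    LC_ALL=C sort "$2" > "$b_sorted"
    status=0
    diff "$a_sorted" "$b_sorted" || status=$?
    rm -f "$a_sorted" "$b_sorted"
    return "$status"
}

# Example call with the two attached logs:
#   same_lines_ignoring_order ofi_debug_freezing.txt ofi_debug_invalid_fabric.txt
```

Any remaining differences are printed by `diff`, so an empty output together with exit status 0 means the two runs logged the same set of lines.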

I can reproduce the problem with Intel MPI versions 2021.4.0 and 2021.5.0, using the corresponding ICC versions for compilation. Note that it cannot be reproduced with two processes running on the same node.
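To compare the settings above without watching each run by hand, a time limit can turn "hangs forever" into a testable exit status. This is a minimal sketch, assuming coreutils `timeout` is available on the nodes; the helper name `hangs_within` and the 60-second limit are arbitrary choices for illustration.

```shell
# Hypothetical helper: run a command with a time limit and report whether it
# had to be killed. coreutils `timeout` exits with status 124 when the limit
# is reached.
hangs_within() {
    limit=$1
    shift
    status=0
    timeout "$limit" "$@" || status=$?
    [ "$status" -eq 124 ]
}

# Example use inside a two-node allocation (launch line as used in this thread):
#   if hangs_within 60 srun ./test; then echo "default provider: hang"; fi
#   if hangs_within 60 env FI_PROVIDER=tcp srun ./test; then echo "tcp: hang"; fi
```

Passing the variable through `env` keeps each setting local to one run, so the variants do not leak into each other.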

Attached files:

- test.c - Source code that reproduces the problem.

- impi_debug.txt - Output when setting I_MPI_DEBUG=1. As mentioned, this hides the issue.

- ofi_debug_freezing.txt - Output when setting FI_LOG_LEVEL=debug. The program freezes.

- ofi_debug_invalid_fabric.txt - Same as the previous but with I_MPI_FABRIC=foobar set. The program does not freeze.

Hi,

Thanks for posting in Intel communities.

Could you please provide the following details to investigate more on your issue?

- Operating System details.

- Cluster details (if any).

- Job scheduler being used.

- Steps to reproduce your issue. (Commands used for compiling & running the MPI application on the cluster)

Thanks & Regards,

Santosh

Sure. First some details on the cluster nodes:

cat /etc/redhat-release:

Red Hat Enterprise Linux release 8.3 (Ootpa)

lscpu:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 40

On-line CPU(s) list: 0-39

Thread(s) per core: 1

Core(s) per socket: 20

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Xeon(R) Gold 6148 CPU @ 2.40GHz

Stepping: 4

CPU MHz: 3179.112

CPU max MHz: 3700.0000

CPU min MHz: 1000.0000

BogoMIPS: 4800.00

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 1024K

L3 cache: 28160K

NUMA node0 CPU(s): 0-19

NUMA node1 CPU(s): 20-39

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3 cdp_l3 invpcid_single ssbd mba ibrs ibpb stibp fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm mpx rdt_a avx512f avx512dq rdseed adx smap clflushopt clwb intel_pt avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts hwp hwp_act_window hwp_epp hwp_pkg_req pku ospke md_clear flush_l1d

lspci|grep -i omni:

5e:00.0 Fabric controller: Intel Corporation Omni-Path HFI Silicon 100 Series [discrete] (rev 11)

The workload manager is SLURM in version 21.08.8-2. To reproduce the problem, I can use a job script like the following which I pass to sbatch:

#!/bin/bash

#SBATCH -Amy_account

#SBATCH -t1:0

#SBATCH -N2

#SBATCH -n2

module reset

module load toolchain

module load intel

mpiicc -o test test.c

srun ./test

The intel module loaded there is the intel toolchain from EasyBuild, which brings ICC and Intel MPI into the environment. I tested this toolchain in versions 2021b and 2022.00, which correspond to the ICC and MPI versions mentioned in the first post.

SLURM sets the following environment variables for Intel MPI:

I_MPI_PMI_LIBRARY=/opt/cray/slurm/default/lib/libpmi2.so

I_MPI_PMI=pmi2
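Since small environment changes seem to decide whether the hang occurs, it can help to record the relevant variables from inside the job right before the launch. A minimal sketch follows; the helper name `mpi_related_env` is hypothetical, and the choice of prefixes (`I_MPI_`, `FI_`, `PSM2_`) is an assumption about which variables matter here.

```shell
# Hypothetical helper: list the environment variables that most plausibly
# influence fabric and provider selection (Intel MPI, libfabric, PSM2).
mpi_related_env() {
    env | grep -E '^(I_MPI_|FI_|PSM2_)' | LC_ALL=C sort
}

# Example use in the job script, directly before the srun line:
#   mpi_related_env > "mpi_env.$SLURM_JOB_ID.txt"
```

Saving one such file per run makes it possible to diff the environment of a hanging run against that of a run that completes.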

To eliminate the EasyBuild environment and the legacy ICC compiler as influencing factors, I retried this with a stock installation of oneAPI 2022.1. I loaded the provided modules compiler/2022.0.1 and mpi/2021.5.0 and can reproduce the problem with the following job script:

#!/bin/bash

#SBATCH -Amy_account

#SBATCH -t1:0

#SBATCH -N2

#SBATCH -n2

module reset

module load toolchain/inteloneapi # makes the following modules available

module load compiler/2022.0.1 mpi/2021.5.0

mpiicc -cc=icx -o test test.c

srun --mpi=pmi2 ./test

Hi,

Thanks for providing all the details.

We are working on your issue and we will get back to you soon.

Thanks & Regards,

Santosh

Hi,

We tried running the job with the following script on Rocky Linux machines.

#!/bin/bash

#SBATCH -Amy_account

#SBATCH -t1:0

#SBATCH -N2

#SBATCH -n2

source /opt/intel/oneAPI/2022.2.0.262/setvars.sh

mpiicc -cc=icx -o test test.c

I_MPI_PMI=pmi2 mpirun -bootstrap ssh -n 2 -ppn 1 ./test

It runs fine at our end; we are not able to reproduce the issue. Please refer to the attachment (log.txt) for the output.

So, could you please confirm whether you can run the IMB-MPI1 benchmark or a sample hello world MPI program successfully on 2 distinct nodes?

Example Command:

mpirun -n 2 -ppn 1 -hosts <host1>,<host2> ./helloworld

Also, please share with us the cluster checker log using the below command:

source /opt/intel/oneapi/setvars.sh

clck -f <nodefile>

Please refer to the Getting Started Guide for Cluster Checker for more details.

Thanks & Regards,

Santosh

Thank you for testing this on your end. Can you confirm that your nodes are connected via Omni-Path and that Intel MPI uses the ofi fabric with psm2 provider? I think all of these factors are relevant to this issue.

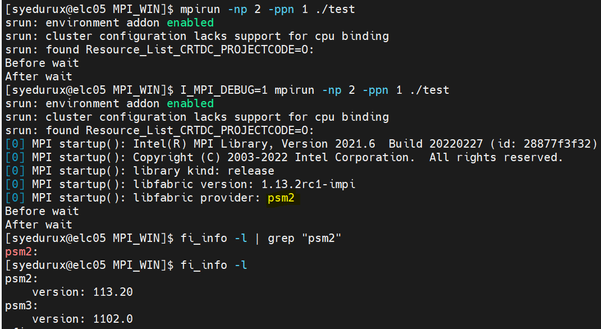

I can still reproduce the problem on our end, also with the most recent oneAPI, also using a similar mpirun call:

module load clck/2021.6.0 compiler/2022.1.0 mpi/2021.6.0

mpiicc -cc=icx -o test test.c

mpirun -np 2 -ppn 1 ./test

MPI startup(): Warning: I_MPI_PMI_LIBRARY will be ignored since the hydra process manager was found

MPI startup(): Warning: I_MPI_PMI_LIBRARY will be ignored since the hydra process manager was found

Before wait

[hangs]

I can confirm that Intel MPI in general works properly on this cluster as it is used productively. This issue seems very specific to the case of using general active target synchronization (MPI_Win_wait) and maybe the fact that rank 0 is both origin and target of the one-sided communication.

I ran the cluster checker on the same two nodes I used for testing and it shows no issues:

Intel(R) Cluster Checker 2021 Update 6 (build 20220318)

SUMMARY

Command-line: clck

Tests Run: health_base

Overall Result: No issues found

--------------------------------------------------------------------------------

2 nodes tested: gpu-[0001-0002]

2 nodes with no issues: gpu-[0001-0002]

0 nodes with issues:

--------------------------------------------------------------------------------

FUNCTIONALITY

No issues detected.

HARDWARE UNIFORMITY

No issues detected.

PERFORMANCE

No issues detected.

SOFTWARE UNIFORMITY

No issues detected.

--------------------------------------------------------------------------------

Framework Definition Dependencies

health_base

|- cpu_user

| `- cpu_base

|- environment_variables_uniformity

|- ethernet

|- infiniband_user

| `- infiniband_base

| `- dapl_fabric_providers_present

|- network_time_uniformity

|- node_process_status

`- opa_user

`- opa_base

--------------------------------------------------------------------------------

Intel(R) Cluster Checker 2021 Update 6

14:12:12 July 6 2022 UTC

Databases used: $HOME/.clck/2021.6.0/clck.db

Hi,

>>"Can you confirm that your nodes are connected via Omni-Path and that Intel MPI uses the ofi fabric with psm2 provider?"

Yes, the nodes are connected via Omni-Path interconnect and use the psm2 provider. But we couldn't reproduce your issue from our end. Please refer to the below screenshot.

Thanks & Regards,

Santosh

Thanks! I hoped it would be reproducible under these conditions but it seems to be some kind of heisenbug or be specific to our cluster. Right now I am out of ideas how to track this down further.

If I figure out any additional factors that contribute to this, I will post again.

Hi,

We haven't heard back from you. Could you please provide any update on your issue?

Thanks & Regards,

Santosh

Unfortunately I was not able to get any more insight. I used the correctness checker from itac using -check_mpi and it tells me that there is a deadlock but it does not provide any more details other than in which MPI routines the program hangs - and we know that already. I also tried modifying the program behavior by introducing sleeps at different positions for different ranks but to no avail. So I think we need to leave it at that unless Intel engineers have an idea on how to proceed.

Hi,

Could you please try the debugging commands mentioned below using the gdb debugger?

- Compile your code with "-g" option.

mpiicc -g test.c - Run the code using the below command:

mpirun -bootstrap ssh -gdb -n 2 -hosts host1,host2 ./a.outAs running the above command hangs for you, keep the terminal idle without terminating the process(MPI Job).

Run all the below commands in a new terminal:

- Now open a new terminal and do SSH to either host1 or host2.

ssh host1 - After SSH to host1/host2, run the below command to get the PID(Process ID) of the hanged MPI job.

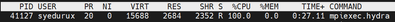

topExample output is as shown in the below screenshot:

Copy the PID of mpiexec.hydra/mpirun command which makes an exit from top. - Now run the below command which redirects us to GDB debugging mode.

gdb attach <PID>Example: gdb attach 41127

- Now run the below command to get the backtrace.

bt

Could you please provide us with the complete backtrace log? This might help us to investigate further on your issue.

Thanks & Regards,

Santosh

I'm having some difficulties getting the proposed procedure to work:

- I need to add -ppn 1 to mpirun to distribute the processes among both hosts

- On the hosts, there is no mpiexec.hydra process but just hydra_pmi_proxy. mpiexec.hydra runs on the node from where I run mpirun.

- Attaching GDB to those hydra_pmi_proxy processes, the backtrace does not provide much information:

#0 0x0000155554695a08 in poll () from /lib64/libc.so.6

#1 0x000000000042c8db in HYDI_dmx_poll_wait_for_event (wtime=7562032) at ../../../../../src/pm/i_hydra/libhydra/demux/hydra_demux_poll.c:39

#2 0x000000000040687d in main (argc=7562032, argv=0x4) at ../../../../../src/pm/i_hydra/proxy/proxy.c:1026

- Attaching GDB to the running ./a.out processes is not possible as these are already attached to mpigdb

- In mpigdb I can run the program but I don't know how to interrupt it once it hangs to get the backtraces

So I went a different route which hopefully provides the same result. I started the program as follows:

mpiicc -g test.c

mpirun -bootstrap ssh -n 2 -ppn 1 -hosts host1,host2 ./a.out

Then I ssh onto both hosts and run gdb attach PID where PID is the one of the running ./a.out.

The backtraces vary a bit, depending on when exactly the program is interrupted by attaching gdb. So I took different samples. Unfortunately, the line numbers within test.c (lines 44 and 49) do not exactly match the lines in the test.c attached to this post. But they refer to the only MPI_Win_wait and MPI_Win_free calls in the code.
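Taking several samples per rank can be scripted with gdb's batch mode instead of attaching interactively each time. The following is a sketch under assumptions: the helper name `sample_backtraces` is made up, the binary is assumed to be called a.out with exactly one such process per host, and the `GDB` override exists only to allow a dry run.

```shell
# Hypothetical helper: attach gdb to a running process several times and print
# one backtrace per attach. Set GDB to another command for a dry run.
sample_backtraces() {
    pid=$1
    count=$2
    pause=$3
    i=1
    while [ "$i" -le "$count" ]; do
        echo "=== sample $i (pid $pid) ==="
        "${GDB:-gdb}" -p "$pid" -batch -ex bt
        i=$((i + 1))
        sleep "$pause"
    done
}

# Example on one of the hosts, assuming a single process named a.out:
#   sample_backtraces "$(pgrep -x a.out)" 3 1 > "bt.$(hostname).txt"
```

In batch mode gdb detaches again after printing the backtrace, so the process keeps running (or hanging) between samples.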

Sample 1 (rank 0 interrupted first)

host1 / rank 0:

#0 0x000015555392512c in MPIDI_Progress_test (flags=<optimized out>, req=<optimized out>) at ../../src/mpid/ch4/src/ch4_progress.c:93

#1 MPID_Progress_test_impl (req=0x0) at ../../src/mpid/ch4/src/ch4_progress.c:152

#2 0x0000155553eed57b in MPIDIG_mpi_win_wait (win=<optimized out>) at ../../src/mpid/ch4/src/ch4r_win.h:272

#3 MPIDI_NM_mpi_win_wait (win=<optimized out>) at ../../src/mpid/ch4/netmod/include/../ofi/ofi_win.h:164

#4 MPID_Win_wait (win=<optimized out>) at ../../src/mpid/ch4/src/ch4_win.h:125

#5 PMPI_Win_wait (win=0) at ../../src/mpi/rma/win_wait.c:87

#6 0x0000000000400dd7 in main () at test.c:44

host2 / rank 1:

#0 get_cycles () at /usr/src/debug/libpsm2-11.2.204-1.x86_64/include/linux-x86_64/sysdep.h:93

#1 ips_ptl_poll (ptl_gen=0x621000, _ignored=0) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/ptl_ips/ptl.c:529

#2 0x00001555509cfe8f in __psmi_poll_internal (ep=0x621000, poll_amsh=0) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm.c:1069

#3 0x00001555509c9d4d in psmi_mq_ipeek_inner (mq=<optimized out>, oreq=<optimized out>, status=<optimized out>, status_copy=<optimized out>)

at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_mq.c:1122

#4 __psm2_mq_ipeek (mq=0x621000, oreq=0x0, status=0x2b45f7) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_mq.c:1161

#5 0x0000155550c52480 in psmx2_cq_poll_mq () from /cm/shared/apps/pc2/INTEL-ONEAPI/mpi/2021.6.0/libfabric/lib/prov/libpsmx2-fi.so

#6 0x0000155550c541b4 in psmx2_cq_readfrom () from /cm/shared/apps/pc2/INTEL-ONEAPI/mpi/2021.6.0/libfabric/lib/prov/libpsmx2-fi.so

#7 0x00001555538d35d7 in MPIDI_OFI_Allreduce_intra_direct_recursive_multiplying_template (sendbuf=0x621000, recvbuf=0x0, count=2835959, datatype=1352474384, op=0,

comm_ptr=0x619c48, errflag=0x7fffffffa880, radix=4) at /p/pdsd/scratch/Uploads/IMPI/other/software/libfabric/linux/v1.9.0/include/rdma/fi_eq.h:385

#8 0x00001555538de551 in MPIDI_OFI_Barrier_intra_multiplying (comm_ptr=<optimized out>, errflag=<optimized out>, parameters=<optimized out>)

at ../../src/mpid/ch4/include/../netmod/ofi/intel/ofi_coll_direct.h:2045

#9 MPIDI_NM_mpi_barrier (comm=<optimized out>, errflag=<optimized out>, ch4_algo_parameters_container_in=<optimized out>)

at ../../src/mpid/ch4/netmod/include/../ofi/intel/autoreg_ofi_coll.h:50

#10 MPIDI_Barrier_intra_composition_alpha (comm_ptr=<optimized out>, errflag=<optimized out>, ch4_algo_parameters_container=<optimized out>)

at ../../src/mpid/ch4/src/intel/ch4_coll_impl.h:55

#11 MPID_Barrier_invoke (comm=0x621000, errflag=0x0, ch4_algo_parameters_container=0x2b45f7) at ../../src/mpid/ch4/src/intel/autoreg_ch4_coll.h:26

#12 0x00001555538b0be9 in MPIDI_coll_invoke (coll_sig=0x621000, container=0x0, req=0x2b45f7) at ../../src/mpid/ch4/src/intel/ch4_coll_select_utils.c:3368

#13 0x0000155553890780 in MPIDI_coll_select (coll_sig=0x621000, req=0x0) at ../../src/mpid/ch4/src/intel/ch4_coll_globals_default.c:143

#14 0x0000155553992495 in MPID_Barrier (comm=<optimized out>, errflag=<optimized out>) at ../../src/mpid/ch4/src/intel/ch4_coll.h:31

#15 MPIR_Barrier (comm_ptr=0x621000, errflag=0x0) at ../../src/mpi/coll/intel/src/coll_impl.c:349

#16 0x00001555539710fd in MPIDIG_mpi_win_free (win_ptr=0x621000) at ../../src/mpid/ch4/src/ch4r_win.c:790

#17 0x00001555539909c2 in MPID_Win_free (win_ptr=0x621000) at ../../src/mpid/ch4/src/ch4_win.c:80

#18 0x0000155553ecedd1 in PMPI_Win_free (win=0x621000) at ../../src/mpi/rma/win_free.c:111

#19 0x0000000000400e0d in main () at test.c:49

Sample 2 (rank 1 interrupted first)

host1 / rank 0:

#0 MPIR_Progress_hook_exec_on_vci (vci_idx=0, made_progress=0x0) at ../../src/util/mpir_progress_hook.c:195

#1 0x00001555539252ac in MPIDI_Progress_test (flags=<optimized out>, req=<optimized out>) at ../../src/mpid/ch4/src/ch4_progress.c:88

#2 MPID_Progress_test_impl (req=0x0) at ../../src/mpid/ch4/src/ch4_progress.c:152

#3 0x0000155553eed57b in MPIDIG_mpi_win_wait (win=<optimized out>) at ../../src/mpid/ch4/src/ch4r_win.h:272

#4 MPIDI_NM_mpi_win_wait (win=<optimized out>) at ../../src/mpid/ch4/netmod/include/../ofi/ofi_win.h:164

#5 MPID_Win_wait (win=<optimized out>) at ../../src/mpid/ch4/src/ch4_win.h:125

#6 PMPI_Win_wait (win=0) at ../../src/mpi/rma/win_wait.c:87

#7 0x0000000000400dd7 in main () at test.c:44

host2 / rank 1:

#0 0x0000155550c52452 in psmx2_cq_poll_mq () from /cm/shared/apps/pc2/INTEL-ONEAPI/mpi/2021.6.0/libfabric/lib/prov/libpsmx2-fi.so

#1 0x0000155550c541b4 in psmx2_cq_readfrom () from /cm/shared/apps/pc2/INTEL-ONEAPI/mpi/2021.6.0/libfabric/lib/prov/libpsmx2-fi.so

#2 0x00001555538d35d7 in MPIDI_OFI_Allreduce_intra_direct_recursive_multiplying_template (sendbuf=0x619b4c, recvbuf=0x2, count=-140016, datatype=-139944, op=0,

comm_ptr=0x619c48, errflag=0x7fffffffa880, radix=4) at /p/pdsd/scratch/Uploads/IMPI/other/software/libfabric/linux/v1.9.0/include/rdma/fi_eq.h:385

#3 0x00001555538de551 in MPIDI_OFI_Barrier_intra_multiplying (comm_ptr=<optimized out>, errflag=<optimized out>, parameters=<optimized out>)

at ../../src/mpid/ch4/include/../netmod/ofi/intel/ofi_coll_direct.h:2045

#4 MPIDI_NM_mpi_barrier (comm=<optimized out>, errflag=<optimized out>, ch4_algo_parameters_container_in=<optimized out>)

at ../../src/mpid/ch4/netmod/include/../ofi/intel/autoreg_ofi_coll.h:50

#5 MPIDI_Barrier_intra_composition_alpha (comm_ptr=<optimized out>, errflag=<optimized out>, ch4_algo_parameters_container=<optimized out>)

at ../../src/mpid/ch4/src/intel/ch4_coll_impl.h:55

#6 MPID_Barrier_invoke (comm=0x619b4c, errflag=0x2, ch4_algo_parameters_container=0x7ffffffddd10) at ../../src/mpid/ch4/src/intel/autoreg_ch4_coll.h:26

#7 0x00001555538b0be9 in MPIDI_coll_invoke (coll_sig=0x619b4c, container=0x2, req=0x7ffffffddd10) at ../../src/mpid/ch4/src/intel/ch4_coll_select_utils.c:3368

#8 0x0000155553890780 in MPIDI_coll_select (coll_sig=0x619b4c, req=0x2) at ../../src/mpid/ch4/src/intel/ch4_coll_globals_default.c:143

#9 0x0000155553992495 in MPID_Barrier (comm=<optimized out>, errflag=<optimized out>) at ../../src/mpid/ch4/src/intel/ch4_coll.h:31

#10 MPIR_Barrier (comm_ptr=0x619b4c, errflag=0x2) at ../../src/mpi/coll/intel/src/coll_impl.c:349

#11 0x00001555539710fd in MPIDIG_mpi_win_free (win_ptr=0x619b4c) at ../../src/mpid/ch4/src/ch4r_win.c:790

#12 0x00001555539909c2 in MPID_Win_free (win_ptr=0x619b4c) at ../../src/mpid/ch4/src/ch4_win.c:80

#13 0x0000155553ecedd1 in PMPI_Win_free (win=0x619b4c) at ../../src/mpi/rma/win_free.c:111

#14 0x0000000000400e0d in main () at test.c:49

Sample 3 (rank 0 interrupted first)

host1 / rank 0:

#0 psmi_spin_trylock (lock=<optimized out>) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_lock.h:108

#1 psmi_spin_lock (lock=<optimized out>) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_lock.h:132

#2 psmi_mq_ipeek_inner (mq=<optimized out>, oreq=<optimized out>, status=<optimized out>, status_copy=<optimized out>) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_mq.c:1121

#3 __psm2_mq_ipeek (mq=0x0, oreq=0x7fffffffa630, status=0x1) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_mq.c:1161

#4 0x0000155550c52480 in psmx2_cq_poll_mq () from /cm/shared/apps/pc2/INTEL-ONEAPI/mpi/2021.6.0/libfabric/lib/prov/libpsmx2-fi.so

#5 0x0000155550c541b4 in psmx2_cq_readfrom () from /cm/shared/apps/pc2/INTEL-ONEAPI/mpi/2021.6.0/libfabric/lib/prov/libpsmx2-fi.so

#6 0x0000155553d563fe in MPIDI_OFI_progress (vci=0, blocking=-22992) at /p/pdsd/scratch/Uploads/IMPI/other/software/libfabric/linux/v1.9.0/include/rdma/fi_eq.h:385

#7 0x0000155553925133 in MPIDI_Progress_test (flags=<optimized out>, req=<optimized out>) at ../../src/mpid/ch4/src/ch4_progress.c:93

#8 MPID_Progress_test_impl (req=0x0) at ../../src/mpid/ch4/src/ch4_progress.c:152

#9 0x0000155553eed57b in MPIDIG_mpi_win_wait (win=<optimized out>) at ../../src/mpid/ch4/src/ch4r_win.h:272

#10 MPIDI_NM_mpi_win_wait (win=<optimized out>) at ../../src/mpid/ch4/netmod/include/../ofi/ofi_win.h:164

#11 MPID_Win_wait (win=<optimized out>) at ../../src/mpid/ch4/src/ch4_win.h:125

#12 PMPI_Win_wait (win=0) at ../../src/mpi/rma/win_wait.c:87

#13 0x0000000000400dd7 in main () at test.c:44

host2 / rank 1:

#0 psmi_spin_trylock (lock=<optimized out>) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_lock.h:108

#1 psmi_spin_lock (lock=<optimized out>) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_lock.h:132

#2 psmi_mq_ipeek_inner (mq=<optimized out>, oreq=<optimized out>, status=<optimized out>, status_copy=<optimized out>)

at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_mq.c:1121

#3 __psm2_mq_ipeek (mq=0x0, oreq=0x7ffffffddd50, status=0x1) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_mq.c:1161

#4 0x0000155550c52480 in psmx2_cq_poll_mq () from /cm/shared/apps/pc2/INTEL-ONEAPI/mpi/2021.6.0/libfabric/lib/prov/libpsmx2-fi.so

#5 0x0000155550c541b4 in psmx2_cq_readfrom () from /cm/shared/apps/pc2/INTEL-ONEAPI/mpi/2021.6.0/libfabric/lib/prov/libpsmx2-fi.so

#6 0x00001555538d35d7 in MPIDI_OFI_Allreduce_intra_direct_recursive_multiplying_template (sendbuf=0x0, recvbuf=0x7ffffffddd50, count=1, datatype=-139944, op=0,

comm_ptr=0x619c48, errflag=0x7fffffffa880, radix=4) at /p/pdsd/scratch/Uploads/IMPI/other/software/libfabric/linux/v1.9.0/include/rdma/fi_eq.h:385

#7 0x00001555538de551 in MPIDI_OFI_Barrier_intra_multiplying (comm_ptr=<optimized out>, errflag=<optimized out>, parameters=<optimized out>)

at ../../src/mpid/ch4/include/../netmod/ofi/intel/ofi_coll_direct.h:2045

#8 MPIDI_NM_mpi_barrier (comm=<optimized out>, errflag=<optimized out>, ch4_algo_parameters_container_in=<optimized out>)

at ../../src/mpid/ch4/netmod/include/../ofi/intel/autoreg_ofi_coll.h:50

#9 MPIDI_Barrier_intra_composition_alpha (comm_ptr=<optimized out>, errflag=<optimized out>, ch4_algo_parameters_container=<optimized out>)

at ../../src/mpid/ch4/src/intel/ch4_coll_impl.h:55

#10 MPID_Barrier_invoke (comm=0x0, errflag=0x7ffffffddd50, ch4_algo_parameters_container=0x1) at ../../src/mpid/ch4/src/intel/autoreg_ch4_coll.h:26

#11 0x00001555538b0be9 in MPIDI_coll_invoke (coll_sig=0x0, container=0x7ffffffddd50, req=0x1) at ../../src/mpid/ch4/src/intel/ch4_coll_select_utils.c:3368

#12 0x0000155553890780 in MPIDI_coll_select (coll_sig=0x0, req=0x7ffffffddd50) at ../../src/mpid/ch4/src/intel/ch4_coll_globals_default.c:143

#13 0x0000155553992495 in MPID_Barrier (comm=<optimized out>, errflag=<optimized out>) at ../../src/mpid/ch4/src/intel/ch4_coll.h:31

#14 MPIR_Barrier (comm_ptr=0x0, errflag=0x7ffffffddd50) at ../../src/mpi/coll/intel/src/coll_impl.c:349

#15 0x00001555539710fd in MPIDIG_mpi_win_free (win_ptr=0x0) at ../../src/mpid/ch4/src/ch4r_win.c:790

#16 0x00001555539909c2 in MPID_Win_free (win_ptr=0x0) at ../../src/mpid/ch4/src/ch4_win.c:80

#17 0x0000155553ecedd1 in PMPI_Win_free (win=0x0) at ../../src/mpi/rma/win_free.c:111

#18 0x0000000000400e0d in main () at test.c:49
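In all three samples rank 0 sits in MPI_Win_wait (test.c:44) while rank 1 sits in the barrier inside MPI_Win_free (test.c:49); only the innermost polling frames differ. To confirm this across many saved samples, each backtrace can be reduced to its MPI entry point and its location in main(). This is a sketch with a hypothetical helper name, `summarize_backtrace`, and it assumes gdb's default frame format as shown above.

```shell
# Hypothetical helper: reduce a gdb backtrace (read from stdin) to the MPI
# entry point (the PMPI_* frame) and the source location in main().
summarize_backtrace() {
    awk '
        / PMPI_[A-Za-z_]+ \(/ {
            for (i = 1; i <= NF; i++) if ($i ~ /^PMPI_/) mpi_call = $i
        }
        / main \(.*\) at / { location = $NF }
        END { print mpi_call, location }
    '
}

# Example with a saved backtrace file (file name is illustrative):
#   summarize_backtrace < bt.host1.txt
```

Fed with the rank 0 backtrace of sample 1, this prints `PMPI_Win_wait test.c:44`.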

I submitted a lengthy response yesterday but it is not visible in the forum. I'm pretty sure it was submitted as the automatically saved draft is gone as well.

Hi,

Sorry for the inconvenience. We could not see your post. We request you to post it again.

Thanks,

Santosh

The post now has reappeared: https://community.intel.com/t5/Intel-oneAPI-HPC-Toolkit/Intel-MPI-MPI-Win-wait-hangs-forever-when-using-ofi-fabric-with/m-p/1404203#M9728

If any information is missing or I can help any further, please ask.

Hi,

>>" But they refer to the only MPI_Win_wait and MPI_Win_free calls in the code."

Could you please try placing an MPI_Barrier() before the MPI_Win_free() call?

//your code

MPI_Barrier(MPI_COMM_WORLD);

MPI_Win_free(&win);

//your code

Please try at your end and let us know if it helps.

Thanks & Regards,

Santosh

Hello,

The additional MPI_Barrier does not really change the behavior. Rank 0 still hangs in MPI_Win_wait() and rank 1 now hangs in the added MPI_Barrier.

Backtrace rank 0:

#0 MPIR_Progress_hook_exec_on_vci (vci_idx=0, made_progress=0x0) at ../../src/util/mpir_progress_hook.c:195

#1 0x00001555539252ac in MPIDI_Progress_test (flags=<optimized out>, req=<optimized out>) at ../../src/mpid/ch4/src/ch4_progress.c:88

#2 MPID_Progress_test_impl (req=0x0) at ../../src/mpid/ch4/src/ch4_progress.c:152

#3 0x0000155553eed57b in MPIDIG_mpi_win_wait (win=<optimized out>) at ../../src/mpid/ch4/src/ch4r_win.h:272

#4 MPIDI_NM_mpi_win_wait (win=<optimized out>) at ../../src/mpid/ch4/netmod/include/../ofi/ofi_win.h:164

#5 MPID_Win_wait (win=<optimized out>) at ../../src/mpid/ch4/src/ch4_win.h:125

#6 PMPI_Win_wait (win=0) at ../../src/mpi/rma/win_wait.c:87

#7 0x0000000000400e27 in main () at test.c:36

Backtrace rank 1:

#0 psmi_spin_trylock (lock=<optimized out>) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_lock.h:108

#1 psmi_spin_lock (lock=<optimized out>) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_lock.h:132

#2 psmi_mq_ipeek_inner (mq=<optimized out>, oreq=<optimized out>, status=<optimized out>, status_copy=<optimized out>) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_mq.c:1121

#3 __psm2_mq_ipeek (mq=0x0, oreq=0x7ffffffddcd0, status=0x1) at /usr/src/debug/libpsm2-11.2.204-1.x86_64/psm_mq.c:1161

#4 0x0000155550c52480 in psmx2_cq_poll_mq () from /cm/shared/apps/pc2/INTEL-ONEAPI/mpi/2021.6.0/libfabric/lib/prov/libpsmx2-fi.so

#5 0x0000155550c541b4 in psmx2_cq_readfrom () from /cm/shared/apps/pc2/INTEL-ONEAPI/mpi/2021.6.0/libfabric/lib/prov/libpsmx2-fi.so

#6 0x00001555538d35d7 in MPIDI_OFI_Allreduce_intra_direct_recursive_multiplying_template (sendbuf=0x0, recvbuf=0x7ffffffddcd0, count=1, datatype=-140072, op=0, comm_ptr=0x619c38,

errflag=0x7fffffffa7e0, radix=4) at /p/pdsd/scratch/Uploads/IMPI/other/software/libfabric/linux/v1.9.0/include/rdma/fi_eq.h:385

#7 0x00001555538de551 in MPIDI_OFI_Barrier_intra_multiplying (comm_ptr=<optimized out>, errflag=<optimized out>, parameters=<optimized out>)

at ../../src/mpid/ch4/include/../netmod/ofi/intel/ofi_coll_direct.h:2045

#8 MPIDI_NM_mpi_barrier (comm=<optimized out>, errflag=<optimized out>, ch4_algo_parameters_container_in=<optimized out>)

at ../../src/mpid/ch4/netmod/include/../ofi/intel/autoreg_ofi_coll.h:50

#9 MPIDI_Barrier_intra_composition_alpha (comm_ptr=<optimized out>, errflag=<optimized out>, ch4_algo_parameters_container=<optimized out>)

at ../../src/mpid/ch4/src/intel/ch4_coll_impl.h:55

#10 MPID_Barrier_invoke (comm=0x0, errflag=0x7ffffffddcd0, ch4_algo_parameters_container=0x1) at ../../src/mpid/ch4/src/intel/autoreg_ch4_coll.h:26

#11 0x00001555538b0be9 in MPIDI_coll_invoke (coll_sig=0x0, container=0x7ffffffddcd0, req=0x1) at ../../src/mpid/ch4/src/intel/ch4_coll_select_utils.c:3368

#12 0x0000155553890780 in MPIDI_coll_select (coll_sig=0x0, req=0x7ffffffddcd0) at ../../src/mpid/ch4/src/intel/ch4_coll_globals_default.c:143

#13 0x0000155553992495 in MPID_Barrier (comm=<optimized out>, errflag=<optimized out>) at ../../src/mpid/ch4/src/intel/ch4_coll.h:31

#14 MPIR_Barrier (comm_ptr=0x0, errflag=0x7ffffffddcd0) at ../../src/mpi/coll/intel/src/coll_impl.c:349

#15 0x000015555386f444 in PMPI_Barrier (comm=0) at ../../src/mpi/coll/barrier/barrier.c:244

#16 0x0000000000400e5d in main () at test.c:41

The modified code (this time with matching line numbers) is attached to this post.

Hi,

We are working on your issue internally and we will get back to you.

Thanks & Regards,

Santosh

I have examined your reproducer and I am also unable to reproduce this behavior, confirmed to be using Omni-Path with the psm2 provider. I have a few more suggestions you can try:

- Rerun Cluster Checker with the MPI prerequisites test:

clck -f <nodefile> -F mpi_prereq_user

- Try launching an interactive SLURM session, then running in a separate environment outside of the SLURM environment. Depending on your system configuration, you may be able to launch the interactive session and then in a different terminal SSH to one of the nodes for launch. Alternatively, you could launch an interactive session, and launch a new bash environment which does not contain the SLURM environment.

- I don't expect this to have an effect, but try setting I_MPI_ASYNC_PROGRESS=1.
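One way to approximate "a new environment which does not contain the SLURM environment" is to strip the SLURM variables for a single command. This is a sketch only: the helper name `without_slurm_env` is hypothetical, it relies on `env -u` from GNU coreutils, and treating the `SLURM_` prefix plus the two PMI variables reported earlier in this thread as the complete SLURM footprint is an assumption.

```shell
# Hypothetical helper: run a command with every SLURM_* variable removed from
# its environment, plus the two Intel MPI PMI variables that SLURM sets on
# the cluster described in this thread.
without_slurm_env() {
    unset_args=""
    for name in $(env | sed -n 's/^\(SLURM_[A-Za-z0-9_]*\)=.*/\1/p'); do
        unset_args="$unset_args -u $name"
    done
    # $unset_args is left unquoted on purpose so it splits into words.
    env $unset_args -u I_MPI_PMI_LIBRARY -u I_MPI_PMI "$@"
}

# Example from inside an interactive allocation (hosts are placeholders):
#   without_slurm_env mpirun -bootstrap ssh -n 2 -ppn 1 -hosts host1,host2 ./test
```

If the hang disappears in the stripped environment, re-adding variables one at a time would narrow down which of them matters.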

Sorry for the late response, I was out of office last week. Here are my results:

- Cluster Checker with MPI prerequisites test: The result looks fine to me. One check that cannot be run is the lscpu test. Not sure why this is now an issue and was not a couple of weeks ago. Maybe an update changed the output of lscpu which is definitely installed.

- clck_results.log

Intel(R) Cluster Checker 2021 Update 6 (build 20220318)

SUMMARY

Command-line: clck -F mpi_prereq_user

Tests Run: mpi_prereq_user

**WARNING**: 1 test failed to run. Information may be incomplete. See clck_execution_warnings.log for more information.

Overall Result: Could not run all tests.

--------------------------------------------------------------------------------

2 nodes tested: gpu-[0004-0005]

2 nodes with no issues: gpu-[0004-0005]

0 nodes with issues:

--------------------------------------------------------------------------------

FUNCTIONALITY

No issues detected.

HARDWARE UNIFORMITY

No issues detected.

PERFORMANCE

No issues detected.

SOFTWARE UNIFORMITY

No issues detected.

--------------------------------------------------------------------------------

INFORMATIONAL

The following additional information was detected:

1. network-interfaces-recomended:ethernet

Message: The Ethernet network interface is present on all nodes in the grouping. You can set this interface as default with: I_MPI_OFI_PROVIDER=tcp mpiexec.hydra

2 nodes: gpu-[0004-0005]

Test: mpi_prereq_user

2. network-interfaces-recomended:opa

Message: The Intel(R) OPA network interface is present on all nodes in the grouping. You can set this interface as default with: I_MPI_OFI_PROVIDER=psm2 mpiexec.hydra

2 nodes: gpu-[0004-0005]

Test: mpi_prereq_user

3. mpi-network-interface

Message: The cluster has 2 network interfaces (Intel(R) Omni-Path Architecture, Ethernet). Intel(R) MPI Library uses by default the first interface detected in the order of: (1) Intel(R) Omni-Path Architecture (Intel(R) OPA), (2) InfiniBand, (3) Ethernet. You can set a specific interface by setting the environment variable I_MPI_OFI_PROVIDER. Ethernet: I_MPI_OFI_PROVIDER=tcp mpiexec.hydra; InfiniBand: I_MPI_OFI_PROVIDER=mlx mpiexec.hydra; Intel(R) OPA: I_MPI_OFI_PROVIDER=psm2 mpiexec.hydra.

2 nodes: gpu-[0004-0005]

Test: mpi_prereq_user

--------------------------------------------------------------------------------

Framework Definition Dependencies

mpi_prereq_user

|- opa_user

| `- opa_base

|- cpu_user

| `- cpu_base

|- infiniband_user

| `- infiniband_base

| `- dapl_fabric_providers_present

|- memory_uniformity_user

| `- memory_uniformity_base

|- mpi_environment

|- mpi_ethernet

| `- ethernet

`- mpi_libfabric

--------------------------------------------------------------------------------

Intel(R) Cluster Checker 2021 Update 6

12:31:41 August 29 2022 UTC

Databases used: $HOME/.clck/2021.6.0/clck.db

- clck_execution_warnings.log

Intel(R) Cluster Checker 2021 Update 6 (build 20220318)
Command-line: clck -F mpi_prereq_user

RUNTIME ERRORS
Intel(R) Cluster Checker encountered the following errors during execution:

1. lscpu-data-error
Message: The 'lscpu' provider was executed but did not run successfully due to an unknown reason. The 'lscpu' data is either not parsable or the provider did not run correctly. Some CPU related analysis may not execute successfully because of this.
Remedy: Please ensure that the 'lscpu' tool is installed and the provider can successfully run.
2 nodes: gpu-[0004-0005]
Test: cpu_base

--------------------------------------------------------------------------------

Intel(R) Cluster Checker 2021 Update 6
12:31:41 August 29 2022 UTC
Databases used: $HOME/.clck/2021.6.0/clck.db

- lscpu

Architektur: x86_64
CPU Operationsmodus: 32-bit, 64-bit
Byte-Reihenfolge: Little Endian
CPU(s): 40
Liste der Online-CPU(s): 0-39
Thread(s) pro Kern: 1
Kern(e) pro Socket: 20
Sockel: 2
NUMA-Knoten: 2
Anbieterkennung: GenuineIntel
Prozessorfamilie: 6
Modell: 85
Modellname: Intel(R) Xeon(R) Gold 6148F CPU @ 2.40GHz
Stepping: 4
CPU MHz: 2185.268
Maximale Taktfrequenz der CPU: 3700,0000
Minimale Taktfrequenz der CPU: 1000,0000
BogoMIPS: 4800.00
Virtualisierung: VT-x
L1d Cache: 32K
L1i Cache: 32K
L2 Cache: 1024K
L3 Cache: 28160K
NUMA-Knoten0 CPU(s): 0-19
NUMA-Knoten1 CPU(s): 20-39
Markierungen: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3 cdp_l3 invpcid_single ssbd mba ibrs ibpb stibp fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm mpx rdt_a avx512f avx512dq rdseed adx smap clflushopt clwb intel_pt avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts hwp hwp_notify hwp_act_window hwp_epp hwp_pkg_req pku ospke md_clear flush_l1d
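The lscpu output above is localized (German labels such as "Architektur"). A possible explanation for the lscpu-data-error, which is only an assumption and is not confirmed anywhere in this thread, is that the Cluster Checker provider expects English labels. A minimal sketch to check how the labels change with the locale; the helper name `has_english_labels` is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: succeeds if lscpu-style text on stdin contains
# the English "Architecture:" label at the start of a line.
has_english_labels() {
    grep -q '^Architecture:'
}

# Compare the default locale with the plain C locale, if lscpu is available.
if command -v lscpu >/dev/null 2>&1; then
    if lscpu | has_english_labels; then
        echo "default locale: English labels"
    else
        echo "default locale: localized labels"
    fi
    if LC_ALL=C lscpu | has_english_labels; then
        echo "LC_ALL=C: English labels"
    else
        echo "LC_ALL=C: localized labels"
    fi
fi
```

If the two lines differ, rerunning `clck -F mpi_prereq_user` with `LC_ALL=C` exported might be worth a try. Whether that resolves the lscpu test is untested here.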


- Getting on the nodes via SSH is possible, so I ran the program in a separate SSH session on one of the two allocated nodes, outside the SLURM environment. The result is the same and the execution hangs:

[lass@gpu-0004 mpi-general-active-target-sync]$ mpirun -bootstrap ssh -n 2 -ppn 1 -hosts gpu-0004,gpu-0005 ./a.out
Before wait
[hangs]

- I_MPI_ASYNC_PROGRESS=1, as you expected, does not change the behavior.
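When comparing several settings, it can help to let the shell detect the hang instead of watching the terminal. The following is a minimal sketch, assuming GNU coreutils `timeout` is available on the node; the helper name `detect_hang` and the 60-second limit are hypothetical choices, not something used in the runs above:

```shell
#!/bin/sh
# Hypothetical helper: run a command with a time limit (in seconds) and
# report whether it finished or had to be stopped by timeout.
detect_hang() {
    limit="$1"
    shift
    timeout "$limit" "$@"
    status=$?
    # GNU timeout exits with status 124 when the time limit was reached.
    if [ "$status" -eq 124 ]; then
        echo "HANG (no exit within ${limit}s)"
    else
        echo "exit status $status"
    fi
    return "$status"
}

# Example invocation (commented out so the sketch has no side effects):
# detect_hang 60 mpirun -bootstrap ssh -n 2 -ppn 1 -hosts gpu-0004,gpu-0005 ./a.out
```

The same call can be repeated with different environment variables set in front of it to see which settings avoid the hang.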
