OpenFOAM is the Open Source CFD Toolbox.

With Intel Parallel Studio 2018u3, I can run the OpenFOAM test case successfully.

However, a fatal error occurs when I use OneAPI HPCKit_p_2021.1.0.2684.

Why does OneAPI report this error, and how can I fix it?

The fatal error is:

Abort(740365582) on node 0 (rank 0 in comm 0): Fatal error in PMPI_Allgatherv: Message truncated, error stack:

PMPI_Allgatherv(437)..........................: MPI_Allgatherv(sbuf=0x21a8280, scount=1007, MPI_INT, rbuf=0x21c0820, rcounts=0x265dc00, displs=0x265dc10, datatype=MPI_INT, comm=comm=0x84000003) failed

MPIDI_Allgatherv_intra_composition_alpha(1764):

MPIDI_NM_mpi_allgatherv(394)..................:

MPIR_Allgatherv_intra_recursive_doubling(75)..:

MPIR_Localcopy(42)............................: Message truncated; 4028 bytes received but buffer size is 4008

icc -v

icc version 2021.1 (gcc version 7.3.0 compatibility)

mpirun --version

Intel(R) MPI Library for Linux* OS, Version 2021.1 Build 20201112 (id: b9c9d2fc5)

Copyright 2003-2020, Intel Corporation.

MPI debug info (I_MPI_DEBUG=10):

[0] MPI startup(): Intel(R) MPI Library, Version 2021.1 Build 20201112 (id: b9c9d2fc5)

[0] MPI startup(): Copyright (C) 2003-2020 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[0] MPI startup(): Size of shared memory segment (112 MB per rank) * (8 local ranks) = 902 MB total

[0] MPI startup(): libfabric version: 1.11.0-impi

[0] MPI startup(): libfabric provider: verbs;ofi_rxm

[0] MPI startup(): Rank Pid Node name Pin cpu

[0] MPI startup(): 0 1610443 Hnode5 {0,1,2,3,7,8}

[0] MPI startup(): 1 1610444 Hnode5 {12,13,14,18,19,20}

[0] MPI startup(): 2 1610445 Hnode5 {4,5,6,9,10,11}

[0] MPI startup(): 3 1610446 Hnode5 {15,16,17,21,22,23}

[0] MPI startup(): 4 1610447 Hnode5 {24,25,26,27,31,32}

[0] MPI startup(): 5 1610448 Hnode5 {36,37,38,42,43,44}

[0] MPI startup(): 6 1610449 Hnode5 {28,29,30,33,34,35}

[0] MPI startup(): 7 1610450 Hnode5 {39,40,41,45,46,47}

[0] MPI startup(): I_MPI_ROOT=/Oceanfile/kylin/Intel-One-API/mpi/2021.1.1

[0] MPI startup(): I_MPI_MPIRUN=mpirun

[0] MPI startup(): I_MPI_HYDRA_TOPOLIB=hwloc

[0] MPI startup(): I_MPI_INTERNAL_MEM_POLICY=default

[0] MPI startup(): I_MPI_DEBUG=10

This issue has been resolved and we will no longer respond to this thread. If you require additional assistance from Intel, please start a new thread. Any further interaction in this thread will be considered community only

Hi Chen,

It looks like there is a mismatch between the expected and the received buffer size.

The receive buffer slot appears to hold only 4008 bytes, while the data arriving in it is 4028 bytes.

Please check that the receive buffer size and the recvcounts entries match what every rank actually sends.

In your log, scount is 1007 and 4028 bytes were received. With 4-byte ints, 1007 * sizeof(int) = 4028, so the 4028 bytes are one rank's contribution, while the 4008-byte slot has room for only 1002 ints. If every rank sends 1007 ints, rbuf should be allocated as:

rbuf = (int *)malloc(global_size*1007*sizeof(int));

Could you please provide the code snippet that contains this MPI_Allgatherv call?

That would help us a lot in debugging the error.
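As a rough sketch of the sizing logic for MPI_Allgatherv: each rank's slot in rbuf must hold exactly what that rank sends, so the buffer is sized by the sum of the per-rank counts rather than by a single count. The helpers below compute packed displacements and flag the first rank whose send count differs from the receive count recorded for it. The names pack_displs and first_count_mismatch are hypothetical and are not part of MPI or PT-Scotch. In a real program the per-rank counts would typically be exchanged first, for example with MPI_Allgather on each rank's local count.

```c
#include <assert.h>
#include <stddef.h>

/* Fill displs[] with the running sum of recvcounts[] and return the total
 * number of elements the receive buffer must hold.
 * Hypothetical helper for illustration only. */
static size_t pack_displs(const int *recvcounts, int *displs, int nranks)
{
    assert(recvcounts != NULL && displs != NULL && nranks > 0);
    size_t total = 0;
    for (int i = 0; i < nranks; ++i) {
        assert(recvcounts[i] >= 0);
        displs[i] = (int)total;          /* slot i starts where slot i-1 ended */
        total += (size_t)recvcounts[i];
    }
    return total;
}

/* Return the index of the first rank whose send count differs from the
 * receive count recorded for it, or -1 if all counts agree.
 * Hypothetical helper for illustration only. */
static int first_count_mismatch(const int *sendcounts, const int *recvcounts,
                                int nranks)
{
    assert(sendcounts != NULL && recvcounts != NULL && nranks > 0);
    for (int i = 0; i < nranks; ++i) {
        if (sendcounts[i] != recvcounts[i])
            return i;
    }
    return -1;
}
```

With the values from the log, a rank that sends 1007 ints into a slot recorded as 1002 ints would be reported by first_count_mismatch, and rbuf would then be allocated with malloc(total * sizeof(int)) using the total returned by pack_displs once the counts agree.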

Regards

Prasanth

Hi Chen,

We haven't heard back from you.

Let us know if your issue is resolved and the given workaround fixed the issue.

Regards

Prasanth

Hi Prasanth,

Sorry for the late reply. I spent a lot of time trying to solve this problem.

The OpenFOAM source code is complex and depends on several third-party libraries.

Fortunately, I found that a third-party library, scotch_6.0.6, causes the problem.

I think the root cause is an overly aggressive optimization applied by mpiicc with the -O3 option.

Here are some tests to recompile scotch_6.0.6:

| Software stack | Compiler | Compilation options | Issue occurs |
| --- | --- | --- | --- |
| gcc9.3 and openmpi4.0.3 | mpicc | -O3 | no |
| OneAPI HPCKit_p_2021.1.0.2684 | mpicc | -O3 | no |
| OneAPI HPCKit_p_2021.1.0.2684 | mpiicc | -O1 | no |
| OneAPI HPCKit_p_2021.1.0.2684 | mpiicc | -O3 | yes |

Intel MPI provides both mpiicc and mpicc.

My question is, what is the difference between them?

With the -O3 option, which mpiicc optimization might cause this error?

Thanks for your help.

Regards

Chen

Hi Chen,

So you see the issue only when -O3 optimization is enabled.

As you said, the error may be caused by an optimization. We will look into what might be causing it.

The difference between mpicc and mpiicc is that mpiicc uses the Intel compilers (icc) and mpicc uses the GNU compilers (gcc).

Could you also please test with -O2? That would be helpful.

Regards

Prasanth

Hi Prasanth,

Unfortunately, using mpiicc with the -O2 option still causes this error.

For better performance, I currently use mpicc to compile programs.

Regards

Chen

Hi Chen,

We have tried to reproduce the issue.

I downloaded Thirdparty-7 from the OpenFOAM repository and built scotch6.0.6.

I replaced Makefile.inc with Makefile.inc.x86-64_pc_linux2.icc.impi and built scotch.

It ran fine, but all optimizations used were -O0.

Could you let me know how to reproduce your error? The error seems to be related to the receive buffer, yet it did not occur for you with other optimization levels or with GCC + OpenMPI.

Regards

Prasanth

Hi Prasanth,

You can reproduce the problem by performing the following steps:

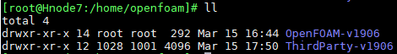

- Download the OpenFOAM and third-party library source code

https://altushost-swe.dl.sourceforge.net/project/openfoam/v1906/OpenFOAM-v1906.tgz

https://altushost-swe.dl.sourceforge.net/project/openfoam/v1906/ThirdParty-v1906.tgz

- Decompress the source code package to the same directory

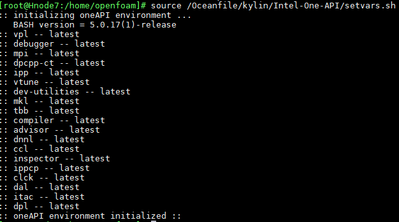

- Configuring the Environment Variables of the Intel OneAPI

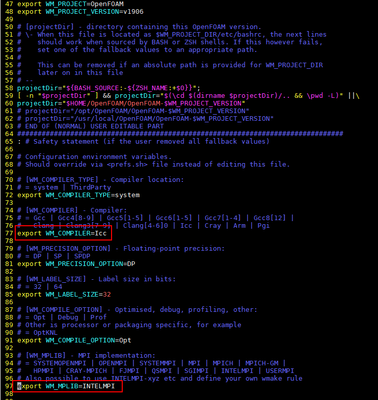

- Configuring OpenFOAM Environment Variables

cd OpenFOAM-v1906

vim etc/bashrc

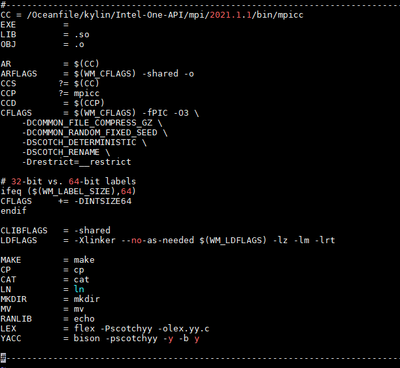

Modify the configuration file to use the Intel OneAPI.

After the modification, load environment variables.

source etc/bashrc

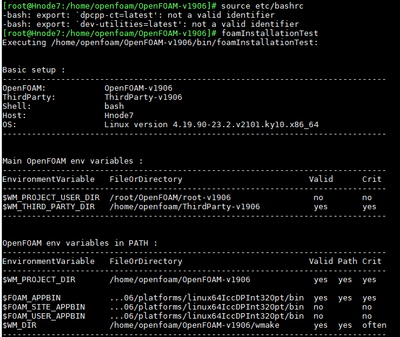

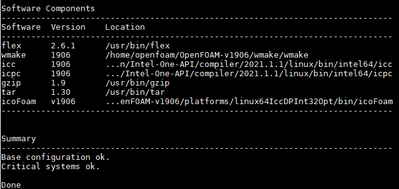

When you load the environment variables for the first time, a message says that the software is not installed. This message can be safely ignored.

- Compile the program using the built-in script.

./Allwmake -j 8 -s -k -q

Compilation takes a long time, possibly up to 2 hours.

After the compilation is complete, load the environment variables again.

Check whether OpenFOAM was installed successfully.

(At this point scotch6.0.6 has been compiled by mpiicc with the -O3 option.)

- Run test cases to reproduce the problem

cd tutorials/incompressible/pisoFoam/LES/motorBike/motorBike/

./Allclean

./Allrun

You can see the error information in the log.snappyHexMesh file.

- Recompile scotch_6.0.6

cd ThirdParty-v1906/scotch_6.0.6/src

Modify the Makefile.inc file and use mpicc.

Compile the library files and add the newly generated library to LD_LIBRARY_PATH.

make clean && make libptscotch -j

cd libscotch

export LD_LIBRARY_PATH=/home/openfoam/ThirdParty-v1906/scotch_6.0.6/src/libscotch:$LD_LIBRARY_PATH

- Run test cases

cd OpenFOAM-v1906/tutorials/incompressible/pisoFoam/LES/motorBike/motorBike

./Allclean

./Allrun

If all goes well, the test cases can run properly.

I hope this case is helpful to Intel OneAPI.

Hi Chen,

Thanks for providing the steps. They were very helpful.

We are looking into it and will get back to you.

Regards

Prasanth

Hello,

I can reproduce the issue but it looks like an issue in the application code of ptscotch.

Please feel free to attach our ITAC message checker tool (export LD_PRELOAD=libVTmc.so:libmpi.so), which will report the following.

[0] WARNING: LOCAL:MEMORY:OVERLAP: warning

[0] WARNING: Data transfer addresses the same bytes at address 0x195a3f4

[0] WARNING: in the receive buffer multiple times, which is only

[0] WARNING: allowed for send buffers.

[0] WARNING: Control over new buffer is about to be transferred to MPI at:

[0] WARNING: MPI_Allgatherv(*sendbuf=0x1911b04, sendcount=191, sendtype=MPI_INT, *recvbuf=0x195a3f4, *recvcounts=0x33353f8, *displs=0x33353e0, recvtype=MPI_INT, comm=0xffffffffc4000000 SPLIT COMM_WORLD [0:3])
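To illustrate what this warning means, here is a minimal sketch of the condition being checked: in MPI_Allgatherv, the slot for rank i covers elements displs[i] up to displs[i] + recvcounts[i] of the receive buffer, and no two slots may share an element. The function name recv_slots_overlap is hypothetical; this is not how the ITAC checker is implemented, only the same idea expressed as a pairwise interval test.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Return true if any two non-empty receive slots
 * [displs[i], displs[i] + recvcounts[i]) share at least one element.
 * Hypothetical helper for illustration; simple O(n^2) pairwise check. */
static bool recv_slots_overlap(const int *recvcounts, const int *displs,
                               int nranks)
{
    assert(recvcounts != NULL && displs != NULL && nranks >= 0);
    for (int i = 0; i < nranks; ++i) {
        for (int j = i + 1; j < nranks; ++j) {
            if (recvcounts[i] == 0 || recvcounts[j] == 0)
                continue;                 /* empty slots cannot overlap */
            long lo_i = displs[i], hi_i = lo_i + recvcounts[i];
            long lo_j = displs[j], hi_j = lo_j + recvcounts[j];
            if (lo_i < hi_j && lo_j < hi_i)
                return true;              /* half-open intervals intersect */
        }
    }
    return false;
}
```

Calling such a check on recvcounts and displs just before the MPI_Allgatherv call in the application would show whether the displacements are inconsistent with the counts on a given rank.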

Best regards,

Michael
