- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi

I had a problem with Pardiso in the past (https://software.intel.com/en-us/forums/intel-math-kernel-library/topic/538908?language=en-us&https=1) and thanks to Alex, we were able to come out a solution in 2015.

Now I am running on an i7-8700k 3.70GHz 6-core 12-thread PC, and we are using mkl 2017 update 3. We found out that Pardiso does not scale at all. NOTE the time below is for the solve time, since we factorize the matrix once and solve thousands of times.

The testing matrix has DOF 5811 and 618378 non-zero element (sparsity 1.8%). It was ordered through Metis.

I setup the options, load the matrix, factorize the matrix, AND then I solve the same RHS 1000 times.

Here is what the problems.

1) When I set the MKL thread number to 6 (the number of physical cores), and no matter what value of i in the function Domain_Set_Num_Threads(i, MKL_DOMAIN_PARDISO), Pardiso decided to run 6 cores.

The code is like this (where NT is the number of threads, 1, 2, 4, 6)

GetMKL_Service()->Set_Num_Threads(6);

GetMKL_Service()->Domain_Set_Num_Threads(NT, 4); //4 stands for MKL_DOMAIN_PARDISO

SetOption(3, NT); // set Pardiso Option[3] to NT;

Here is the task manager screenshot

https://drive.google.com/file/d/1-hNBA2a82qIyy4DiZ0WXSveRqGuWZ2yN/view?usp=sharing

NOTE a) There are lots of red internal operation while Pardiso is running. b) the memory keeps creeping up even though I called phase=-1 after test on each number of threads.

The times are here

1 threads are running for Pardiso.solve 1000 times

Pardiso.solve takes: 8.6750000000 s to run on 1 threads.

2 threads are running for Pardiso.solve 1000 times

Pardiso.solve takes: 8.1410000000 s to run on 2 threads.

4 threads are running for Pardiso.solve 1000 times

Pardiso.solve takes: 8.1460000000 s to run on 4 threads.

6 threads are running for Pardiso.solve 1000 times

Pardiso.solve takes: 7.9120000000 s to run on 6 threads.

Pardiso scaling 1.0000000220 1 threads

Pardiso scaling 1.0655939308 2 threads

Pardiso scaling 1.0649398712 4 threads

Pardiso scaling 1.0964358178 6 threads

So there is pretty much no gain in the solve time jumping from 1thread to 6 threads

2) When I set the MKL and Pardiso to both use the i number of threads

GetMKL_Service()->Set_Num_Threads(NT);

GetMKL_Service()->Domain_Set_Num_Threads(NT, 4); //4 stands for MKL_DOMAIN_PARDISO

SetOption(3, NT); // set Pardiso Option[3] to NT;

here is the task manager screenshot.

https://drive.google.com/file/d/1H377rFFTYmEqWxTvuzGUnJhBMqD2aEZ1/view?usp=sharing

NOTE 1) at least now the Pardiso is running with different threads, consistent with Domain_Set_Num_Threads, and Pardiso Option[3]. 2) there are still lots of red spinning thread there. 3) the memory still crept up after each run.

the data is here.

1 threads are running for Pardiso.solve 1000 times

Pardiso.solve takes: 8.3920000000 s to run on 1 threads.

2 threads are running for Pardiso.solve 1000 times

Pardiso.solve takes: 7.7710000000 s to run on 2 threads.

4 threads are running for Pardiso.solve 1000 times

Pardiso.solve takes: 7.6470000000 s to run on 4 threads.

6 threads are running for Pardiso.solve 1000 times

Pardiso.solve takes: 8.0690000000 s to run on 6 threads.

Pardiso scaling 1.0000000236 1 threads

Pardiso scaling 1.0799125207 2 threads

Pardiso scaling 1.0974238523 4 threads

Pardiso scaling 1.0400297680 6 threads

We still use Pardiso the same as we posted https://software.intel.com/en-us/forums/intel-math-kernel-library/topic/538908?language=en-us&https=1. We want Padiso to use NT number of threads, but other MKL functions (GEMM, GESVD, etc) to use 1 thread, because they are parallelized through TBB. But we couldnot let it happen.

The tested matrix can be downloaded from here (and it is attached as a zip file)

https://drive.google.com/file/d/1wcl8cRaKq704-nFwlScgLTbTIdmhIWdd/view?usp=sharing

The format is like this

# of rows

IA

# of NNZ

JA, Valr, Vali

You read it in like this

std::ifstream is("DSparseDebug.txt", std::ios_base::in);

if (is) {

float val, valr, vali;

is >> val;

m_nRows = val - 1;

m_nCols = m_nRows;

m_row_ptr.resize(m_nRows + 1);

for (int ir = 0; ir < m_nRows + 1; ++ir)

is >> m_row_ptr[ir];

is >> val;

m_nnz = int(val);

m_col_ind.resize(m_nnz);

m_val.resize(m_nnz);

for (int iz = 0; iz < m_nnz; ++iz) {

is >> val >> valr >> vali;

m_col_ind[iz] = val;

m_val[iz] = complex(valr, vali);

}

}

For Padiso's defense, I did see some speedup in the factorization step.

Factor Step:

Pardiso scaling 1.0000000019 1 threads

Pardiso scaling 1.5593220369 2 threads

Pardiso scaling 2.1904761947 4 threads

Pardiso scaling 2.4864864913 6 threads

However, in our application, we use the matrix as preconditioner so we solve the matrix thousands of times.

And one of my coworker pointed out that in the manual:

IPARM (3) — Number of processors. Input On entry: IPARM(3) must contain the number of processors that are available for parallel execution. The number must be equal to the OpenMP environment variable OMP NUM THREADS. Note: If the user has not explicitly set OMP NUM THREADS, then this value can be set by the operating system to the maximal numbers of processors on the system. It is therefore always recommended to control the parallel execution of the solver by explicitly setting OMP NUM THREADS. If fewer processors are available than specified, the execution may slow down instead of speeding up. There is no default value for IPARM(3).

I never set the OMP NUM THREADS, so IPARM(3) cannot be equal to OMP NUM THREADS. will this cause some problem? And I found this two pages that are quite different for IPARM[3], which one should I use?

https://software.intel.com/en-us/mkl-developer-reference-fortran-pardiso-iparm-parameter

https://pardiso-project.org/manual/manual.pdf

in Intel manual, iparm(3): Reserved. Set to zero... So how do I set Pardiso to run with different number of threads than the number of physical cores.

thanks

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Yuan,

Thank you for the report and test matrix. As I understand, here are two problem.

1) Pardiso does not scale well

2) possible memory leak.

about 1),

How do you link mkl in your program? Could you please provide us the test.cpp and command line?

We had gotten the issue about the pardiso performance , which we improve in latest version MKL 2018 update 2. for example,

https://software.intel.com/en-us/articles/intel-math-kernel-library-intel-mkl-2018-bug-fixes-list

| MKLD-2776 | Improved the performance of Intel MKL Pardiso routine in the case of multiple RHS and linking with Intel® Threaded Building Blocks (Intel® TBB) threading layer. |

If possible, could you please try the latest 2018u2 version at your side?

2) about memory leak, we may investigate it when we got the test case , you may upload it by Official channel : Online Service Center - Intel, if the file is protected.

Best Regards,

Ying

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi, Ying

Sorry to say that the Online Service Center - Intel is not the most helpful website that I accessed. I tried Chrome, Firefox, and Edge, at all of which "create a support account" was greyed out so I could not type in my name and create an account.

Anyway, here is the file, NOTE here are the steps to run it.

1) testPardiso() is the function to call

2) I will have to set the two function GetMKL_Service()->Set_Num_Threads(m_NT); GetMKL_Service()->Domain_Set_Num_Threads(m_NT, 4); to let Pardiso to run with m_NT (the number of threads that I specify). And then the memory leak will show up. If I do not have those two functions, Pardiso will utilize all the physical cores, which is not always the case we want. There are still memory increase after each run (1000 solve), but it is much more contained.

3) In SetupMatrixRhsX(), you will have to replace the std::string str = "C:\\DSparseDebug.txt"; to wherever the txt data file is.

4) I am still confused with IPARM(3). It used to set up the number of cores in 2015; in the most recent MKL manual, it is reserved. So now Pardiso use the same threads as MKL_thread?

5) I am running with 1-RHS, so I do not know if MultiRHS fix will be helpful. We are running on MKL 2018 update 1, FYI. We sure can try to build update 2, but we want to get confirmation before we invest time and efforts to do it.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Yuan,

Thank for the test code and feedback.

Are you linking TBB libmkl_tbb_thead, under linux?

I'm afraid these seting of mkl_num_threads is only valid for OpenMP threads.

// Numbers of processors, value of OMP_NUM_THREADS

iparm[2] = 0;

for example, if I use OpenMP thread

g++ --std=c++0x -m64 -w -I"/opt/intel/mkl/include" \

./main.cpp \

-L"/opt/intel/""mkl/lib/intel64" \

-lmkl_intel_lp64 \

-lmkl_intel_thread \

-lmkl_core \

-lm \

-L"/opt/intel/""compiler/lib/intel64" \

-liomp5 \

-lpthread -lm -ldl \

-o test_pardiso

Pardiso.solve takes: 0.0548460000 s to run on 4 threads.

Pardiso scaling 0.9999999807 1 threads

Pardiso scaling 0.4563384025 2 threads

Pardiso scaling 0.2446851136 4 threads

It seems run fine with threads. We will investigate the tbb part and let you know if any new result.

Best Regards,

Ying

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Ying

This is encouraging!! thanks.

The problem was observed under Visual Studio 2017 15.5.3. Thanks

Let's go to VS. Would you please elaborate "the seting of mkl_num_threads is only valid for OpenMP threads. "... do you mean we did not link the iomp5 library?

when you set iparm[2] = 0; do I just call omp_set_num_threads(NT) to control the code? I played with OMP_NUM_THREADS before https://software.intel.com/en-us/mkl-windows-developer-guide-setting-the-number-of-threads-using-an-openmp-environment-variable , either set it in VS, in control panel, through cmd, nothing happened. which is why we use the mkl_set_num_threads().

Here is another issue, though. In https://software.intel.com/en-us/forums/intel-math-kernel-library/topic/538908?language=en-us&https=1 , # of threads Pardiso uses is different from that of mkl or tbb, which is why alex suggested us to use Domain_Set_Num_Threads(i, MKL_DOMAIN_PARDISO). Can we still use the function to control the code?

Thanks for your respond, we now think something was wrong when we compile and link the code.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi, Ying

Any update?

In my original post, when I give out the

Pardiso scaling 1.0400297680 6 threads

I mean Pardiso runs 1.04x faster on 6 threads (physical cores), comparing to 1 thread.

In your last post, when you said

Pardiso scaling 0.2446851136 4 threads

Does it mean Pardiso took about a quarter of the time on 4 threads, compared with that on 1 thread? or it means the parallel-efficiency is 0.244?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Yuan,

Case A: MKL using TBB threading

I can see almost same result as yours under MSVC 2015 windows. , MKL is using TBB threading. In the Case, the thread control like omp_set_num_threads, DOMAIN THREADS etc are not valid. All of codes should use all of tbb threading. Thus you can't see the scalability .

Case B: MKL using OpenMP threading (by default)

If switch the TBB as NO, then MKL will use OpenMP by default. In the case the omp_set_num_threads, DOMAIN_PARDISO etc setting will works.

In last post : Pardiso scaling 0.2446851136 4 threads , the result is from Linux, As you see, it looks abnormal . so i take some times to investigate.

The final result shows the time measure clock() is not suitable in multi-threads environments. I did change it ,

double t = dsecnd();

for (iRun = 0; iRun < nRun; ++iRun)

{

PardisoRun.Solve();

}

// auto end = std::chrono::steady_clock::now();

double t0 = dsecnd() - t;

// auto fp_ms = end - start;

//double t0 = double(t) / CLOCKS_PER_SEC;

speedup.push_back(t0);

The result looks scalable now

yhu5@dell-r640:~/mkl2018_issue/NI$ icc main.cpp -mkl -I. -std=c++11 -o omp

yhu5@dell-r640:~/mkl2018_issue/NI$ ./omp

MKL 2019, minor 0, update 0, version 20190000, build date 20180312

start test run

1 threads are running for Pardiso.solve 10 times

Pardiso.solve takes: 0.2061377550 s to run on 1 threads.

2 threads are running for Pardiso.solve 10 times

Pardiso.solve takes: 0.1156833507 s to run on 2 threads.

4 threads are running for Pardiso.solve 10 times

Pardiso.solve takes: 0.1247158526 s to run on 4 threads.

Pardiso scaling 1.0000000316 1 threads

Pardiso scaling 1.7819138208 2 threads

Pardiso scaling 1.6528593379 4 threads (here seems still have scability issue, we are investigating , considering different machine, KMP_AFFINITY etc)

yhu5@dell-r640:~/mkl2018_issue/NI$ icc main.cpp -lmkl_intel_lp64 -lmkl_tbb_thread -lmkl_core -ltbb -I. -std=c++11 -o tbb

yhu5@dell-r640:~/mkl2018_issue/NI$ ./tbb

MKL 2019, minor 0, update 0, version 20190000, build date 20180312

start test run

1 threads are running for Pardiso.solve 10 times

Pardiso.solve takes: 0.1915764399 s to run on 1 threads.

2 threads are running for Pardiso.solve 10 times

Pardiso.solve takes: 0.1791000580 s to run on 2 threads.

4 threads are running for Pardiso.solve 10 times

Pardiso.solve takes: 0.1721397322 s to run on 4 threads.

Pardiso scaling 0.9999999806 1 threads

Pardiso scaling 1.0696614968 2 threads

Pardiso scaling 1.1129123634 4 threads

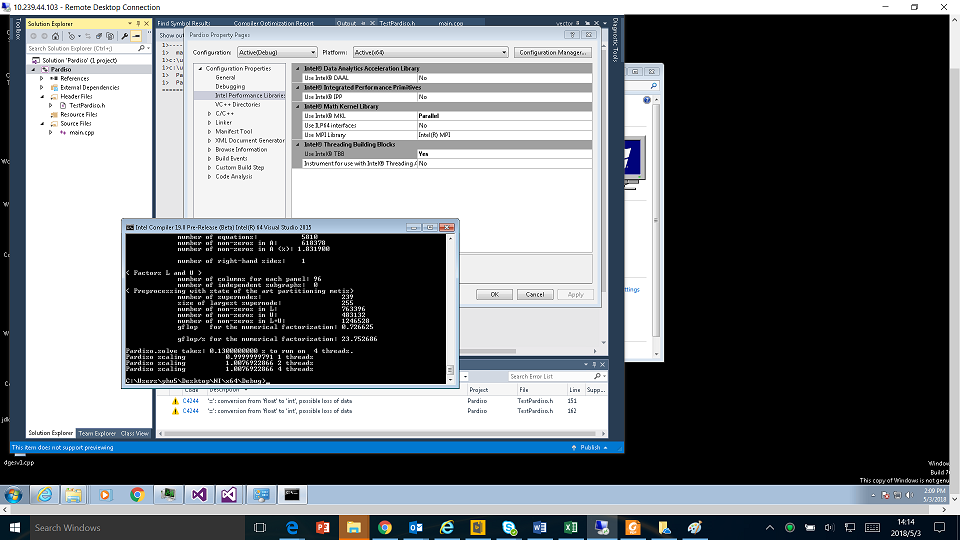

Here is windows screencopy

Best Regards,

Ying

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi, Ying

Thanks for digging into different setups and explaining to us.

As I mentioned in 2015, we use TBB intensively and Pardiso is only a small portion of our solver. In each iteration, we do thousands (or hundreds of thousands) of small GEMM (using TBB parallelization) together with one Pardiso call.

So

1) In the 2018 update 2, Pardiso, MKLD-2776 Improved the performance of Intel MKL Pardiso routine in the case of multiple RHS and linking with Intel® Threaded Building Blocks (Intel® TBB) threading layer. Will it make Pardiso work together with TBB?

2) How do I "switch the TBB as NO", on the fly, and then turn it back on after Pardiso solve? Would you mind sharing your code with us? Our TBB threads have lots of cached data and it took quite some time to set up and release... Is it possible to turn on and off the TBB threading without destructing the thread, efficiently? I tried to put

tbb::task_scheduler_init* tbbs = new tbb::task_scheduler_init(1);

in front of omp_set_num_threads(NT), unfortunately, it did not change a thing.

I am pretty sure that back in 2015, TBB and Pardiso worked fairly seamlessly since we have been using the DOMAIN_PARDISO back then and was able to report decent parallel efficiency. Something must have changed in the past years...

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Yuan ,

Just update , MKL release a new version: 2019. You are welcomed to try this version.

About the performance issues. i recalled we discuss it. copy here for other's reference.

Best Regards,

Ying

If slightly rewrite the code to measure solving step only (initially you measure additional calls in algorithm) i got following numbers:

Pardiso.solve takes: 0.0697835851 s to run on 4 threads.

Pardiso scaling 1.0000000226 1 threads

Pardiso scaling 1.1120671299 2 threads

Pardiso scaling 1.1797417078 4 threads

make: warning: Clock skew detected. Your build may be incomplete.

As one can see there is no negative scaling. Taking into account that matrix is small and have huge number of nonzero in factors (more than 3%) I think that this is not an issue but expected behavior.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yuan, have you had a chance to check if this problem exists with the latest version 2019.3?

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page