Hi,

I am using the project https://github.com/kholia/RC4-40-brute-pdf to test an Intel Xeon Phi coprocessor (60 cores at 1 GHz each, 234 threads).

The project brute-forces 40-bit RC4 keys. The offloaded code that runs on the coprocessor is shown below (I moved the "#pragma omp parallel for" directive to the outermost loop):

    #pragma offload target(mic) in(keyHash:length(keyHashLen)) in(keyHashLen)
    {
        #pragma omp parallel for
        for (int i = is; i <= 255; i++) {
            for (int j = js; j <= 255; j++) {
                for (int k = ks; k <= 255; k++) {
                    for (int l = ls; l <= 255; l++) {
                        for (int m = ms; m <= 255; m++) {
                            unsigned char hashBuf[5];
                            hashBuf[0] = (char)i;
                            hashBuf[1] = (char)j;
                            hashBuf[2] = (char)k;
                            hashBuf[3] = (char)l;
                            hashBuf[4] = (char)m;
                            unsigned char output[32];
                            RC4_KEY arc4;
                            RC4_set_key(&arc4, 5, hashBuf);
                            RC4(&arc4, 32, padding, output);
                            if (memcmp(output, keyHash, 32) == 0) {
                                printf("Key is : ");
                                print_hex(hashBuf, 5);
                                exit(0);
                            }
                        }
                    }
                }
            }
        }
    }

Additional options for the MIC offload compiler: "-O2 -no-prec-div -ipo". Additional options for the MIC offload linker: "-L\"c:\openssl\mic\lib\" -lssl -lcrypto".

My main question is: why does it run twice as slowly on the coprocessor as on the host computer with 2 cores (2.5 GHz each, 24 threads)?

I tried removing the RC4 calls, and separately the comparison, but the result did not change (still twice as slow).

Please help me understand what is going on.

Regards,

Alexander.

What are the values for: is, js, ks, ls?

>> computer with 2 cores (2.5Gz per each, 24 threads)?

Do you mean 2 CPUs, each with 6 cores, 2 threads per core? (2 x 6 x 2 = 24 hardware threads)

The KNC processor runs at ~1.3 GHz. The scalar integer work in the above loops is likely to saturate the KNC cores with 2 hardware threads per core, so you might want to set

KMP_AFFINITY=scatter

OMP_NUM_THREADS=120

Depending on the values of is, js, ks, ls, you might want to collapse (join) multiple loops

#pragma omp parallel for collapse(2) // or (3) or (4) or (5)
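To make the effect of collapse concrete, here is a minimal sketch (not the project's code) in which the RC4 test is replaced by a simple counter. The function name count_pairs is hypothetical. With collapse(2), the runtime distributes the combined (i, j) iteration space, up to 256 x 256 = 65536 iterations, rather than only the 256 iterations of the outer loop.

```c
#include <assert.h>

/* Hypothetical stand-in for the key search: counts the (i, j) pairs visited.
   collapse(2) merges both loops into one iteration space before it is
   divided among the threads. Without OpenMP the pragma is ignored and the
   result is the same. */
static long count_pairs(int is, int js) {
    assert(is >= 0 && is <= 255 && js >= 0 && js <= 255);
    long visited = 0;
    #pragma omp parallel for collapse(2) reduction(+:visited)
    for (int i = is; i <= 255; i++) {
        for (int j = js; j <= 255; j++) {
            visited += 1;  /* the real code would test one key prefix here */
        }
    }
    return visited;
}
```

Calling count_pairs(0, 0) visits all 65536 pairs, so each of 240 threads would receive roughly 273 iterations instead of one or two.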

If this is your first parallel region, keep in mind that the run time of the first parallel region includes the time to start the process's OpenMP thread pool. More threads on the KNC means more overhead. The first offload also has the overhead of copying the offload code onto the KNC. To correct for this, time the above offload in a loop so that you can observe the first run time separately from the 2nd and 3rd. This should give you a better idea of performance.

1) use collapse(n) (you determine best n)

2) use timing loop that iterates at least twice around your first offload

3) for this test use 2 threads per core
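The timing loop in point 2 could be sketched as follows. This is an illustration under assumptions, not code from the project: sample_kernel is a hypothetical stand-in for the offloaded parallel region, and time_repeated and now_seconds are helper names invented here. Wall time comes from the C11 function timespec_get.

```c
#include <assert.h>
#include <time.h>

/* Wall-clock time in seconds, using C11 timespec_get. */
static double now_seconds(void) {
    struct timespec ts;
    timespec_get(&ts, TIME_UTC);
    return (double)ts.tv_sec + (double)ts.tv_nsec * 1e-9;
}

/* Hypothetical stand-in for the offloaded parallel region. */
static void sample_kernel(void) {
    volatile unsigned long sink = 0;
    for (unsigned long n = 0; n < 1000000UL; n++) {
        sink += n;
    }
}

/* Runs kernel() `runs` times and records each run's wall time separately,
   so the first run (which pays the start-up cost) can be compared with
   the later ones. */
static void time_repeated(void (*kernel)(void), int runs, double *seconds) {
    assert(kernel != NULL && runs > 0 && seconds != NULL);
    for (int r = 0; r < runs; r++) {
        double start = now_seconds();
        kernel();
        seconds[r] = now_seconds() - start;
    }
}
```

With three runs, seconds[0] includes thread-pool start-up and the code transfer, while seconds[1] and seconds[2] are closer to the steady-state cost. Note that the original loop calls exit(0) on a match, so a real measurement would need the region to return instead.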

Note: if your code is not amenable to looping the timed section, then add an offload at the beginning of main that contains a parallel region. Make sure that this code is not optimized out. Something like:

    #pragma offload target(mic)
    {
        #pragma omp parallel
        if(omp_get_thread_num() == -1) exit(-1);
    }

Jim Dempsey

As usual, Jim's points are all good. (I'd add that even if `is` is zero, unless you collapse the loops there is at most 256-way parallelism, so you will have significant imbalance with 240 threads, and as soon as `is` is greater than 16 there are threads with no work at all!)
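The imbalance can be put into numbers with a small sketch. It assumes every iteration costs the same, the search runs to completion, and iterations are split as evenly as possible. The helper names are hypothetical.

```c
#include <assert.h>

/* Largest number of iterations any single thread receives when `iters`
   iterations are split as evenly as possible over `threads` threads. */
static long max_iters_per_thread(long iters, long threads) {
    assert(iters >= 0 && threads > 0);
    return (iters + threads - 1) / threads;
}

/* Number of threads that receive no iterations at all. */
static long idle_threads(long iters, long threads) {
    assert(iters >= 0 && threads > 0);
    return iters < threads ? threads - iters : 0;
}

/* Fraction of total thread time spent on useful work, assuming all
   threads wait for the most heavily loaded one. */
static double efficiency(long iters, long threads) {
    assert(iters > 0 && threads > 0);
    long longest = max_iters_per_thread(iters, threads);
    return (double)iters / ((double)threads * (double)longest);
}
```

For 256 outer iterations on 240 threads, the busiest thread runs 2 iterations, so efficiency(256, 240) is about 0.53. Collapsing two loops gives 65536 iterations, the busiest thread runs 274, and efficiency(65536, 240) rises above 0.99.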

I'll just make one more: you exit as soon as you find a match (memcmp returns zero). That means that as you change the number of threads, you likely also change the amount of work done before the match is found (assuming an answer exists...). As an example, suppose that the answer occurs at i=4 and we start from is=0. With 64 threads (and since this is a static schedule), thread one executes the i={4,5,6,7} iterations and finds the answer on its first iteration. With 50 threads, thread zero executes iterations {0,1,2,3,4,5}, and you won't find the answer until four iterations have been executed. In general, you'd expect the performance to follow a sawtooth pattern as you alter the number of threads.
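The example can be reproduced with a short sketch. It assumes the runtime splits the iterations into one contiguous block per thread, with the first (n mod threads) threads receiving one extra iteration. That is a common way to implement schedule(static) without a chunk size, but the exact split is implementation-defined. The function names are hypothetical.

```c
#include <assert.h>

/* First iteration index owned by thread `tid` under the assumed
   block partition; chunk_start(n, threads, threads) equals n. */
static int chunk_start(int n, int threads, int tid) {
    int q = n / threads;
    int r = n % threads;
    return tid * q + (tid < r ? tid : r);
}

/* Number of outer iterations the owning thread executes before it
   reaches `answer`, or -1 if `answer` is out of range. */
static int iterations_before_hit(int n, int threads, int answer) {
    assert(n > 0 && threads > 0);
    for (int tid = 0; tid < threads; tid++) {
        int start = chunk_start(n, threads, tid);
        int end = chunk_start(n, threads, tid + 1);
        if (answer >= start && answer < end) {
            return answer - start;
        }
    }
    return -1;
}
```

With n = 256 and the answer at i = 4, iterations_before_hit returns 0 for 64 threads and 4 for 50 threads, matching the example. Evaluating it over a range of thread counts gives the sawtooth.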

Yet another interesting integer-code example ...

I'm not really surprised that the KNC does not perform that well on this. I've grabbed the code from the github link and compiled it for my Phi 5120; the code runs about as fast on the KNC as it does when using ~11 threads on the host (Sandy Bridge) E5.

The vectorization report from the compiler also states that it cannot really vectorize the loops - something which you'd need for true performance improvement.

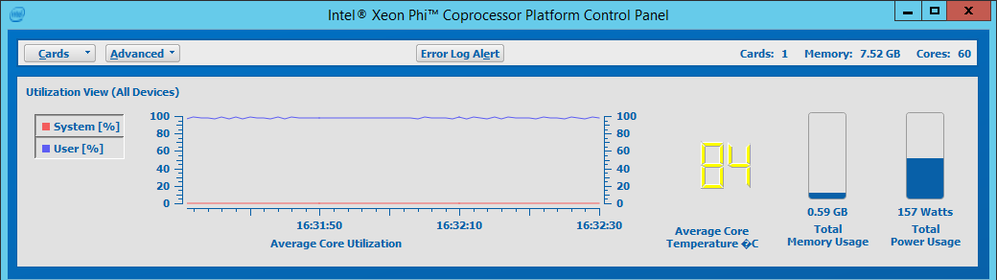

And as James stated, I do see the sawtooth pattern while the code is running on the Phi:

You are looking at something different there: the utilization of the cores over time. (That will also show a sawtooth, because of load imbalance, but it is not what I was referring to.) What I was saying is that if you vary the number of cores you run the code with and plot performance against the number of cores (a classic "scaling" graph, [but I hope you do it right]), you should expect to see a sawtooth there, because of the properties of static scheduling and the fact that you stop as soon as you find an answer. That behaviour is unrelated to the machine hardware; it is just more obvious when you have more cores to play with!

>> What are the values for: is, js, ks, ls

These variables come from the original code, where they exist to simplify testing; in my case they are all 0.

>> Do you mean 2 CPUs, each with 6 cores, 2 threads per core? (2 x 6 x 2 = 24 hardware threads)

Yes, I rechecked and realized I was wrong (I have changed the title of this topic; it sounded too sensational). You specified the correct configuration: 12 cores and 24 threads.

>> KMP_AFFINITY=scatter

>> OMP_NUM_THREADS=120

I set KMP_AFFINITY=scatter (and also tried "balanced"), and this slightly increased performance. If I limit the number of threads to 120 (with the OMP_NUM_THREADS or KMP_HW_SUBSET environment variables), the program runs ~1.5 times slower than without the limit (234 threads).

>> Depending on the values of is, js, ks, ls, you might want to collapse (join) multiple loops

>> #pragma omp parallel for collapse(2) // or (3) or (4) or (5)

This slightly increased performance too, thanks.

JJK, I moved the parallel region to the outermost loop (see my first message), and this is my pattern (using "collapse"):

In the end the program runs ~1.4-1.5 times slower on the Xeon Phi than on the host, which looks a little better. Is this normal? Are there other environment variables I have not considered?

Regards,

Alexander.
