Hi,

The HPL performance on my Ice Lake server is unexpectedly poor.

CPU: Dual Intel(R) Xeon(R) Gold 6346 CPU @ 3.10GHz

OS: CentOS Linux release 7.9.2009 (Core)

HPL: xhpl_intel64_dynamic (oneMKL/21.3)

1. Rpeak

Since each 6346 core is equipped with two AVX-512 FMA units (32 FP64 FLOPs per cycle), the peak performance is:

2 sockets x 16 cores x 32 FLOPs/cycle x 3.1 GHz = 3174.4 GFlops
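The arithmetic above can be written as a small Python function. This is a minimal sketch assuming two 512-bit FMA units per core (8 doubles per vector, 2 FLOPs per FMA); the function name `rpeak_gflops` is hypothetical, and the clock frequency is simply an input, so the result is only as good as the frequency assumed:

```python
def rpeak_gflops(sockets: int, cores_per_socket: int, ghz: float,
                 fma_units: int = 2, simd_doubles: int = 8) -> float:
    """Theoretical FP64 peak in GFLOPS.

    FLOPs per cycle per core = FMA units x doubles per vector x 2
    (each fused multiply-add counts as two floating-point operations).
    """
    flops_per_cycle = fma_units * simd_doubles * 2
    return sockets * cores_per_socket * flops_per_cycle * ghz


# Dual Xeon Gold 6346 (16 cores each) evaluated at the 3.1 GHz nominal clock
print(f"{rpeak_gflops(2, 16, 3.1):.1f} GFLOPS")
```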

2. Rmax

The following HPL.dat was used:

HPL.out output file name (if any)

6 device out (6=stdout,7=stderr,file)

1 # of problems sizes (N)

100000 Ns

1 # of NBs

384 NBs

1 PMAP process mapping (0=Row-,1=Column-major)

1 # of process grids (P x Q)

1 Ps

2 Qs

16.0 threshold

1 # of panel fact

0 PFACTs (0=left, 1=Crout, 2=Right)

1 # of recursive stopping criterium

2 NBMINs (>= 1)

1 # of panels in recursion

2 NDIVs

1 # of recursive panel fact.

0 RFACTs (0=left, 1=Crout, 2=Right)

1 # of broadcast

3 BCASTs (0=1rg,1=1rM,2=2rg,3=2rM,4=Lng,5=LnM)

1 # of lookahead depth

0 DEPTHs (>=0)

2 SWAP (0=bin-exch,1=long,2=mix)

1 swapping threshold

1 L1 in (0=transposed,1=no-transposed) form

1 U in (0=transposed,1=no-transposed) form

1 Equilibration (0=no,1=yes)

8 memory alignment in double (> 0)

- The block size of 384 is the recommended value for Xeon Scalable processors.

- Two MPI ranks are used for this dual-socket server, i.e., MPI_PER_NODE=2 and MPI_PROC_NUM=2.
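Two quick checks on the input above can be scripted: the N x N FP64 matrix occupies 8·N² bytes in total (split across all ranks), and P x Q must equal the number of MPI ranks. A common tuning rule of thumb (not a requirement of HPL) is also to choose N as a multiple of NB. The sketch below is illustrative; `check_hpl_input` is a hypothetical helper, and the memory figure covers the matrix only, not HPL's extra workspace:

```python
def check_hpl_input(n: int, nb: int, p: int, q: int, mpi_ranks: int) -> dict:
    """Sanity checks for a single HPL.dat problem (hypothetical helper)."""
    matrix_gib = 8 * n * n / 2**30          # FP64 matrix only, all ranks combined
    return {
        "matrix_GiB": round(matrix_gib, 1),
        "grid_matches_ranks": p * q == mpi_ranks,
        "n_multiple_of_nb": n % nb == 0,
        "suggested_n": (n // nb) * nb,      # largest multiple of NB not above N
    }


# Values from the HPL.dat above: N=100000, NB=384, P=1, Q=2, 2 MPI ranks
print(check_hpl_input(100000, 384, 1, 2, 2))
```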

Results

| T/V | N | NB | P | Q | Time (s) | Gflops |
|---|---|---|---|---|---|---|
| WC03L2L2 | 100000 | 384 | 1 | 2 | 349.18 | 1.90929e+03 |

HPL_pdgesv() start time Mon Apr 25 10:20:16 2022

HPL_pdgesv() end time Mon Apr 25 10:26:06 2022

`||Ax-b||_oo/(eps*(||A||_oo*||x||_oo+||b||_oo)*N)= 3.32239514e-03 ...... PASSED`

This translates to only 60% of the theoretical performance, which is very confusing to me.
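The Gflops column can be reproduced from N and the wall time: HPL credits the solve with 2/3·N³ + 3/2·N² floating-point operations. A short sketch that recomputes the reported rate and the ~60% figure relative to the nominal-clock Rpeak of 3174.4 GFlops (the function name is illustrative):

```python
def hpl_gflops(n: int, seconds: float) -> float:
    """GFLOPS as HPL reports it: (2/3 N^3 + 3/2 N^2) operations / wall time."""
    flops = (2.0 / 3.0) * n**3 + 1.5 * n**2
    return flops / seconds / 1e9


rmax = hpl_gflops(100000, 349.18)      # run above: N=100000, Time=349.18 s
rpeak_nominal = 2 * 16 * 32 * 3.1      # nominal-clock Rpeak from section 1
print(f"Rmax ~ {rmax:.1f} GFLOPS, efficiency ~ {rmax / rpeak_nominal:.1%}")
```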

3. Questions:

- Is the CPU being throttled? If so, how can I investigate the performance bottleneck?

- How can I further improve HPL performance on an Ice Lake server?

- Does HPL require specific BIOS settings for benchmarking?

Regards.

I don't know where Intel hides the document that lists the Turbo frequencies for the 3rd gen Xeon Scalable Processors, but my copy says that the Xeon Gold 6346 has a base AVX512 frequency of 2.5 GHz and a max all-core AVX-512 frequency of 3.5 GHz.

Assuming the lower number, the peak FP64 performance would be 2 x 16 cores x 32 FLOPs/cycle x 2.5 GHz = 2560 GFLOPS. Intel's tuned HPL typically delivers about 90% of peak, so I would expect the HPL result to be at least 2300 GFLOPS (more if the average frequency is higher). Some versions of Intel xHPL report the frequency; alternatively, you can run the executable under "perf stat", or run "turbostat" to monitor frequencies and power consumption while it runs.
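The bounds in this reply follow from evaluating the peak formula at the two AVX-512 frequencies and applying the ~90% efficiency that tuned HPL typically reaches. A sketch using the 6346 figures quoted above (2.5 GHz base AVX-512, 3.5 GHz max all-core AVX-512 Turbo); the 0.90 factor is the rule of thumb from the text, not a guarantee:

```python
CORES = 2 * 16            # dual-socket Xeon Gold 6346
FLOPS_PER_CYCLE = 32      # FP64, two 512-bit FMA units per core


def peak(ghz: float) -> float:
    """Node FP64 peak in GFLOPS at a given all-core frequency."""
    return CORES * FLOPS_PER_CYCLE * ghz


lower = peak(2.5)         # base AVX-512 frequency -> lower bound on peak
upper = peak(3.5)         # max all-core AVX-512 Turbo -> upper bound on peak
expected_min = 0.90 * lower

print(f"peak between {lower:.0f} and {upper:.0f} GFLOPS; "
      f"expect HPL >= ~{expected_min:.0f} GFLOPS")
```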

With an appropriate power supply and cooling system, frequencies should not drop below 2.5 GHz when running xHPL -- if they do, you would certainly want to use turbostat (or some other tool) to monitor power consumption and temperature during the run.

I would expect the OS to report if the package temperature limit is hit, but it is very hard to keep track of all the layers of management software that might block or redirect such messages.

We had unexpectedly low HPL performance on our Xeon Platinum 8380 processors initially, but fairly quickly figured out that the processors were getting a throttle signal from an overheating power supply. Increasing the fan speeds on the power supplies fixed the problem, and these ICX processors are now running very well.

Hi John,

Thanks for your insights into HPL.

I could not find the specification document for the 3rd Gen Xeon Scalable Processors either. But based on your reply, it seems that the nominal frequency is now lower than the all-core AVX-512 Turbo frequency.

From your previous reply here: https://community.intel.com/t5/Software-Tuning-Performance/Linpack-result-for-Intel-Xeon-Gold-6148-cluster/m-p/1151395

Xeon(R) Gold 6148:

- Base AVX512: 1.6 GHz

- All-core AVX512: 2.2 GHz

- Nominal: 2.4 GHz (unrealistic)

Xeon(R) Gold 6346:

- Base AVX512: 2.5 GHz

- All-core AVX512: 3.5 GHz

- Nominal: 3.1 GHz (realistic)

Using perf, we observed an average CPU frequency of ~2.4 GHz, and we are now checking the power and cooling systems.

So my takeaway is that it is no longer appropriate to use the nominal frequency to calculate Rpeak.

One should measure the sustained frequency and derive Rpeak from it:

Rpeak = 2.4 GHz * 32 cores * 32 FLOPs/cycle = 2457.6 GFlops

Rmax = 1909 GFlops, or about 78% of Rpeak

Do I understand this correctly?
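The same calculation can be repeated for each candidate clock to see how much the choice of frequency moves the efficiency figure. A minimal sketch using the numbers from this post (1909.29 GFLOPS is the Rmax reported by the run, 32 cores in total); with the ~2.4 GHz measured by perf the efficiency comes out nearer 78% than 80%:

```python
def efficiency(rmax_gflops: float, cores: int, ghz: float,
               flops_per_cycle: int = 32) -> float:
    """Fraction of the peak implied by a given (measured or nominal) clock."""
    return rmax_gflops / (cores * flops_per_cycle * ghz)


rmax = 1909.29                                # GFLOPS from the HPL run
for label, ghz in [("nominal", 3.1), ("measured", 2.4), ("base AVX-512", 2.5)]:
    print(f"{label:>13}: Rpeak {32 * 32 * ghz:7.1f} GFLOPS, "
          f"efficiency {efficiency(rmax, 32, ghz):.1%}")
```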

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think your understanding is correct. There are several "peak" values that can be computed, but none of them is exactly what we are used to seeing.

- Computing the "Peak GFLOPS" using the "Base AVX-512 frequency" will give a lower bound on the peak GFLOPS.

- If the processor does not sustain that frequency while running HPL, there is probably a problem with the processor or the cooling or the power supply.

- All of our systems are purchased with an HPL performance requirement that corresponds to a sustained frequency that is slightly higher than the "Base AVX-512" frequency (though the details differ for each generation of processors).

- Computing the "Peak GFLOPS" using the "Max All-core AVX-512 Turbo frequency" will give an upper bound on the peak GFLOPS, but due to power-limited frequency throttling, it is no longer as tight an upper bound as it used to be on earlier processors.

- When running HPL, every processor will adjust its frequency to run as close to the TDP as possible.

- The resulting average frequency will depend on the intrinsic leakage properties of the processor die combined with the specifics of the cooling system. (E.g., in many systems one socket is "downwind" of the other, so tends to run hotter. Leakage current increases exponentially with temperature, so these processors will have to reduce the frequency more to keep power consumption under TDP.)

- The average frequency should be between the "Base AVX-512 frequency" and the "Max All-core AVX-512 Turbo frequency", but sometimes this is a pretty wide range. E.g., in the Xeon Platinum 8280 (Cascade Lake) processors of the TACC Frontera system, the Base AVX-512 frequency is 1.8 GHz, while the max all-core AVX-512 Turbo frequency is 2.5 GHz -- almost 40% higher.

- When running single-node HPL, it is sometimes important to remember that the two sockets will (in general) have different average frequencies. If the differences are large enough, the "faster" chip may finish early and wait for the slower chip (though this depends on details of the HPL implementation -- some use dynamic scheduling of blocks within a socket and others may use dynamic scheduling across sockets.)

- On the TACC Frontera system (Xeon Platinum 8280, 28-core Cascade Lake processors), the version of HPL that we ran for acceptance includes code to monitor the frequency (core 0 only, I think), and reports "HPL Efficiency by CPU Cycle" at the end of the run. For the ~7879 nodes with results on the test date:

  - Measured HPL performance varies from 3115.1 GFLOPS to 3481.9 GFLOPS, a range of ~11.8%.

  - Using the Base AVX512 frequency, the lower bound on the peak GFLOPS for these nodes is 3225.6 GFLOPS.

  - Using the Max All-core AVX512 Turbo frequency, the upper bound on the peak GFLOPS is 4480.0 GFLOPS.

  - 90% of the results reported "HPL Efficiency by CPU Cycle" between 85% and 91%.

  - The remaining results were almost all in the 77%-85% range. My guess is that these nodes had significant differences in average CPU frequency between the sockets, with socket 0 (where cycles are counted) running significantly faster than socket 1 (which was the performance limiter).

- I don't have comparable results for our Xeon 8380 (40-core Ice Lake) nodes -- the testing done there did not include the frequency monitoring, but the results were consistent with average frequencies in the 1.9 to 2.0 GHz range.
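The Frontera bounds above can be checked the same way for dual 28-core Xeon Platinum 8280 nodes (1.8 GHz base AVX-512, 2.5 GHz max all-core AVX-512 Turbo), along with the spread of the measured results. A small sketch using only the numbers quoted in this reply:

```python
CORES_PER_NODE = 2 * 28   # dual Xeon Platinum 8280
FLOPS_PER_CYCLE = 32      # FP64, two 512-bit FMA units per core

lower = CORES_PER_NODE * FLOPS_PER_CYCLE * 1.8   # base AVX-512 frequency
upper = CORES_PER_NODE * FLOPS_PER_CYCLE * 2.5   # max all-core AVX-512 Turbo

measured_min, measured_max = 3115.1, 3481.9      # HPL GFLOPS range across nodes
spread = measured_max / measured_min - 1         # relative node-to-node range
turbo_gain = 2.5 / 1.8 - 1                       # all-core Turbo over base

print(f"peak bounds: {lower:.1f} to {upper:.1f} GFLOPS")
print(f"measured spread: {spread:.1%}; all-core Turbo over base: {turbo_gain:.0%}")
```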

1+2:

I cross-checked the 2nd Gen specifications against Intel ARK. It is now clear to me that the nominal frequency actually corresponds to the non-AVX frequency. For the Xeon Platinum 8280, it is 2.7 GHz (non-AVX) versus 1.8 GHz (AVX-512 base). Applying the 32 FLOPs-per-cycle AVX-512 factor to the non-AVX frequency therefore grossly inflates the theoretical performance.

3:

I reduced the number of cores used via the HPL_HOST_CORE environment variable, expecting a performance gain because each active core would then run at a higher clock. But performance actually dropped in this case. So it is still better to use all cores for the HPL benchmark, even at the base AVX-512 frequency.

4:

I tested each socket separately via the HPL_HOST_NODE environment variable. There is indeed a ~5% performance difference between them. With two sockets, the loss relative to twice the single-socket result is about 17% (1.9 vs. 2 x 1.15 = 2.3 TFlops):

one socket: 1.15 TFlops

two sockets: 1.9 TFlops

If there is a way to improve cross-socket scheduling, as you mentioned, performance would probably be better.
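The two-socket loss can be expressed as a scaling efficiency: the dual-socket rate divided by twice the single-socket rate. A sketch with the numbers above, treating 1.15 TFLOPS as representative of both sockets (an assumption, since the sockets were measured ~5% apart); the helper name is illustrative:

```python
def scaling_efficiency(one_socket: float, two_sockets: float) -> float:
    """Dual-socket rate divided by the ideal (2x single-socket) rate."""
    return two_sockets / (2 * one_socket)


eff = scaling_efficiency(1.15, 1.9)    # TFLOPS, from the runs above
print(f"scaling efficiency {eff:.1%}, loss {1 - eff:.1%}")
```

If the slower socket were used as the reference instead, the ideal would be lower and the apparent loss somewhat smaller.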

5+6:

Thanks for sharing the data from Frontera. This gives us a good reference frame to judge the performance of our system.

Is the HPL version with frequency monitoring available for download, e.g., from GitHub?

Our recent experience with the 8360Y shows that it also runs close to its AVX-512 base frequency of 1.8 GHz.

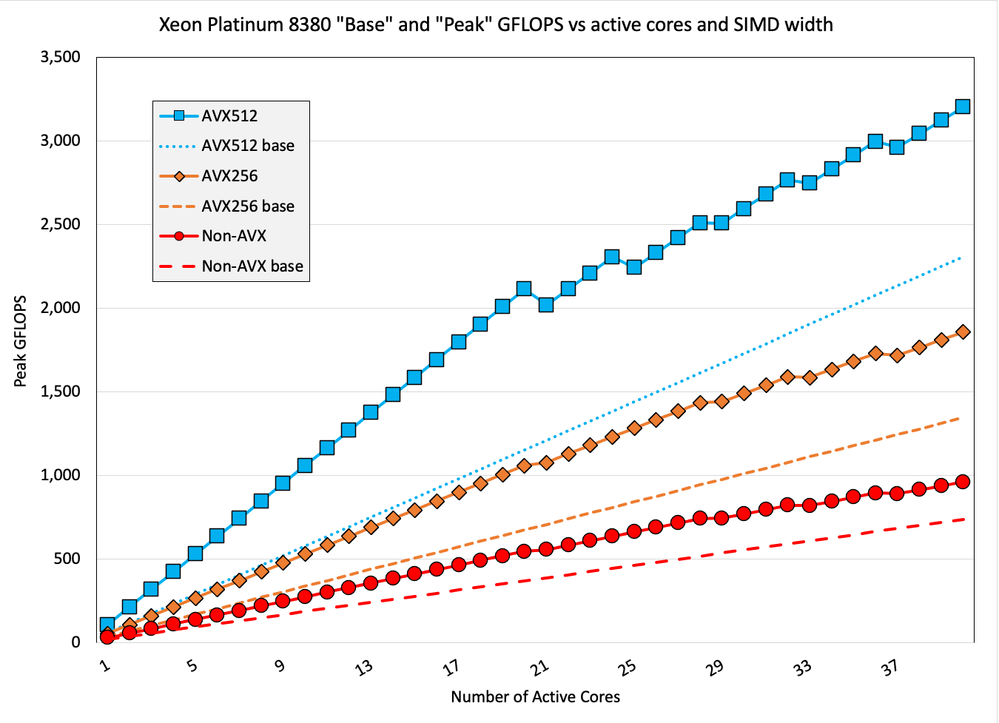

Reducing the core count will not increase the aggregate peak performance for any of the configurations I have looked at. For the Xeon Platinum 8380, the figure below shows the "base" and "peak" FP64 GFLOPS for this processor as a function of core count and SIMD instruction width.

- FLOPS per cycle by SIMD width

- "Non-AVX": includes 128-bit SIMD from AVX2 and AVX512 instruction sets, so 2 128-bit FMAs per cycle = 8 FLOPS/cycle

- "AVX256": 2 256-bit FMAs per cycle = 16 FLOPS/cycle

- "AVX512": 2 512-bit FMAs per cycle = 32 FLOPS/cycle

- Frequency:

- "Base" uses the guaranteed base frequency for each SIMD width (2.3, 2.1, 1.8 GHz for this processor) for all core counts.

- "Peak" uses the maximum Turbo frequency for each SIMD width and active core count.
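The "Base" curves follow directly from these definitions. A sketch for one 40-core Xeon Platinum 8380 socket using the base frequencies listed above; it covers only the base curves, since the "Peak" curves need Intel's per-core-count Turbo frequency tables, which are not listed in this thread:

```python
# (FP64 FLOPs/cycle, base GHz) per SIMD width for the Xeon Platinum 8380
SIMD = {
    "Non-AVX": (8, 2.3),
    "AVX256": (16, 2.1),
    "AVX512": (32, 1.8),
}


def base_gflops(active_cores: int, width: str) -> float:
    """Per-socket FP64 GFLOPS at the guaranteed base frequency for this SIMD width."""
    flops_per_cycle, ghz = SIMD[width]
    return active_cores * flops_per_cycle * ghz


for cores in (10, 20, 30, 40):
    row = ", ".join(f"{w}: {base_gflops(cores, w):7.1f}" for w in SIMD)
    print(f"{cores:2d} cores -> {row}")
```

At base frequency the aggregate rate scales linearly with active cores, so dropping cores can only pay off if the Turbo gain per core outweighs the lost cores.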

The version of HPL that reports the frequency came from Intel at some point, but I don't know where or when we obtained it.

Once again, thank you for your insights and explanation.
