Dear Support Team,

I am compiling a hybrid DPC++/MPI program taken from the oneAPI sample codes.

I have also attached the file for reference.

Could you let me know the correct way to compile and run the code?

1. According to the sample code itself:

$ mpiicpc -cxx=dpcpp pi_mpi_dpcpp.cpp -o pi_mpi.x

Compilation was successful. Yet, the following error was generated when running:

$ qsub -I -l nodes=1:gen9:ppn=2

$ mpirun -np 2 ./pi_mpi.x

[0] MPI startup(): I_MPI_PM environment variable is not supported.

[0] MPI startup(): Similar variables:

I_MPI_PIN

I_MPI_SHM

I_MPI_PLATFORM

I_MPI_PMI

I_MPI_PMI_LIBRARY

[0] MPI startup(): I_MPI_RANK_CMD environment variable is not supported.

[0] MPI startup(): I_MPI_CMD environment variable is not supported.

[0] MPI startup(): Similar variables:

I_MPI_CC

[0] MPI startup(): To check the list of supported variables, use the impi_info utility or refer to https://software.intel.com/en-us/mpi-library/documentation/get-started.

Abort was called at 39 line in file:

/opt/src/opencl/shared/source/built_ins/built_ins.cpp

Abort was called at 39 line in file:

/opt/src/opencl/shared/source/built_ins/built_ins.cpp

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= PID 241139 RUNNING AT s001-n141

= EXIT CODE: 6

= CLEANING UP REMAINING PROCESSES

= YOU CAN IGNORE THE BELOW CLEANUP MESSAGES

===================================================================================

Intel(R) MPI Library troubleshooting guide:

https://software.intel.com/node/561764

===================================================================================

2. According to github: (https://github.com/oneapi-src/oneAPI-samples/tree/master/DirectProgramming/DPC%2B%2B/ParallelPatterns/dpc_reduce)

The correct compilation should be:

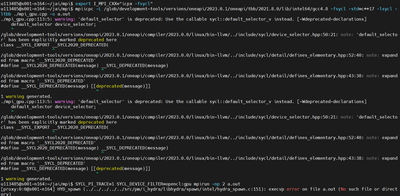

$ mpiicpc -O3 -fsycl -std=c++17 -fsycl-unnamed-lambda pi_mpi_dpcpp.cpp

Compilation failed with the following error:

icpc: error #10408: The Intel(R) oneAPI DPC++ compiler cannot be found in the expected location. Please check your installation or documentation for more information.

/glob/development-tools/versions/oneapi/2022.3.1/oneapi/compiler/2022.2.1/linux/bin/clang++: No such file or directory

Perhaps the path to the clang++ compiler was not set correctly. Please kindly advise.

Regards.
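A minimal sketch for narrowing down the "compiler cannot be found" error above, assuming the oneAPI environment has already been sourced in the current shell. The `check_tool` helper is a hypothetical name introduced here for illustration. It reports where each compiler driver resolves on `PATH`, and `mpiicpc -show` (a standard option of the Intel MPI compiler wrappers) prints the underlying compile line without running it, which shows which compiler the wrapper actually invokes.

```shell
#!/bin/sh
# check_tool is a hypothetical helper, not part of oneAPI.
# It prints where a command resolves on PATH, or flags it as missing.
check_tool() {
    if path=$(command -v "$1" 2>/dev/null); then
        echo "$1: $path"
    else
        echo "$1: not found on PATH"
    fi
}

# Compiler drivers mentioned in this thread.
for tool in mpiicpc icpx dpcpp clang++; do
    check_tool "$tool"
done

# Show the command line the wrapper would run, without compiling anything.
if command -v mpiicpc >/dev/null 2>&1; then
    mpiicpc -show
fi
```

If a driver is reported as missing, the wrapper cannot use it regardless of the flags passed on the command line.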

Hi,

Sorry for the delay.

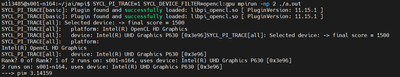

I am able to build and run on a DevCloud GPU node with the commands below. A screenshot of mpirun using the GPU is attached.

>>Log in to a GPU node with the command below:

qsub -I -l nodes=1:gen9:ppn=2

>>After logging into the compute node, please run the commands below:

export I_MPI_CXX="icpx -fsycl"

mpiicpc -L /glob/development-tools/versions/oneapi/2023.0.1/oneapi/tbb/2021.8.0/lib/intel64/gcc4.8 -fsycl -std=c++17 -lsycl -ltbb ./<input_file> -o a.out

SYCL_PI_TRACE=1 SYCL_DEVICE_FILTER=opencl:gpu mpirun -np 2 ./a.out

If this resolves your issue, please accept it as a solution. This would help others with a similar issue. Thank you!

Regards,

Jaideep
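The steps in the post above can be collected into a small script. This is only a sketch: `build_cmd`, `run_cmd`, and the `TBB_LIB` variable are hypothetical names introduced here, and the default TBB path is the DevCloud-specific one quoted in the post, so it will likely need adjusting on other systems. The script prints the build and run lines for review instead of executing them.

```shell
#!/bin/sh
# TBB_LIB is a hypothetical variable; its default is the DevCloud path from the post.
TBB_LIB=${TBB_LIB:-/glob/development-tools/versions/oneapi/2023.0.1/oneapi/tbb/2021.8.0/lib/intel64/gcc4.8}

# Print the compile line. $1 = source file, $2 = output binary.
build_cmd() {
    echo "mpiicpc -L $TBB_LIB -fsycl -std=c++17 -lsycl -ltbb $1 -o $2"
}

# Print the launch line. $1 = binary, $2 = number of MPI ranks.
run_cmd() {
    echo "SYCL_PI_TRACE=1 SYCL_DEVICE_FILTER=opencl:gpu mpirun -np $2 $1"
}

# Make mpiicpc drive icpx in SYCL mode, as in the post.
export I_MPI_CXX="icpx -fsycl"

build_cmd pi_mpi_dpcpp.cpp ./a.out
run_cmd ./a.out 2
```

To execute a printed line rather than just display it, it can be passed to `eval`, for example `eval "$(build_cmd pi_mpi_dpcpp.cpp ./a.out)"`.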

Hi,

Thank you for posting in Intel communities.

We were able to reproduce your issue on our end and are working on it internally. We will get back to you with an update.

Thanks,

Jaideep

Dear Jaideep,

Thanks for looking into this issue.

I was able to make a little progress by manually setting I_MPI_CXX, i.e.

$ export I_MPI_CXX=dpcpp

$ mpiicpc -fsycl -std=c++17 -lsycl pi_mpi_dpcpp.cpp -o pi_mpi.x

Compilation succeeded, and the correct result was observed with the CPU backend:

$ SYCL_PI_TRACE=1 SYCL_DEVICE_FILTER=opencl:cpu mpirun -np 2 ./pi_mpi.x

SYCL_PI_TRACE[all]: Selected device ->

SYCL_PI_TRACE[all]: platform: Intel(R) OpenCL

SYCL_PI_TRACE[all]: device: 11th Gen Intel(R) Core(TM) i9-11900KB @ 3.30GHz

SYCL_PI_TRACE[all]: Selected device ->

SYCL_PI_TRACE[all]: platform: Intel(R) OpenCL

SYCL_PI_TRACE[all]: device: 11th Gen Intel(R) Core(TM) i9-11900KB @ 3.30GHz

Rank? 0 of 2 runs on: s019-n002, uses device: 11th Gen Intel(R) Core(TM) i9-11900KB @ 3.30GHz

Rank? 1 of 2 runs on: s019-n002, uses device: 11th Gen Intel(R) Core(TM) i9-11900KB @ 3.30GHz

---> pi= 3.14159

For the GPU backend, an exception was caught.

SYCL_PI_TRACE[basic]: Plugin found and successfully loaded: libpi_opencl.so

SYCL_PI_TRACE[basic]: Plugin found and successfully loaded: libpi_opencl.so

SYCL_PI_TRACE[all]: Selected device ->

SYCL_PI_TRACE[all]: platform: Intel(R) OpenCL HD Graphics

SYCL_PI_TRACE[all]: device: Intel(R) UHD Graphics [0x9a60]

Rank? 0 of 2 runs on: s019-n002, uses device: Intel(R) UHD Graphics [0x9a60]

Failure

SYCL_PI_TRACE[all]: Selected device ->

SYCL_PI_TRACE[all]: platform: Intel(R) OpenCL HD Graphics

SYCL_PI_TRACE[all]: device: Intel(R) UHD Graphics [0x9a60]

Rank? 1 of 2 runs on: s019-n002, uses device: Intel(R) UHD Graphics [0x9a60]

Failure

---> pi= 0

Unfortunately, the exception does not provide much information beyond the 'Failure' message. I refactored the code by removing the try/catch clause. Please refer to the attached file. The exact error message is:

$ SYCL_PI_TRACE=1 SYCL_DEVICE_FILTER=opencl:gpu mpirun -np 2 pi_mpi.x

terminate called after throwing an instance of 'cl::sycl::compile_program_error'

terminate called after throwing an instance of 'cl::sycl::compile_program_error'

what(): The program was built for 1 devices

Build program log for 'Intel(R) UHD Graphics [0x9a60]':

-6 (CL_OUT_OF_HOST_MEMORY)

what(): The program was built for 1 devices

Build program log for 'Intel(R) UHD Graphics [0x9a60]':

-6 (CL_OUT_OF_HOST_MEMORY)

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= PID 495706 RUNNING AT s019-n002

= EXIT CODE: 1

= CLEANING UP REMAINING PROCESSES

= YOU CAN IGNORE THE BELOW CLEANUP MESSAGES

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= PID 495706 RUNNING AT s019-n002

= EXIT CODE: 1

= CLEANING UP REMAINING PROCESSES

= YOU CAN IGNORE THE BELOW CLEANUP MESSAGES

===================================================================================

Intel(R) MPI Library troubleshooting guide:

https://software.intel.com/node/561764

===================================================================================

I only have access to the gen9/gen11 queues on Intel DevCloud and cannot validate the code elsewhere.

Thanks.
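One way to separate an MPI problem from a SYCL device problem in a case like the one above is to list the visible devices and then try a single rank per backend. The sketch below assumes the oneAPI environment is sourced and that `sycl-ls` (the device listing tool shipped with the DPC++ compiler) is available. `list_devices` and `single_rank_cmd` are hypothetical helper names, and the script only prints the run lines. It does not diagnose the `CL_OUT_OF_HOST_MEMORY` error itself.

```shell
#!/bin/sh
# List the devices the SYCL runtime can see, if sycl-ls is installed.
list_devices() {
    if command -v sycl-ls >/dev/null 2>&1; then
        sycl-ls
    else
        echo "sycl-ls not found; source the oneAPI environment first"
    fi
}

# Print a one-rank run line for a given OpenCL device type. $1 = cpu or gpu.
single_rank_cmd() {
    echo "SYCL_PI_TRACE=1 SYCL_DEVICE_FILTER=opencl:$1 mpirun -np 1 ./pi_mpi.x"
}

list_devices
single_rank_cmd cpu
single_rank_cmd gpu
```

If the single-rank GPU run fails in the same way, the launcher and rank count are unlikely to be the cause.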

Hi,

Sorry for the delay, we are working on this internally. We will get back to you with an update.

Thanks,

Jaideep

Hi,

If this resolves your issue, please accept it as a solution. This would help others with a similar issue. Thank you!

Thanks,

Jaideep

Hi Jaideep,

Sorry for the belated reply.

I confirm that the code now works as expected after following your suggestion. The solution has been accepted.

Thanks for getting back to the issue even after a long time had passed.

Regards.

Hi,

Good day to you.

Glad to know that your issue is resolved. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Have a great day ahead.

Regards,

Jaideep
